Prompt Flow

Today, I’m going to delve into a topic that most of you probably haven’t heard of. I myself only learned about it through a presentation by Microsoft, and I’m quite impressed with what I’ve seen. But presentations usually make everything look great, so I wanted to put the tool to the test myself.

But what exactly is Prompt flow? Here’s the definition from Microsoft itself:

> Prompt flow is a suite of development tools designed to streamline the end-to-end development cycle of LLM-based AI applications, from ideation, prototyping, testing, evaluation to production deployment and monitoring. It makes prompt engineering much easier and enables you to build LLM apps with production quality.

Prompt flow comes from Microsoft and is mostly written in Python. The project is still relatively young and looks very promising. But enough theory, let’s take a look at Prompt flow in practice.

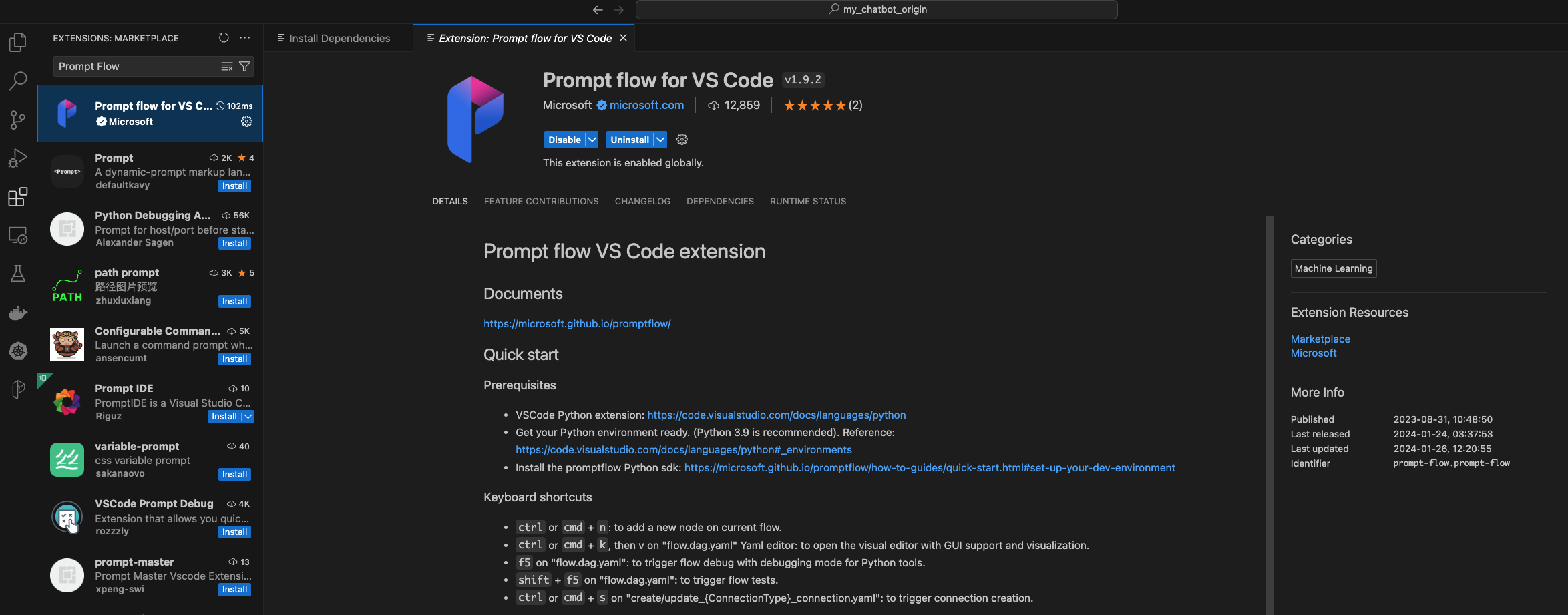

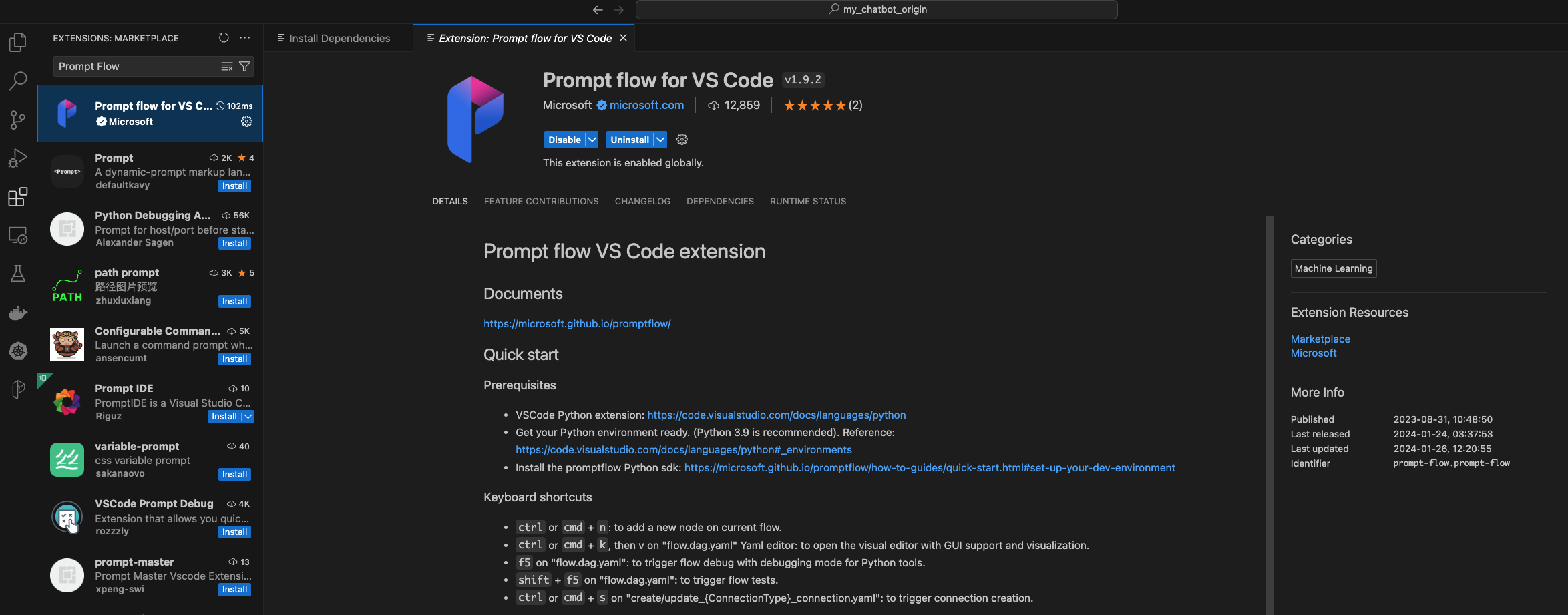

On the Prompt flow GitHub page, there’s a YouTube video that I followed step by step. It shows how to use Prompt flow in Visual Studio Code, where an extension is supposed to help with creating a Prompt flow project. However, the video skips ahead abruptly in places, which caused me some confusion.

When I look at the PromptFlow extension, I see that Python version 3.9 is listed as recommended.

So, install the specified version on the Mac, if not already present, and let’s get started.

```shell
brew install python@3.9
```

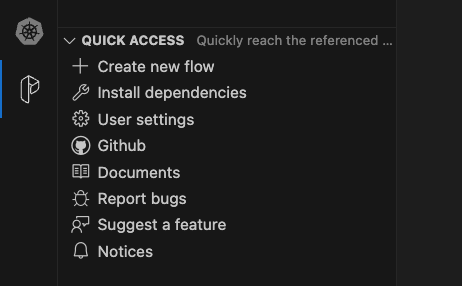

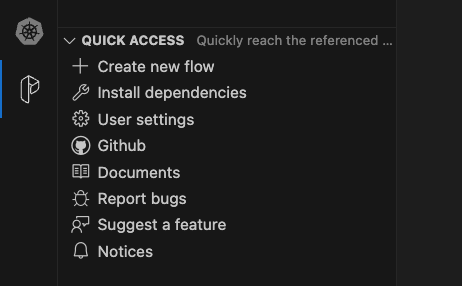

Now I install the Visual Studio Code extension. After I’ve done that, I see a new icon in the left menu bar. If I click on it, I have the option to create a new flow.

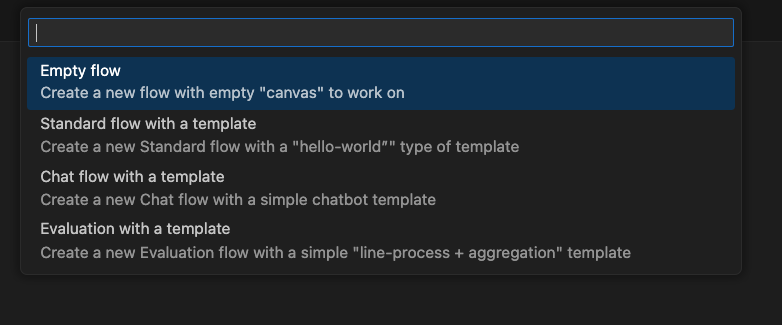

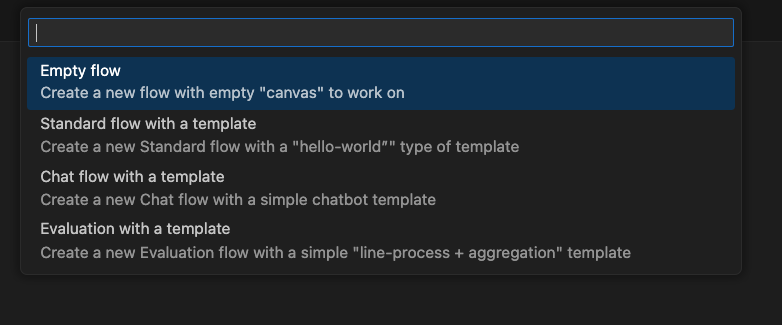

I select “Chat flow with a template” in the pop-up window and specify the path to the folder where I want to create the new flow.

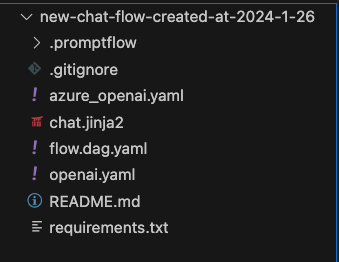

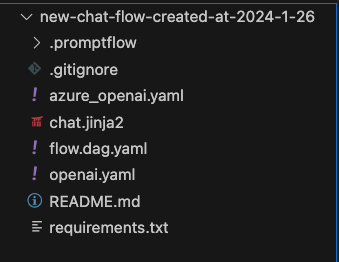

Once that’s done, a new project opens in VS Code with the following structure:

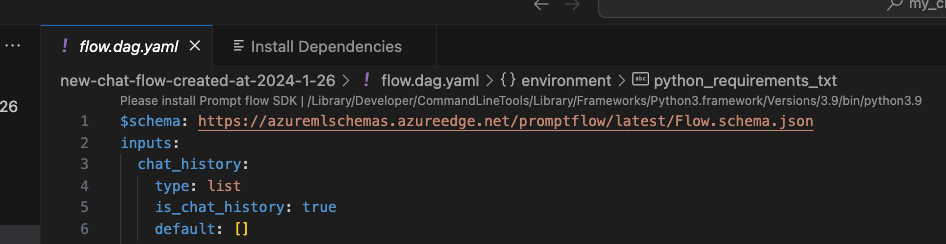

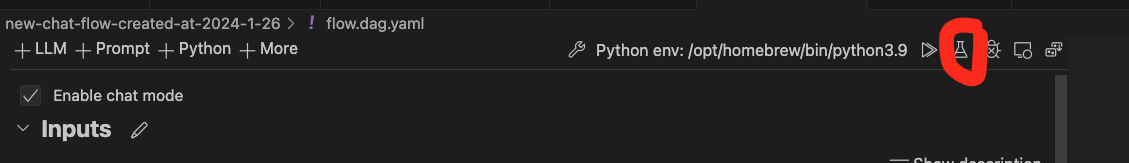

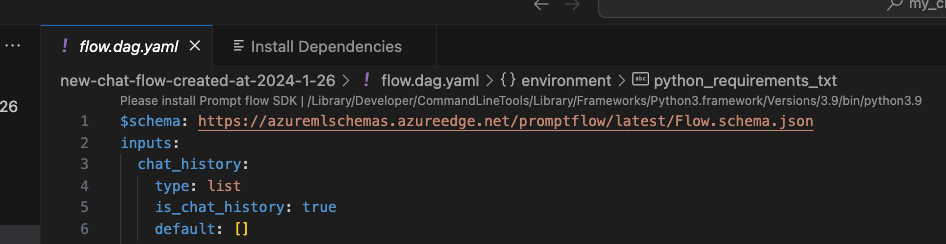

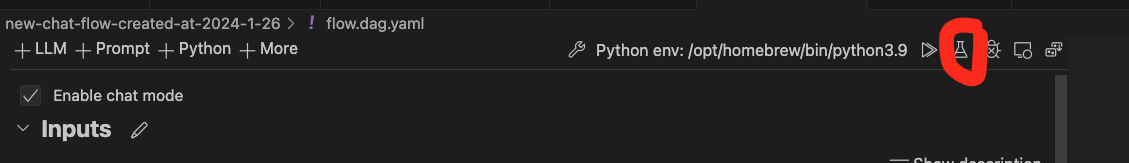

We’ll take a closer look at the files later. According to the video, we should now go to the file flow.dag.yaml and open the Visual Editor there.

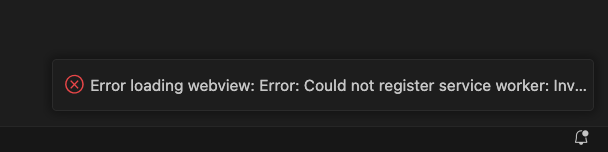

So, as suggested, I try to install the Prompt flow SDK first, unfortunately without success. The following error message appears:

“Error loading webview: Error: Could not register service worker”

At this point, I have to stop the video and try to fix this error first.

A quick Google search helps: the first suggested solution, killing all Visual Studio Code processes, already has the desired effect.

```text
~ ❯ ps -ef | grep "Visual Studio Code"
503 92460 1 0 15Jan24 ?? 4:48.20 /Applications/Visual Studio Code.app/Contents/MacOS/Electron
503 92467 1 0 15Jan24 ?? 0:00.32 /Applications/Visual Studio Code.app/Contents/Frameworks/Electron Framework.framework/Helpers/chrome_crashpad_handler --no-rate-limit --monitor-self-annotation=ptype=crashpad-handler --database=/Users/swelsch/Library/Application Support/Code/Crashpad --url=appcenter://code?aid=de75e3cc-e22f-4f42-a03f-1409c21d8af8&uid=908f5b52-dad0-4b0f-b1f7-fee5f14fb27a&iid=908f5b52-dad0-4b0f-b1f7-fee5f14fb27a&sid=908f5b52-dad0-4b0f-b1f7-fee5f14fb27a --annotation=IsOfficialBuild=1 --annotation=_companyName=Microsoft --annotation=_productName=VSCode --annotation=_version=1.81.0 --annotation=exe=Electron --annotation=plat=OS X --annotation=prod=Electron --annotation=ver=22.3.18 --handshake-fd=28
503 92468 92460 0 15Jan24 ?? 51:33.40 /Applications/Visual Studio Code.app/Contents/Frameworks/Code Helper (GPU).app/Contents/MacOS/Code Helper (GPU) --type=gpu-process --user-data-dir=/Users/swelsch/Library/Application Support/Code --gpu-preferences=UAAAAAAAAAAgAAAIAAAAAAAAAAAAAAAAAABgAAAAAAAwAAAAAAAAAAAAAAAQAAAAAAAAAAAAAAAAAAAAAAAAACgFAAAAAAAAKAUAAAAAAAC4AQAANgAAALABAAAAAAAAuAEAAAAAAADAAQAAAAAAAMgBAAAAAAAA0AEAAAAAAADYAQAAAAAAAOABAAAAAAAA6AEAAAAAAADwAQAAAAAAAPgBAAAAAAAAAAIAAAAAAAAIAgAAAAAAABACAAAAAAAAGAIAAAAAAAAgAgAAAAAAACgCAAAAAAAAMAIAAAAAAAA4AgAAAAAAAEACAAAAAAAASAIAAAAAAABQAgAAAAAAAFgCAAAAAAAAYAIAAAAAAABoAgAAAAAAAHACAAAAAAAAeAIAAAAAAACAAgAAAAAAAIgCAAAAAAAAkAIAAAAAAACYAgAAAAAAAKACAAAAAAAAqAIAAAAAAACwAgAAAAAAALgCA
503 92470 92460 0 15Jan24 ?? 0:46.38 /Applications/Visual Studio Code.app/Contents/Frameworks/Code Helper.app/Contents/MacOS/Code Helper --type=utility --utility-sub-type=network.mojom.NetworkService --lang=en-GB --service-sandbox-type=network --user-data-dir=/Users/swelsch/Library/Application Support/Code --standard-schemes=vscode-webview,vscode-file --enable-sandbox --secure-schemes=vscode-webview,vscode-file --bypasscsp-schemes --cors-schemes=vscode-webview,vscode-file --fetch-schemes=vscode-webview,vscode-file --service-worker-schemes=vscode-webview --streaming-schemes --shared-files --field-trial-handle=1718379636,r,9508796899792474748,12606522904689404813,131072 --disable-features=CalculateNativeWinOcclusion
503 92791 92460 0 15Jan24 ?? 1:00.49 /Applications/Visual Studio Code.app/Contents/Frameworks/Code Helper.app/Contents/MacOS/Code Helper --type=utility --utility-sub-type=node.mojom.NodeService --lang=en-GB --service-sandbox-type=none --user-data-dir=/Users/swelsch/Library/Application Support/Code --standard-schemes=vscode-webview,vscode-file --enable-sandbox --secure-schemes=vscode-webview,vscode-file --bypasscsp-schemes --cors-schemes=vscode-webview,vscode-file --fetch-schemes=vscode-webview,vscode-file --service-worker-schemes=vscode-webview --streaming-schemes --shared-files --field-trial-handle=1718379636,r,9508796899792474748,12606522904689404813,131072 --disable-features=CalculateNativeWinOcclusion,SpareRendererForSitePerProcess
503 92815 92460 0 15Jan24 ?? 1:17.09 /Applications/Visual Studio Code.app/Contents/Frameworks/Code Helper.app/Contents/MacOS/Code Helper --type=utility --utility-sub-type=node.mojom.NodeService --lang=en-GB --service-sandbox-type=none --user-data-dir=/Users/swelsch/Library/Application Support/Code --standard-schemes=vscode-webview,vscode-file --enable-sandbox --secure-schemes=vscode-webview,vscode-file --bypasscsp-schemes --cors-schemes=vscode-webview,vscode-file --fetch-schemes=vscode-webview,vscode-file --service-worker-schemes=vscode-webview --streaming-schemes --shared-files --field-trial-handle=1718379636,r,9508796899792474748,12606522904689404813,131072 --disable-features=CalculateNativeWinOcclusion,SpareRendererForSitePerProcess
503 92927 1 0 15Jan24 ?? 0:00.03 /Applications/Visual Studio Code.app/Contents/Frameworks/Squirrel.framework/Resources/ShipIt com.microsoft.VSCode.ShipIt /Users/swelsch/Library/Caches/com.microsoft.VSCode.ShipIt/ShipItState.plist
503 74893 74444 0 1:35PM ttys004 0:00.00 grep --color=auto --exclude-dir=.bzr --exclude-dir=CVS --exclude-dir=.git --exclude-dir=.hg --exclude-dir=.svn --exclude-dir=.idea --exclude-dir=.tox Visual Studio Code
~ ❯ kill -9 92460
```

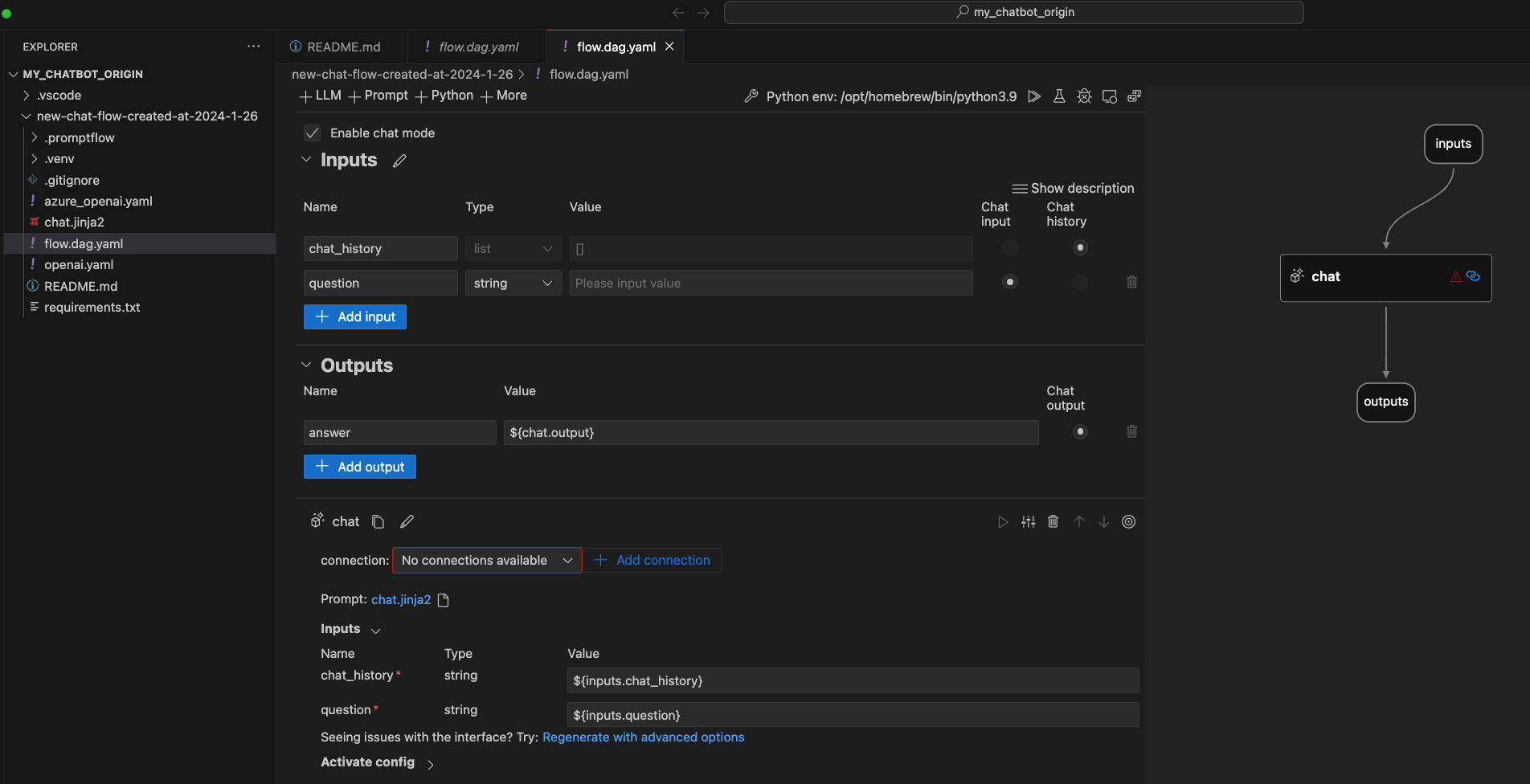

Now I can install the SDK, or rather, I get a page where I can find information on what needs to be done.

I first tried to use a virtual environment. However, this was unsuccessful, so I switched the Python interpreter to /opt/homebrew/bin/python3.9. With that, all further steps worked.

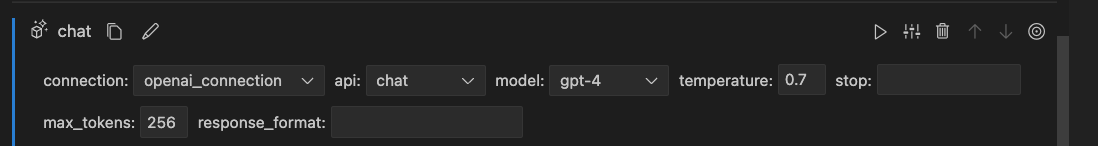

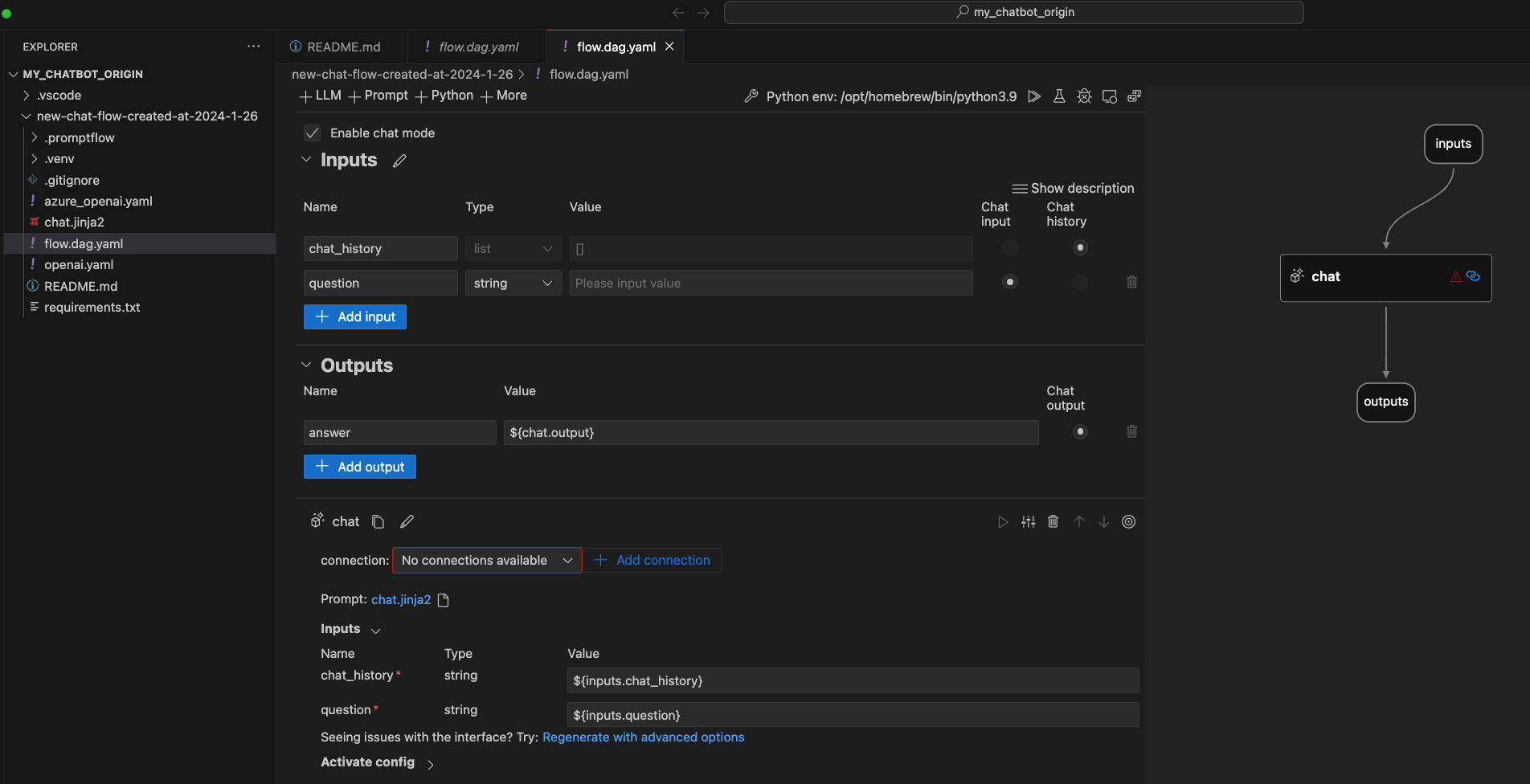

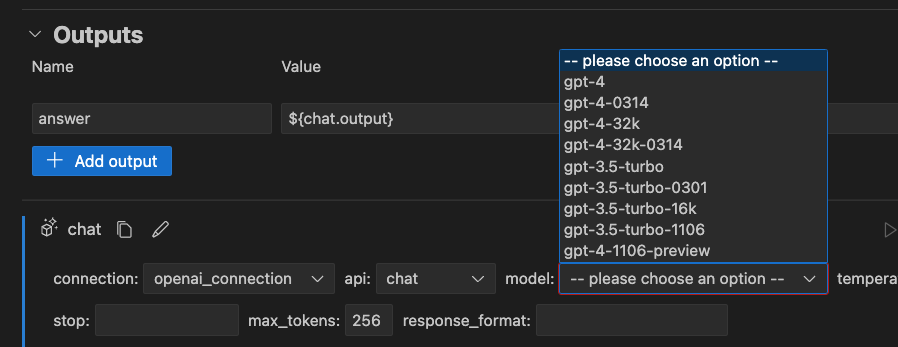

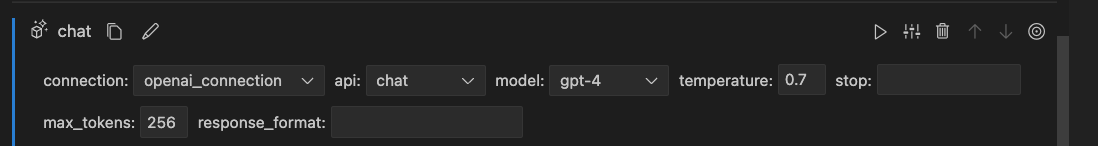

As expected, the Visual Editor opens, and we see our first flow.

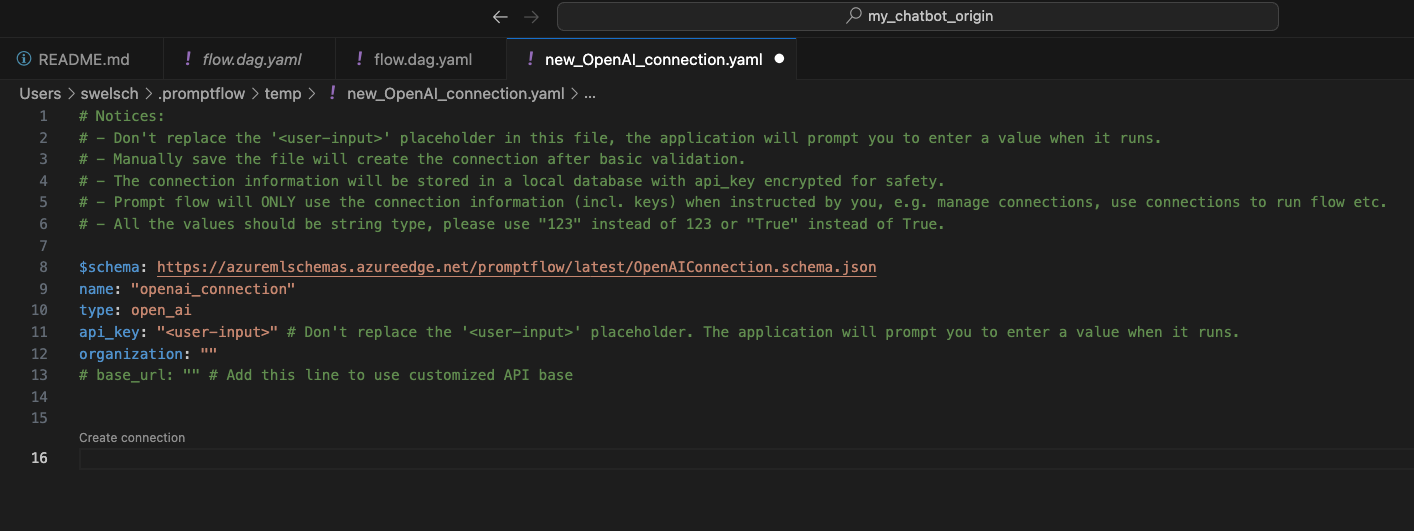

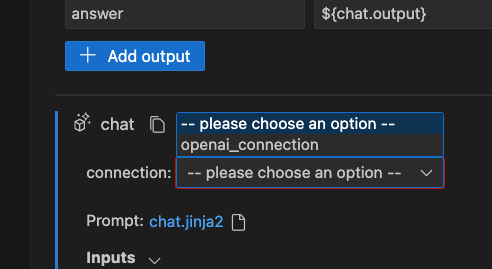

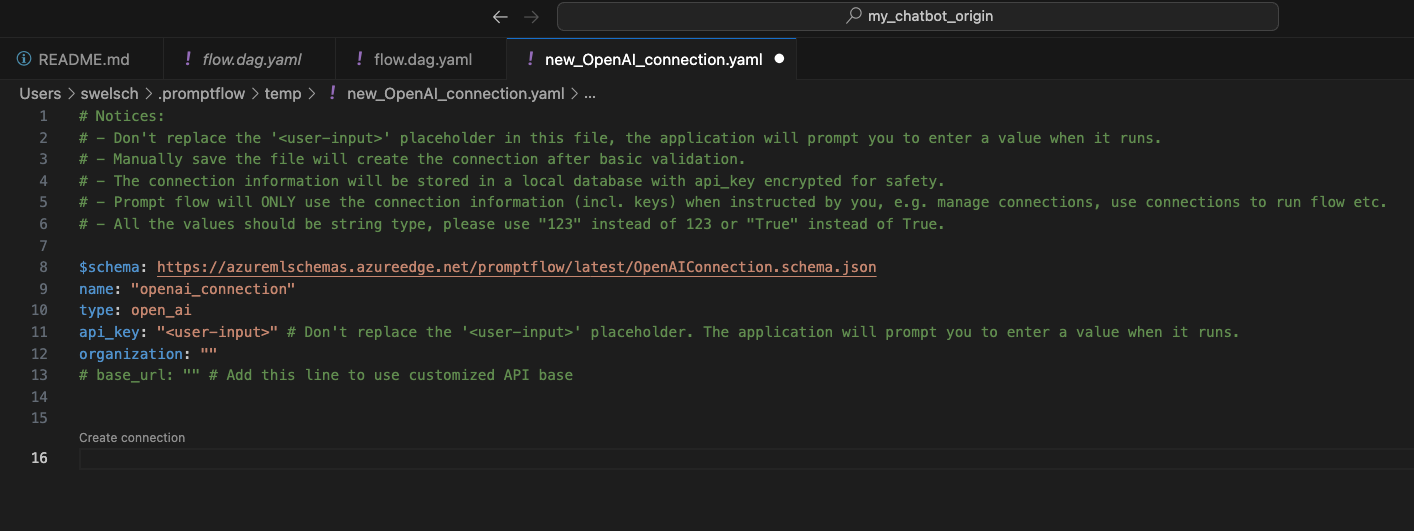

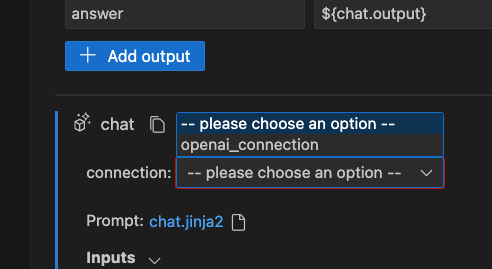

Now let’s create a connection. There are two ways to do this: directly in Visual Studio Code or via the [CLI](https://microsoft.github.io/promptflow/how-to-guides/manage-connections.html). We’ll start with Visual Studio Code. To do this, we click the “Add Connection” button. In the popup, we then choose between OpenAI and Azure OpenAI. I don’t have an Azure OpenAI account, so I choose OpenAI. A YAML file then opens in which we still have to enter the name of the connection.

Below line 15, we now see the “Create Connection” button, which creates the connection. It takes a few seconds, and then the terminal prompts for an API key. You have to create this key directly at OpenAI (openai.com).

Once we have entered the key, our new connection appears in the Visual Editor.

After clicking on the connection, we have to select a model to work with. For cost reasons, I’ll choose gpt-3.5-turbo here, but you can pick whichever model you prefer.

Ok, we have now prepared everything so far. So let’s start the first tests with Promptflow! In our project folder, there is a file called chat.jinja2, where we can specify the prompt we want to test.

```jinja
system:
You are a helpful assistant.

{% for item in chat_history %}
user:
{{item.inputs.question}}
assistant:
{{item.outputs.answer}}
{% endfor %}

user:
{{question}}
```
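To make the template easier to read, here is a rough sketch in plain Python of the text it expands to. This is only an illustration: `render_chat_prompt` is a hypothetical helper written for this post, not part of Prompt flow, which renders the file with Jinja itself. The sketch assumes each history entry has the shape `inputs.question` / `outputs.answer` that the template accesses.

```python
def render_chat_prompt(system_prompt, chat_history, question):
    """Build the same kind of text that chat.jinja2 produces (hypothetical helper)."""
    lines = ["system:", system_prompt, ""]
    for item in chat_history:
        # Mirrors {{item.inputs.question}} and {{item.outputs.answer}} in the template
        lines += [
            "user:", item["inputs"]["question"],
            "assistant:", item["outputs"]["answer"],
            "",
        ]
    # The new question always comes last, as in the template
    lines += ["user:", question]
    return "\n".join(lines)


# One earlier turn plus a new question (made-up sample data)
history = [
    {"inputs": {"question": "Hi"}, "outputs": {"answer": "Hello! How can I help?"}},
]
prompt = render_chat_prompt("You are a helpful assistant.", history, "What is Prompt flow?")
print(prompt)
```

The point to take away: the system text comes first, every earlier turn is replayed as a user/assistant pair, and the current question is appended at the end.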

Let’s make the system prompt a bit more exciting and change it as follows. We want to find a suitable name for an animal that fits well with the owners’ names.

```jinja
system:
Create a list of pet names that match their owners' names.
Focus on puns, rhymes, or thematic connections that creatively link
to the owner's name. Aim to find fitting and original names for various
types of pets.

{% for item in chat_history %}
user:
{{item.inputs.question}}
assistant:
{{item.outputs.answer}}
{% endfor %}

user:
{{question}}
```

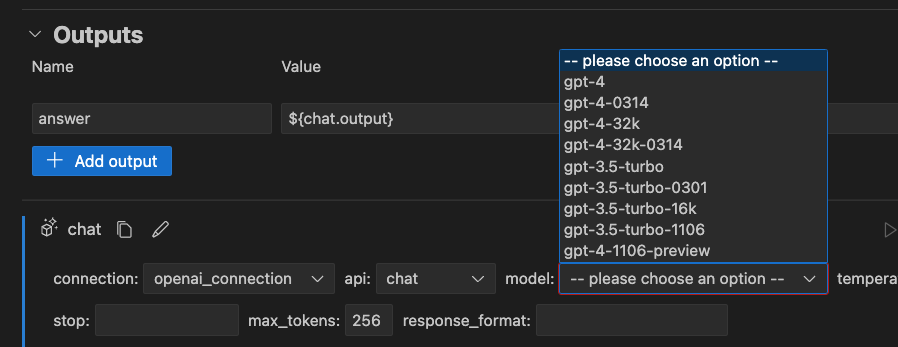

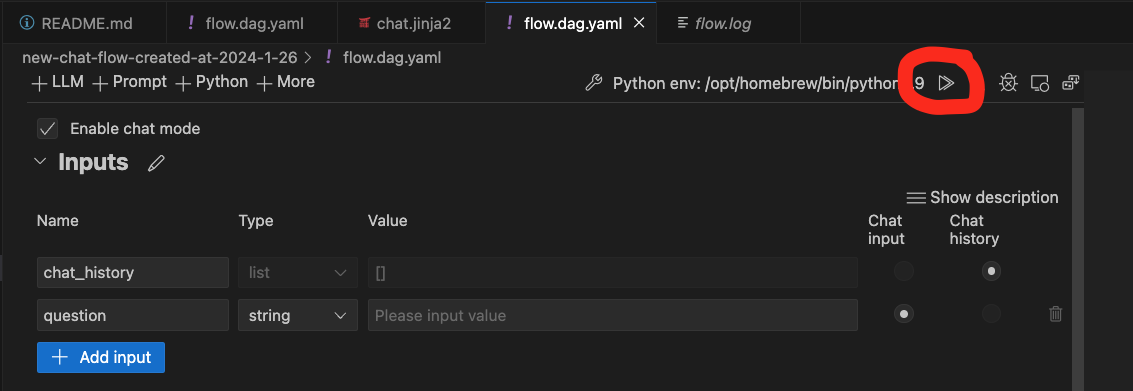

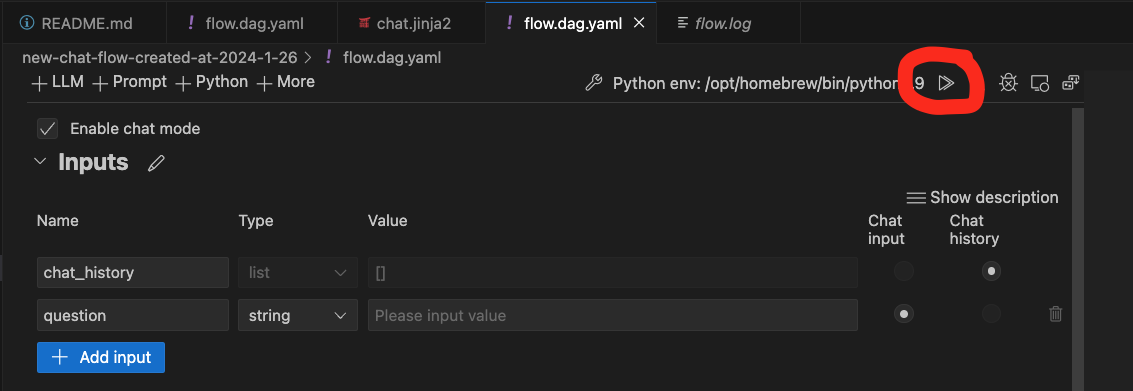

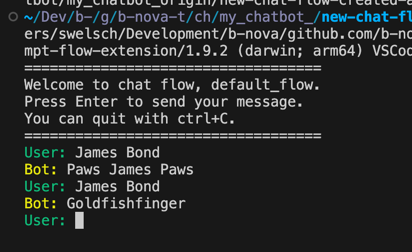

Now we go back to the Visual Editor and click on “Run it with interactive mode (text only)”.

Prompt flow now runs in the terminal and waits for user input. I enter a fictitious name and press Enter.

As we can see, we get quite fitting names for our pets. ;-)

```text
pf flow test --flow . --inputs question='James Bond'
2024-02-06 07:58:59 +0100 34066 execution.flow INFO Start executing nodes in thread pool mode.
2024-02-06 07:58:59 +0100 34066 execution.flow INFO Start to run 2 nodes with concurrency level 16.
2024-02-06 07:58:59 +0100 34066 execution.flow INFO Executing node chat-gpt3.5. node run id: 75e9f4bf-3d1b-49dd-86ac-58f6a3632cb8_chat-gpt3.5_0
2024-02-06 07:58:59 +0100 34066 execution.flow INFO Executing node chat-gpt4. node run id: 75e9f4bf-3d1b-49dd-86ac-58f6a3632cb8_chat-gpt4_0
2024-02-06 07:59:00 +0100 34066 execution.flow INFO Node chat-gpt4 completes.
2024-02-06 07:59:02 +0100 34066 execution.flow INFO Node chat-gpt3.5 completes.
{
    "answer": "Canine Royale"
}
```

Now some of you might think: “Well, great, I can do that directly in ChatGPT too.” True, so now we come to a feature that ChatGPT does not offer out of the box.

Imagine we now have answers that we expect for certain questions. This is often the case when we feed the general LLM with additional data, but it can also be relevant if I want to force answers in a certain way.

For example, if I want to use ChatGPT to build a chatbot around a company, then I expect certain answers to a specific user request, or specific company-internal answers that the LLM does not have direct access to.

These could be the following questions, for example:

Apple:

Q: What is the latest device?

A: iPhone XX

Google:

Q: With which service can I implement this and that?

A: Output of the specific service

b-nova:

Q: What is the most popular beverage among your employees?

A: Water 😅

Or if I want to make sure that answers are limited to certain areas, then I have to formulate my prompt accordingly. For example:

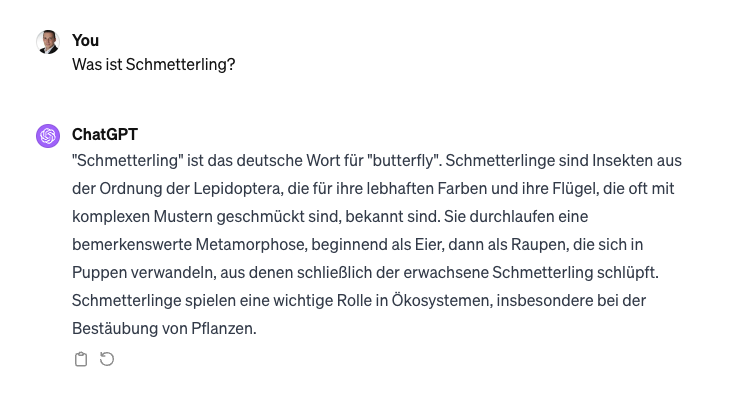

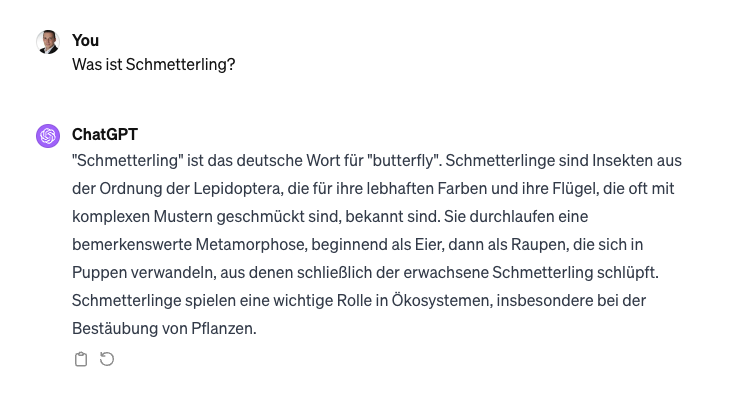

I want to know what exactly butterfly means in the context of swimming. If I now enter the following in ChatGPT: “What is butterfly?”, I get roughly the following answers:

So I change the context and tell the model that answers should be restricted to swimming. Prompt flow makes this easy: as we saw above, we can simply change the system prompt in the chat.jinja2 file to tailor our chatbot’s behavior.

We change our system prompt as follows and ask the same question again:

```jinja
system:
You are a chatbot that focuses exclusively on swimming.

{% for item in chat_history %}
user:
{{item.inputs.question}}
assistant:
{{item.outputs.answer}}
{% endfor %}

user:
{{question}}
```

Now we want to test our new prompt. This time I use the CLI and ask the question in German (“Was ist Schmetterling?”, i.e. “What is butterfly?”):

```text
pf flow test --flow . --inputs question='Was ist Schmetterling?'

"answer": "Der Schmetterling (also known as \"Schmetterlingsschwimmen\"
or \"Butterfly\") is a swimming technique in which both arms are simultaneously
stretched forward over the water in front of the body and then simultaneously
pulled down and back, while the legs simultaneously kick up and down. The
butterfly is a demanding technique that requires a lot of strength and
coordination. It is one of the four official swimming techniques used in
competitions."
```

As we can see, this prompt gives us the desired answer, so Prompt flow is well suited to prompt engineering. As a next step, we might want to know how other models would have answered, or which one gives the more precise answer. So let’s switch the model to gpt-4 in Prompt flow and look at the answer again:

```text
pf flow test --flow . --inputs question='Was ist Schmetterling?'

"answer": "Schmetterling is a swimming technique in swimming,
also known as \"Schmetterlingsschwimmen\". It is one of the four
main techniques and is often considered the most demanding. In
butterfly swimming, both arms move forward simultaneously and then
simultaneously backward, while the legs make a dolphin-like movement.
This technique requires strength, coordination, and endurance."
```

Pretty cool, isn’t it?

Now let’s go one step further. Next, we don’t just want to test a single question, but several at once. PromptFlow also offers a function for this. We can make several queries directly via batch.

To do this, we create a file data.jsonl with the following content:

```json
{"question": "Was ist Schmetterling?"}
{"question": "Was ist Brust"}
{"question": "Was is Rücken?"}
```
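If you would rather generate such a file than type it by hand, a small sketch with Python’s standard `json` module could look like this. The helper names `write_jsonl` and `read_jsonl` are made up for this example, and the file is written to a temporary directory here so the sketch does not overwrite anything in your project.

```python
import json
import tempfile
from pathlib import Path

# The three questions from data.jsonl above, kept exactly as typed
questions = ["Was ist Schmetterling?", "Was ist Brust", "Was is Rücken?"]


def write_jsonl(path, rows):
    """Write one JSON object per line (the JSON Lines layout)."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            # ensure_ascii=False keeps umlauts such as "ü" readable in the file
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Read the file back, skipping empty lines."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


path = Path(tempfile.mkdtemp()) / "data.jsonl"
write_jsonl(path, [{"question": q} for q in questions])

rows = read_jsonl(path)
# Every row needs the column that the flow input will later be mapped to
assert all("question" in row for row in rows)
print(len(rows), "rows written to", path)
```

Reading the file back right away is a cheap way to catch a malformed line before a batch run fails on it.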

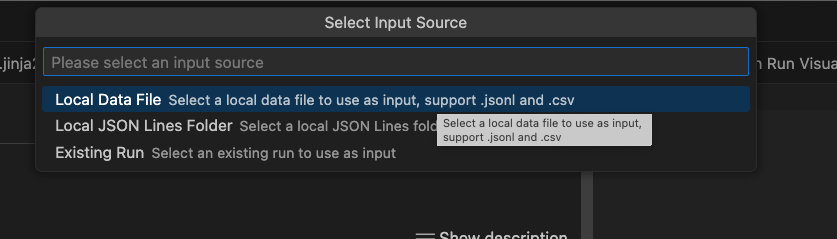

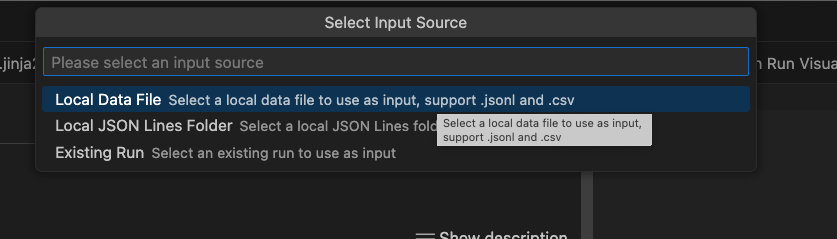

We also want to have these 3 questions evaluated in the context of swimming. Again, there is a button in the UI with which we can start the run (later I will show you the same thing via CLI, so remember this point ;-) ).

After a click, a popup appears asking for the source.

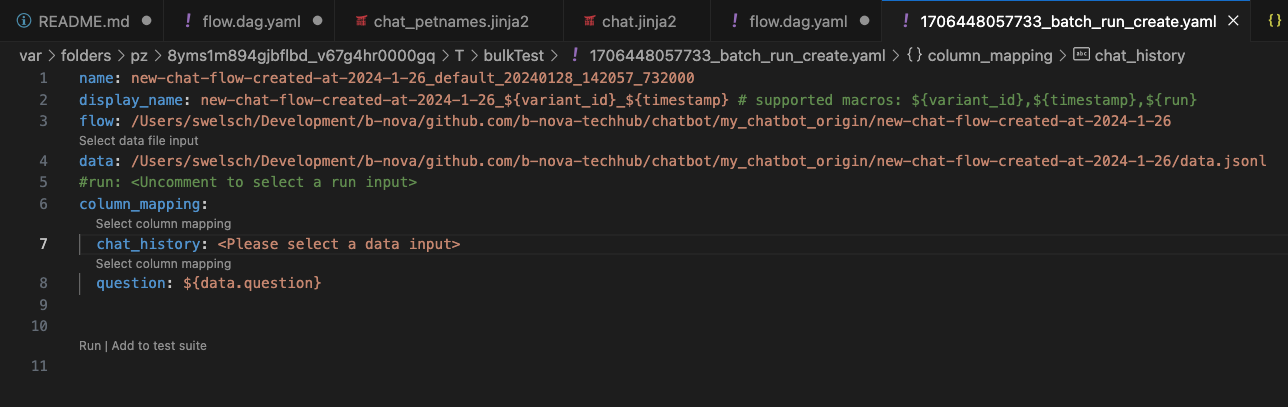

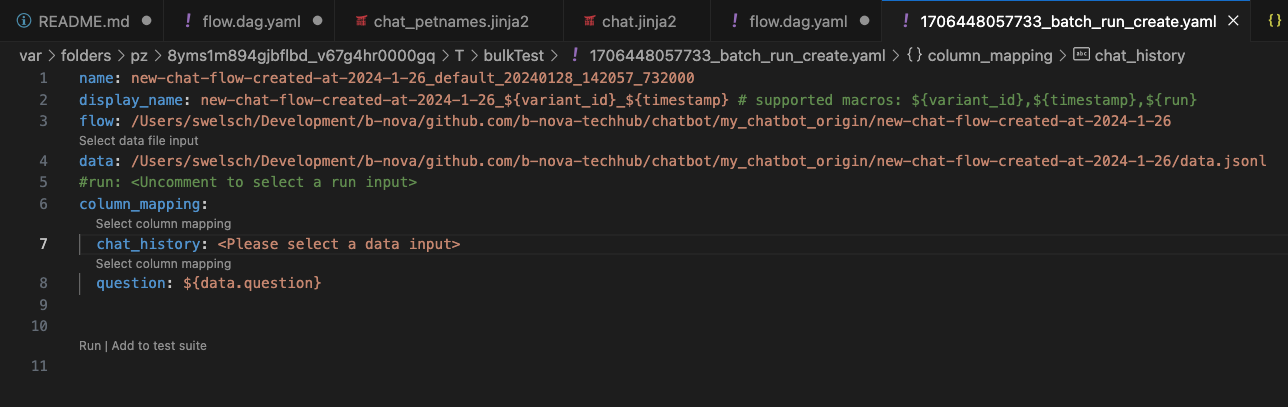

If we click on “Local Data File”, we can select the corresponding data.jsonl file that we just created. Then a batch_run.yaml file is created, in which we still have to specify a column mapping.

As chat_history, we simply specify an empty array ([]) because we don’t want to process the chat history. Once we have completed the configuration, we can click on “Run” in the same window (i.e. under line 10). A log file opens, and the process starts.
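Conceptually, the column mapping ties each flow input either to a column of the data file or to a constant value. The following plain-Python sketch illustrates that idea for a single row. It assumes references of the form `${data.<column>}`, the form used in the CLI call later in this post. `resolve_mapping` is a hypothetical helper for illustration only and says nothing about how Prompt flow implements this internally.

```python
import re

# Matches references such as "${data.question}" and captures the column name
DATA_REF = re.compile(r"\$\{data\.(\w+)\}")


def resolve_mapping(column_mapping, row):
    """Turn a column mapping plus one data row into concrete flow inputs."""
    resolved = {}
    for name, value in column_mapping.items():
        match = DATA_REF.fullmatch(value) if isinstance(value, str) else None
        # A reference is looked up in the row, anything else is used as a constant
        resolved[name] = row[match.group(1)] if match else value
    return resolved


mapping = {"question": "${data.question}", "chat_history": []}
row = {"question": "Was ist Brust"}

inputs = resolve_mapping(mapping, row)
print(inputs)
```

Here `question` is filled from the data row, while `chat_history` stays the empty list for every row.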

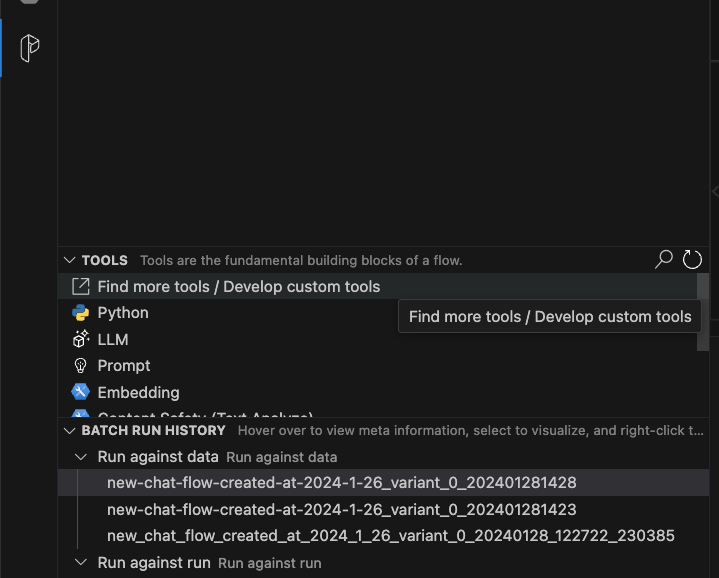

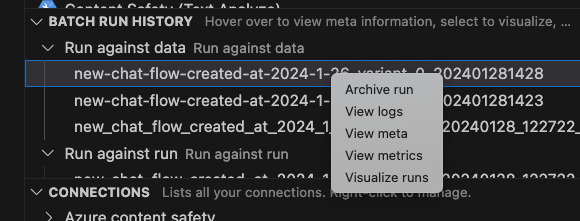

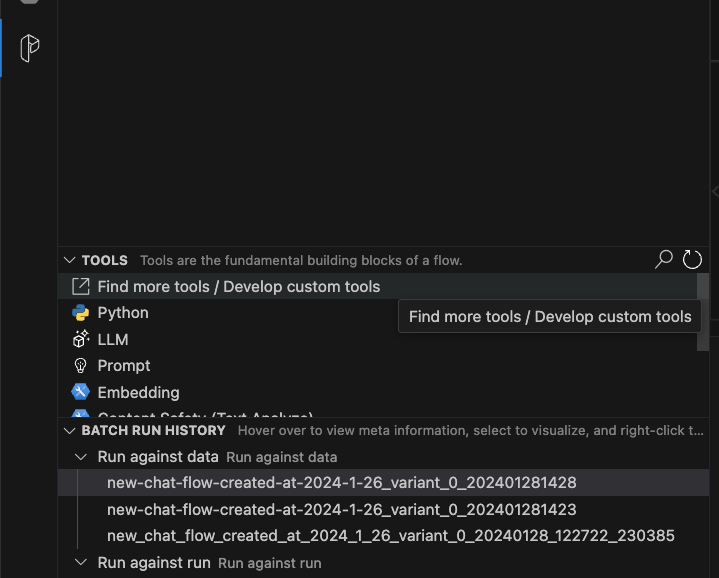

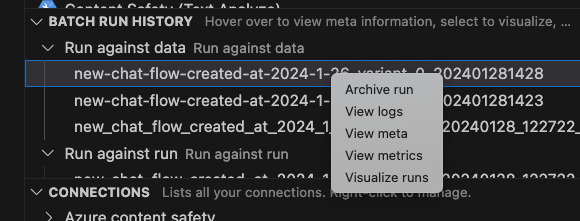

If everything has run through successfully, we see a new entry on the left side under “BATCH RUN HISTORY”

We can right-click the first entry and select “Visualize Data”.

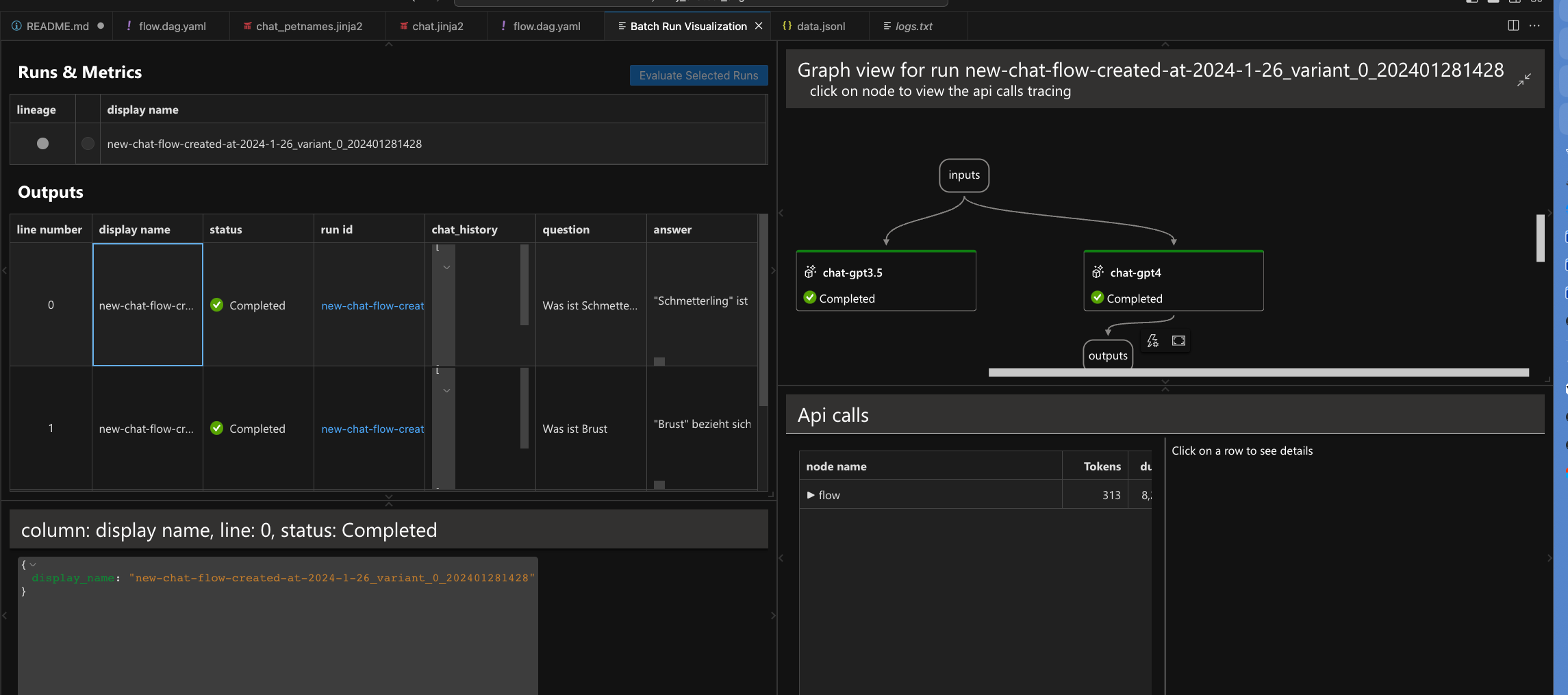

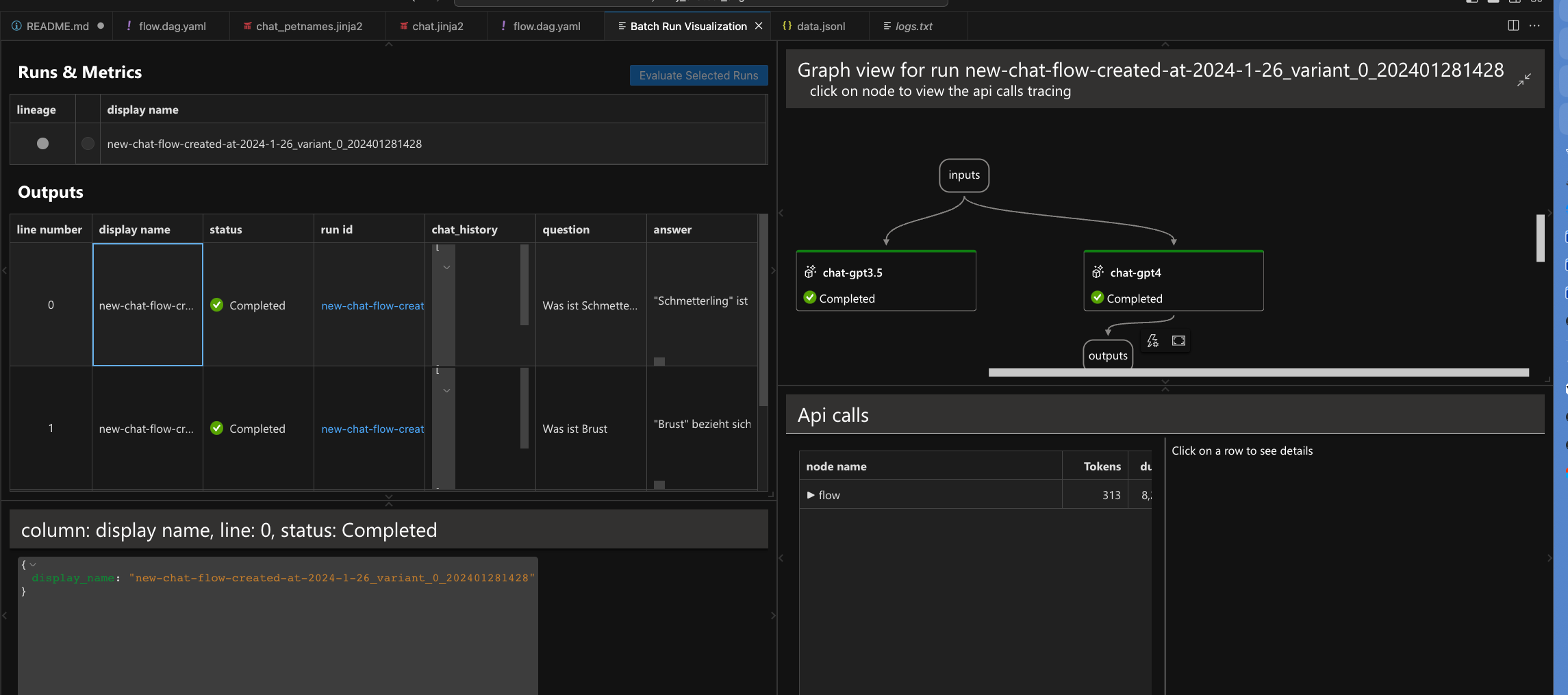

A new tab “Batch Run Visualization” opens with the evaluation.

However, I find the way via the UI quite confusing, so I’ll show you the same thing again via CLI. So back to the point you remembered ;-)

```text
> pf run create --flow . --data ./data.jsonl --column-mapping question='${data.question}' --stream
2024-01-28 14:39:29 +0100 32795 execution.bulk INFO Current system's available memory is 73954.921875MB, memory consumption of current process is 173.546875MB, estimated available worker count is 73954.921875/173.546875 = 426
2024-01-28 14:39:29 +0100 32795 execution.bulk INFO Set process count to 3 by taking the minimum value among the factors of {'default_worker_count': 4, 'row_count': 3, 'estimated_worker_count_based_on_memory_usage': 426}.
2024-01-28 14:39:29 +0100 32795 execution.bulk INFO Process name(SpawnProcess-2)-Process id(32809)-Line number(0) start execution.
2024-01-28 14:39:29 +0100 32795 execution.bulk INFO Process name(SpawnProcess-3)-Process id(32810)-Line number(1) start execution.
2024-01-28 14:39:29 +0100 32795 execution.bulk INFO Process name(SpawnProcess-4)-Process id(32811)-Line number(2) start execution.
2024-01-28 14:39:35 +0100 32795 execution.bulk INFO Process name(SpawnProcess-2)-Process id(32809)-Line number(0) completed.
2024-01-28 14:39:35 +0100 32795 execution.bulk INFO Finished 1 / 3 lines.
2024-01-28 14:39:35 +0100 32795 execution.bulk INFO Average execution time for completed lines: 6.03 seconds. Estimated time for incomplete lines: 12.06 seconds.
2024-01-28 14:39:38 +0100 32795 execution.bulk INFO Process name(SpawnProcess-4)-Process id(32811)-Line number(2) completed.
2024-01-28 14:39:38 +0100 32795 execution.bulk INFO Finished 2 / 3 lines.
2024-01-28 14:39:38 +0100 32795 execution.bulk INFO Average execution time for completed lines: 4.52 seconds. Estimated time for incomplete lines: 4.52 seconds.
2024-01-28 14:39:43 +0100 32795 execution.bulk INFO Process name(SpawnProcess-3)-Process id(32810)-Line number(1) completed.
2024-01-28 14:39:43 +0100 32795 execution.bulk INFO Finished 3 / 3 lines.
2024-01-28 14:39:43 +0100 32795 execution.bulk INFO Average execution time for completed lines: 4.68 seconds. Estimated time for incomplete lines: 0.0 seconds.
======= Run Summary =======

Run name: "new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454"
Run status: "Completed"
Start time: "2024-01-28 14:39:28.839431"
Duration: "0:00:17.079674"
Output path: "/Users/swelsch/.promptflow/.runs/new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454"

{
    "name": "new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454",
    "created_on": "2024-01-28T14:39:28.839431",
    "status": "Completed",
    "display_name": "new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454",
    "description": null,
    "tags": null,
    "properties": {
        "flow_path": "/Users/swelsch/Development/b-nova/github.com/b-nova-techhub/chatbot/my_chatbot_origin/new-chat-flow-created_at_2024-1-26",
        "output_path": "/Users/swelsch/.promptflow/.runs/new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454",
        "system_metrics": {
            "total_tokens": 869,
            "prompt_tokens": 158,
            "completion_tokens": 711,
            "duration": 15.282229
        }
    },
    "flow_name": "new-chat-flow-created-at_2024-1_26",
    "data": "/Users/swelsch/Development/b-nova/github.com/b-nova-techhub/chatbot/my_chatbot_origin/new-chat-flow-created_at_2024-1_26/data.jsonl",
    "output": "/Users/swelsch/.promptflow/.runs/new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454/flow_outputs"
}

> pf run show-details --name new_chat_flow_created_at_2024_1_26_variant_0_20240128_143701_885712
```
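The `system_metrics` block in the run summary is handy for a quick sanity check of token usage. Below is a small sketch that does the arithmetic on the numbers from the run above. The values are copied from that output; the per-question and per-second figures are my own derived numbers, and I am assuming that `duration` is given in seconds.

```python
import json

# Excerpt of the run summary shown above, reduced to the metrics
summary = json.loads("""
{
  "properties": {
    "system_metrics": {
      "total_tokens": 869,
      "prompt_tokens": 158,
      "completion_tokens": 711,
      "duration": 15.282229
    }
  }
}
""")

metrics = summary["properties"]["system_metrics"]
row_count = 3  # data.jsonl contained three questions

# Prompt and completion tokens should add up to the reported total
assert metrics["prompt_tokens"] + metrics["completion_tokens"] == metrics["total_tokens"]

tokens_per_row = metrics["total_tokens"] / row_count
# Assumption: "duration" is measured in seconds
tokens_per_second = metrics["completion_tokens"] / metrics["duration"]

print(f"{tokens_per_row:.1f} tokens per question on average")
print(f"{tokens_per_second:.1f} completion tokens per second")
```

Most of the tokens here are completion tokens (711 of 869), which is what you would expect for short questions with longer explanatory answers.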

Now we can look at the result of the run in detail:

```text
> pf run show-details --name new_chat_flow_created_at_2024_1_26_variant_0_20240128_143928_839454
```

| | inputs.question | inputs.line_number | inputs.chat_history | outputs.answer |
|---|---|---|---|---|
| 0 | Was ist Schmetterling? | 0 | [] | "Schmetterling" is the German word for the swimming style known as "Butterfly" in English. It is one of the four main swimming styles alongside freestyle, backstroke, and breaststroke. It is considered the most physically demanding and technically challenging swimming style. In butterfly swimming, both arms are brought forward over the water simultaneously, and both legs perform a dolphin-like movement at the same time. |
| 1 | Was ist Brust | 1 | [] | "Brust" is a swimming technique also known as breaststroke. It is one of the four main types of competitive swimming, alongside backstroke, butterfly, and freestyle. In breaststroke, the movements of the arms and legs are symmetrical, with the arms extended in front of the body and then brought out to the sides up to the shoulders. The leg movements are similar to the frog style. It is known to be one of the slower swimming techniques, but it is often preferred for its stability and the fact that the swimmer can keep their head above water. |
| 2 | Was is Rücken? | 2 | [] | "Rücken" is the German word for "Back", and in the context of swimming, it refers to backstroke. Backstroke is a swimming technique in which the swimmer swims on their back with their face up. It is one of the four main stroke styles in competitive swimming, alongside freestyle, breaststroke, and butterfly. It is also a good style for beginners as the head is always above water, making it easier to breathe. |

I find the CLI version faster and more intuitive to work with and try out than the Visual Studio Code plugin.

This was a first overview of how to get started with Prompt Engineering using PromptFlow. Of course, it goes much further than what I showed you today in the first Techup on the topic.

For example, you can create multiple nodes to test different configurations and different models for a specific prompt. You can also have an accuracy score calculated to see how closely the actual answer matches the expected one.
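To give a feeling for what such an accuracy check means, here is a deliberately simple sketch in plain Python. It is not how Prompt flow evaluates answers. `similarity` and `accuracy` are hypothetical helpers, the 0.8 threshold is an arbitrary assumption, and the "actual" answers are made up for the example.

```python
from difflib import SequenceMatcher


def similarity(expected, actual):
    """Rough string similarity between 0.0 and 1.0, ignoring case."""
    return SequenceMatcher(None, expected.lower(), actual.lower()).ratio()


def accuracy(pairs, threshold=0.8):
    """Share of answers that are close enough to the expected answer."""
    hits = sum(1 for expected, actual in pairs if similarity(expected, actual) >= threshold)
    return hits / len(pairs)


# (expected answer, actual answer): expected values taken from the examples
# earlier in this post, actual values invented for illustration
pairs = [
    ("Water", "water"),
    ("iPhone XX", "The newest device is the Pixel."),
]

print(f"accuracy: {accuracy(pairs):.2f}")
```

Plain string similarity is a crude measure, since a correct answer phrased differently would count as a miss. It is only meant to show the principle of comparing expected and actual answers across a batch.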

All of these topics are very interesting and will certainly be presented in detail in a future Techup. I will definitely keep an eye on the topic and directly integrate this framework into new projects. 💡

This techup has been translated automatically by Gemini