Last year I presented the idea that WebAssembly can be seen as a technology that, by combining OS-agnostic bytecode with a quasi-ubiquitous runtime, makes containerisation possible at a high level of abstraction. Of course, WebAssembly was originally designed to run in the browser as an extension of the web standards. The idea was that client-side logic no longer has to be executed entirely as JavaScript in an engine such as V8, but that a WebAssembly-capable runtime is available in which application code runs in a strictly isolated sandbox.

This is an interesting development, but the path has been taken before by other pioneers. Not least the Java Virtual Machine, better known by its acronym JVM, was an attempt to translate program code into an OS-independent substrate, the bytecode, and to run it in an encapsulated, isolated runtime. Despite the massive success of Java as a language over decades, the JVM never established itself as a standard sandbox in which arbitrary code can be run. It was Docker, at the latest, that rediscovered the idea of a clean sandbox and gave sandboxing new momentum. Today, the word containerisation is on everyone’s lips in the tech world.

Now we are getting a little ahead of ourselves; if we take this containerisation idea further, we can well imagine a future in which the focus of containerisation with Docker could move more in the direction of WebAssembly.

The idea of abstracting away everything outside the actual application logic, so that developers can focus entirely on development, true to the Developer-Experience aspect, is not new. With serverless platforms, there is already an approach that expands FaaS in such a way that developers have as little contact as possible with the infrastructure and the CI/CD process. Worth mentioning here are Serverless, Serverless-Stack and Architect. The innovative thing about implementing a serverless architecture with WebAssembly runtimes is that the logic modules are compiled to an open standard and run in a standardised sandbox. While AWS’s Firecracker MicroVM certainly provides a robust and efficient foundation for AWS Lambda and AWS Fargate (it’s written in Rust, after all), a WebAssembly module can run in a wide variety of environments. This portability means that the workload can also be deployed on devices at the edge (or anywhere else).

Before we get into the actual introduction to the art of WebAssembly in the cloud using wasmCloud, it helps to have some prior knowledge of the technologies involved. Fortunately, with a little time, you can read up on all of them in our TechUps. The following TechUps tie in with today’s topic:

- Getting Started with WebAssembly

- Containerisation with WebAssembly

- Getting Started with Rust

- Workload Orchestration with HashiCorp Nomad

- Getting started and understanding about Elixir and OTP

Cloud-to-edge WebAssembly-enabled host runtime with wasmCloud.

If we remember correctly, in the Containerless with WebAssembly Runtimes-TechUp, I described how to use a WebAssembly runtime like Wasmer that can run WebAssembly modules on all sorts of computing devices.

Liam Randall and Stuart Harris gave an insightful interview in November 2021, in which the two founders of Cosmonic succinctly summarise wasmCloud and also give a glimpse into the future. There they contextualise wasmCloud as the next level of abstraction in a decades-long trend away from the machine towards the application domain (called “business logic” in the reference below).

“Wasmcloud is a higher level abstraction for the cloud that uses WebAssembly to run everywhere securely. Virtual machines abstract away from specific hardware, containers abstract away from a Linux environment, and wasmCloud abstracts away from the specific capabilities you use to build an application. wasmCloud continues the trend of increasing abstractions for building and deploying software in the cloud. Kubernetes lets an organization run their containers in any cloud environment, and wasmCloud lets a developer run their business logic on any capabilities, in any cloud or edge environment.” - Liam Randall and Stuart Harris, Source: jaxenter.com (04/04/2022)

If you have already come into contact with application containerisation and sandboxing, you know that the containerisation landscape is very broad and that containerisation can no longer be equated with Docker. In a nutshell, a classic container runtime like containerd or CRI-O abstracts the operating system kernel. This is why it is also called OS-level virtualisation: unlike hardware virtualisation such as Xen or VMware vSphere, it does not run an entire guest operating system on top of the host, but only isolates the user space (which lies above the kernel space).

Similar to the Java Virtual Machine, a WASM runtime provides a runtime environment that only executes the application code and addresses other components of the operating system via the Virtual Machine/Runtime if necessary. Thus everything is encapsulated in an isolated sandbox. Virtualisation is always about isolating a domain in a sandbox that is clearly defined in advance. In the meantime, there is also a trend back to OS-related containerisation, such as Firecracker, Kata or gVisor (these can be roughly grouped under micro-VMs).

WasmCloud goes one step further than a JVM in that all connections to the runtime environment are abstracted by means of so-called capability providers. In this way, I/O requirements are programmed once against standardised interfaces and addressed by the WASM module once it is rolled out and running. This reduces the side effects of a given WASM module and also makes it easier to test.
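To make this separation more tangible, here is a minimal Python sketch of the idea. It is not wasmCloud's API: the names KeyValue, InMemoryKeyValue and count_visit are hypothetical and only illustrate how business logic can depend on an interface while the backing technology is swapped in from outside.

```python
from typing import Dict, Protocol


class KeyValue(Protocol):
    """Hypothetical interface (contract) that a capability provider fulfils."""

    def get(self, key: str) -> int: ...

    def set(self, key: str, value: int) -> None: ...


class InMemoryKeyValue:
    """Stand-in provider for tests; a real one might be backed by Redis."""

    def __init__(self) -> None:
        self._data: Dict[str, int] = {}

    def get(self, key: str) -> int:
        # Unknown keys count as zero so the first visit yields 1.
        return self._data.get(key, 0)

    def set(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(store: KeyValue, key: str) -> int:
    """Business logic ("actor"): knows the interface, not the technology behind it."""
    visits = store.get(key) + 1
    store.set(key, visits)
    return visits


store = InMemoryKeyValue()
print(count_visit(store, "home"))
print(count_visit(store, "home"))
```

Because count_visit only sees the interface, the same logic can be exercised against an in-memory fake in a test and against a real key-value store in production.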

WasmCloud is first and foremost a host runtime

Perhaps host runtime is already too specific a term. You can understand wasmCloud more succinctly as a Distributed Application Framework for Microservices.

WasmCloud comes with a certain tech stack and incorporates certain “best-of-breeds” (the best tool in each case) in order to pack its intended objective into a technically functional and stable product. In the interview mentioned above, the advantages of the technologies used are also described.

“The entire wasmCloud team and project is built with best-of-breed open source projects, including Nats, Elixir/OTP and the Smithy project from AWS. Nats, from Synadia, has emerged as the internet’s dialtone - and in wasmCloud we use this to seamlessly connect wasmCloud hosts at the application layer. Elixir/OTP has over the last 20 years become the default way to scale to millions of processes and we adopted it on our backend in order to offer that incredible approach to our community. And while Smithy is a new project, it has been used successfully within AWS to model and design hundreds of APIs.” - Liam Randall and Stuart Harris, Source: jaxenter.com (04/04/2022)

The best-of-breed software technologies that wasmCloud uses include the following products:

- WASM and its own WASM runtime as a container and container runtime.

- Elixir/OTP as a kind of WASM module orchestration mechanism. The OTP-based orchestration is to be understood as a host runtime and forms the core of the wasmCloud project. There was a custom-written Rust-based host environment in the original version, but this has been replaced entirely by OTP.

- NATS as API and Service Mesh between the individual application domains. WasmCloud uses a specially designed lattice to achieve a flat topology of the service mesh.

- Smithy as a declarative API for the WASM-based services.

WasmCloud uses a specific nomenclature to designate all subcomponents in a wasmCloud host-based cluster. These subcomponents are therefore subject to a certain definition, which we will briefly look at here:

- Host runtime: That which is rolled out on the OTP nodes. Originally written in Rust, now in Elixir/OTP.

- Capability Provider: Cross-domain functionality that a given actor can invoke.

- Interfaces: The functionalities and operations that a capability provider supports are defined in an interface. Smithy is used for this purpose.

- Actor: Instance of a WebAssembly module that contains the business logic. Actors are scaled horizontally via replication. WasmCloud actors are also OTP actors at the same time, since OTP perceives the instantiation of the WebAssembly module as a process and is therefore already “scheduled” accordingly.

- Application: A large number of actors together form an application. See https://github.com/wasmcloud/examples/tree/main/petclinic.

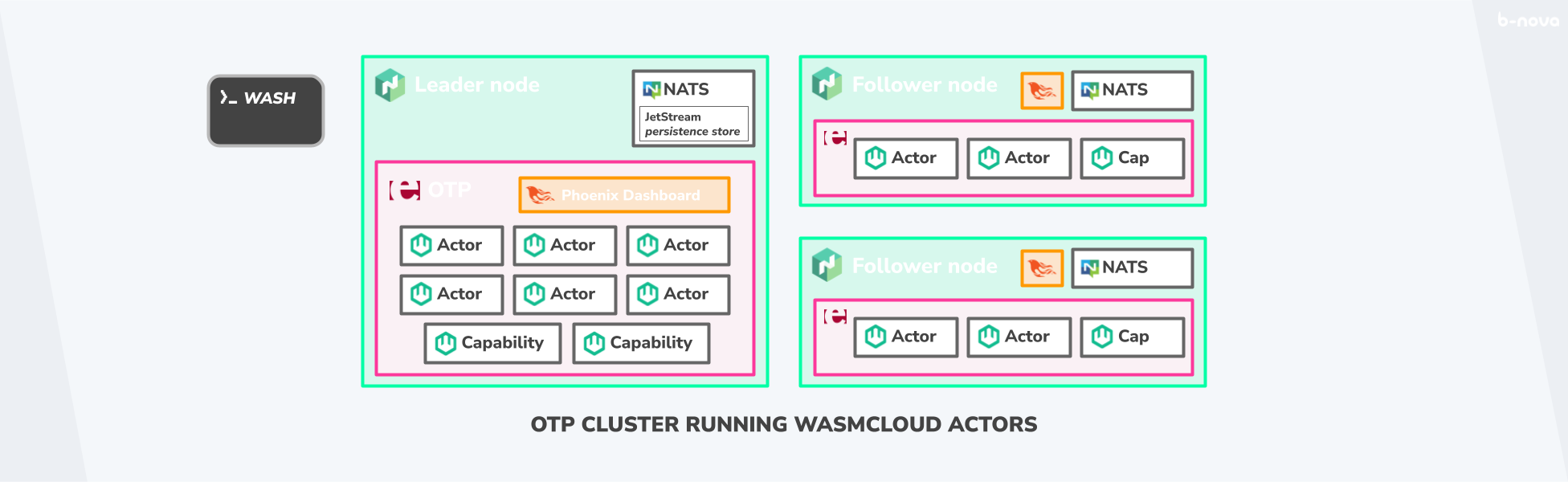

The overall architecture of a classic cluster instantiation could look like the one shown below. Since the OTP host only really comes into its own on multiple machines and can only be called highly available in a distributed architecture, there are official HashiCorp Nomad playbooks that can be used to roll out wasmCloud OTP hosts quickly and easily.

In addition to the actual business logic units, the Actors, there are also so-called Capability Providers, which provide basic functionalities across applications. To get an idea of what such a capability provider could typically provide as cross-application functionality, I have compiled a small list of all officially maintained providers:

- httpserver: HTTP web server built with Rust and warp/hyper.

- httpclient: HTTP client built in Rust.

- redis: Redis-backed key-value implementation.

- nats: NATS-based message broker.

- lattice-controller: Lattice Controller interface.

- postgres: Postgres-based SQL database capability provider.

- numbergen (built-in): Number generator, including random numbers and GUID strings.

- logging (built-in): Basic level categorised text logging capability.

So much for the theory. Now let’s jump into the practical part and take a look at a wasmCloud cluster and how to get one running on your local machine. 🤠

Hands-On with a local host runtime

Apart from a few minor details, the upcoming hands-on has the same objective and the same flow as the official Getting Started guide: setting up a wasmCloud-based host environment.

Since wasmCloud is still in a very early development phase (one could even speak of pre-alpha), the content of the Getting Started guide may well have changed, or at least been slightly adapted, by the time you read it. For this reason, I provide all the necessary manifests, commands and snippets here, so that you can still go through the guide in its original version. The Docker images used are versioned, so the scenario can still be recreated 1:1 later.

As already briefly mentioned, the starting point is that we will set up a host environment together on your local machine, on which we will then roll out and call a small function (a wasmCloud Actor).

Preparations

To set up the host environment, we do not use Nomad to provision target machines, since we only have one machine available anyway, namely your local machine. Instead, we run the subcomponents as Docker containers. On top of that, we use wasmCloud’s own CLI, wash, the wasmCloud shell.

As always, with such technical step-by-step guides, it is an advantage to have some UNIX and shell basics. It makes understanding the individual steps much easier, although little “magic” should be used in this hands-on. In summary, the following 3 points should be taken as preparation:

- Docker

- Wash

- UNIX and shell knowledge

Installing wash is very easy on GNU/Linux and macOS. On macOS you can add wasmCloud’s third-party repository wasmcloud/wasmcloud to the package manager brew as usual via brew tap wasmcloud/wasmcloud. Then we install wash with brew install wash.


If you use GNU/Linux, a BSD variant or Windows, you can simply install the CLI via the Rust environment and its package manager Cargo using cargo install wash-cli.


Before we get started, let me say a quick word about WASM, container registries and OCI. OCI is an acronym for the Open Container Initiative, which maintains open container standards. These standards make it possible to package artifacts other than classic Linux container images and store them in a container registry. OCI thus enables the containerisation of WASM modules.

In order to package finished WASM modules as images and then upload them to a container registry of choice, there is a software solution called wasm-to-oci, which implements exactly this standard.

The WASM images provided by wasmCloud and used here in the hands-on are hosted on the Azure Container Registry. So, now we are ready for our setup. 😁

Setup with Docker Compose

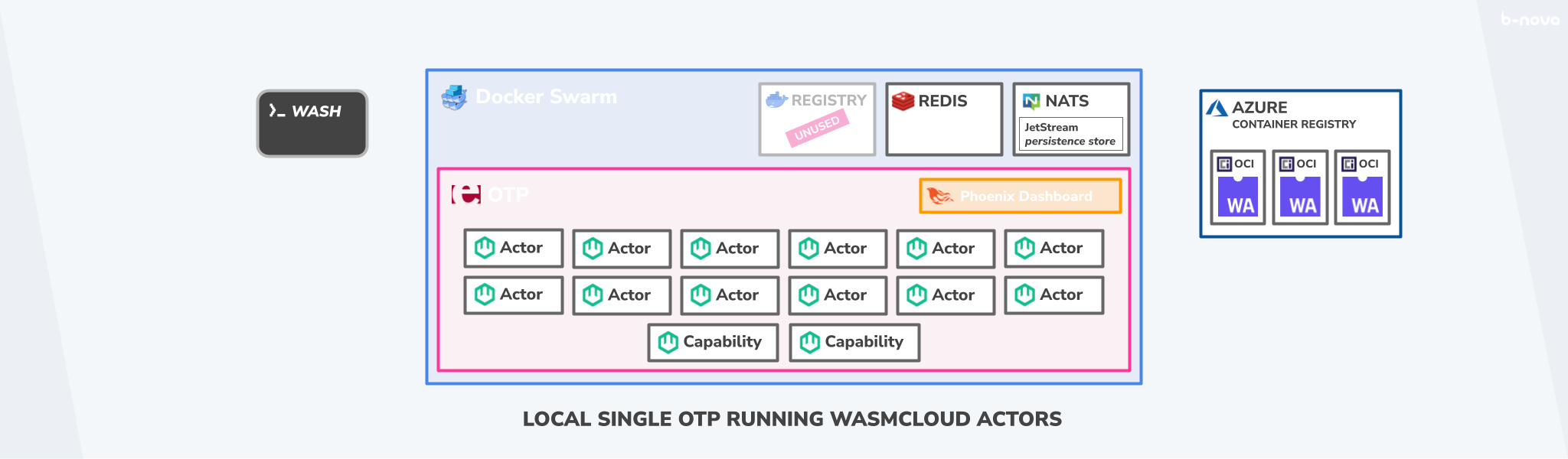

The plan looks like this. There is only one node, namely the local computer. On it, we will start three Docker containers with docker compose, which are necessary as subcomponents of the overall platform.

These would be the following three components:

- Redis as KeyValue store

- NATS as asynchronous streaming API

- And last but not least the OTP-based wasmCloud host

For this hands-on, we won’t write our own Actors or Capability Providers, but will pull WASM images from the Azure Container Registry, where the crew behind wasmCloud has already stored sample modules. The wasmCloud host also provides us with a Phoenix-based dashboard in which we can inspect and manage the host. In addition, just as Kubernetes has kubectl and OpenShift has oc, wasmCloud has wash, which lets us query and provision the state via CLI.

First things first, let’s jump right in and put this desired target state into action. In the following you will see a (relatively simple) docker-compose.yml file as you know it well.

In it we find our 3 subcomponents entered as services.

- nats:2.3

- redis:6.2

- wasmcloud:latest


So far, so good. Now we use docker compose up to start the cluster.


You can also start the Docker Compose setup above with -d as a daemon, that is, as a background process. If you still want to look at the logs, you can use docker compose logs -f plus the service name to follow the desired logs.


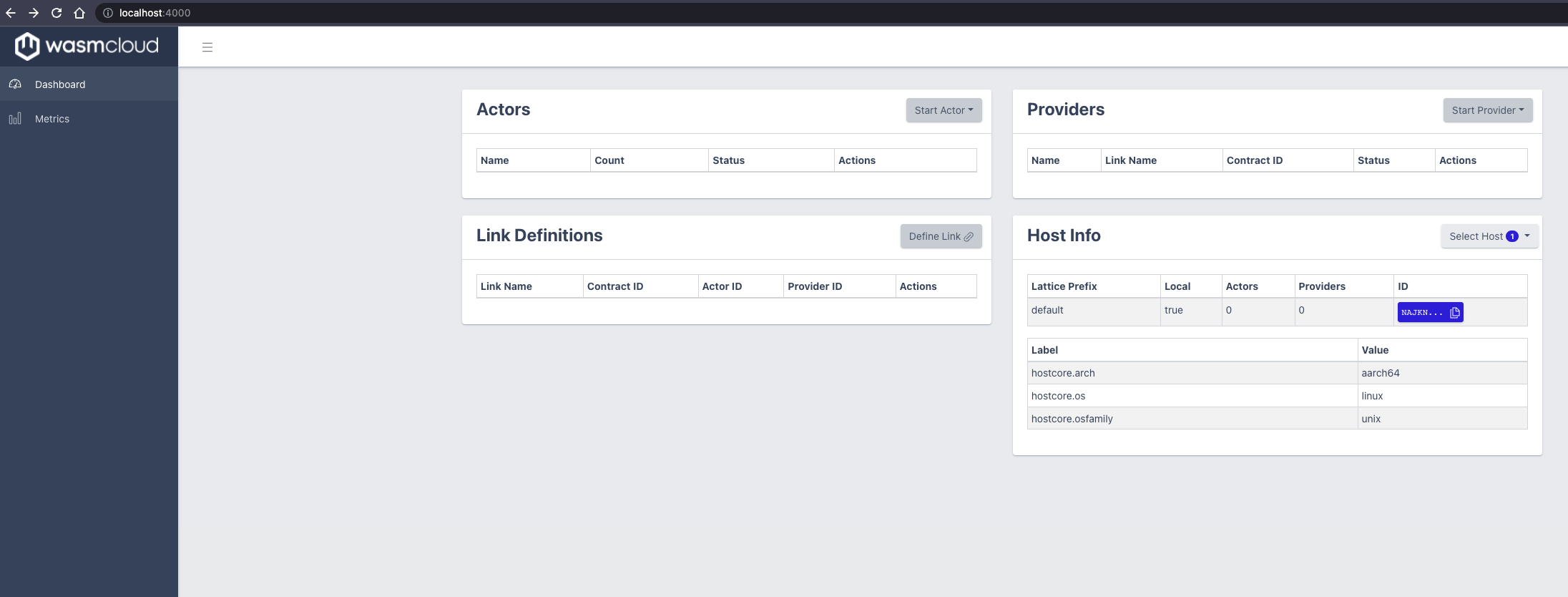

As soon as the 3 services are running, you can open the wasmCloud dashboard at localhost:4000 directly in the browser as a first test and also as a first entry point.

As you can see, the dashboard is still very rudimentary and offers little click area for meaningful interaction. But yes, this is forgiven because of the early project status.

Important for operating the CLI is the so-called host ID. We must have it at hand in order to display or change resources of the target host. Fortunately, this host ID is easy to find out with wash ctl get hosts.


In our case it is the value NAJKNTL43I5EQ7JU22HJ7EVPTCXF3CDMGG7UPSE7D7IJEMS26QUWOMKX, but this will certainly be different for you. Copy it to your clipboard. When querying the inventory via wash ctl get inventory, pass the host ID as the last parameter. And lo and behold, the host inventory is there. wasmCloud reports my macOS machine as linux (see the line with hostcore.os), because the host runs inside a Linux container, and there are no actors or providers (yet).


Of course, we would like to remedy the missing actors and providers, as this is precisely what introduces the business logic that is of interest to us onto our new platform.

Both actor and capability provider resources can be managed either via the web UI or via the CLI with wash. We will use the web interface here. For the very brave, I will also give you some wash commands. 🤓

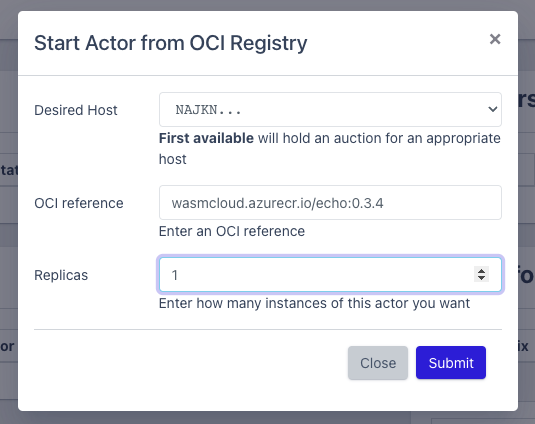

So, let’s create a new actor resource. There are a lot of sample actors in wasmCloud’s own GitHub repository at /examples/actors. For example, there is a Todo actor or a Keyvalue-counter actor, but we will start with a simple Echo actor. Fortunately, we don’t have to check out the repo and compile the Rust project with WASM as the target, but can reference a pre-built WASM image from the Azure Container Registry. The reference to it is wasmcloud.azurecr.io/echo:0.3.4. Enter this value in the “Start Actor from OCI Registry” window under OCI reference.
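If you are wondering how such an OCI reference is put together, the following Python sketch splits it into registry, repository and tag. It is a simplified illustration under stated assumptions: the helper parse_oci_reference and the OciReference type are hypothetical names, digests (the @sha256:... form) are not handled, and a missing tag is simply reported as None.

```python
from typing import NamedTuple, Optional


class OciReference(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]


def parse_oci_reference(reference: str) -> OciReference:
    """Split 'registry/repository[:tag]' into its parts (simplified, no digests)."""
    # Everything before the first slash is treated as the registry host.
    registry, _, remainder = reference.partition("/")
    if not remainder:
        raise ValueError(f"expected 'registry/repository[:tag]', got {reference!r}")
    # The tag, if present, follows the last colon of the remainder.
    repository, sep, tag = remainder.rpartition(":")
    if not sep:
        return OciReference(registry, remainder, None)
    return OciReference(registry, repository, tag)


print(parse_oci_reference("wasmcloud.azurecr.io/echo:0.3.4"))
```

For the reference used here, the registry is wasmcloud.azurecr.io, the repository is echo and the tag is 0.3.4.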

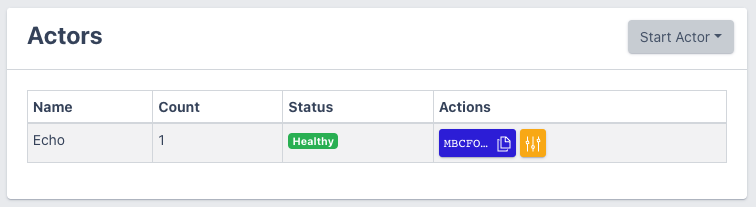

Aha. Something has happened! In the dashboard we now see the actor with the name “Echo”. Just as with Kubernetes or other container orchestration solutions, we can change the number of instances, also called replicas, here. Very nice. This should already look very familiar to those with an affinity for Kubernetes.

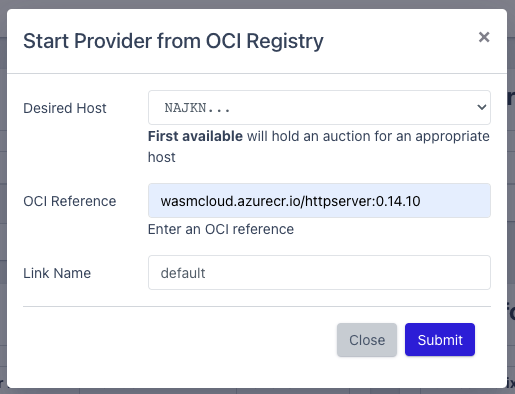

But this is not the end of the story. Although capability providers rarely have to be created on a production wasmCloud host, we still have to do it here initially. Just like with the actor before, we specify a reference to our desired provider. The reference we use here, wasmcloud.azurecr.io/httpserver:0.14.10, gives us a provider with HTTP server functionality. Just leave “Link Name” at its default.

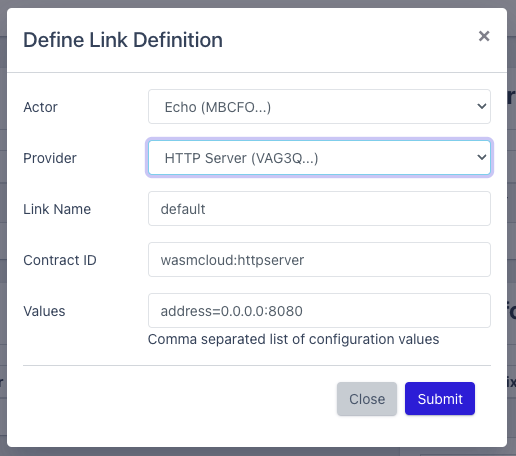

Now our wasmCloud host can perform HTTP server functionality. Now we still need to tell our echo actor that there is such a functionality provider. This announcement is called a Link Definition and can be created in the Dashboard just like the Actor or the Capability Provider. The fields should look like this:

- Actor: Echo (#ID)

- Provider: HTTP Server (#ID)

- Link Name: default

- Contract ID: wasmcloud:httpserver

- Value: address=0.0.0.0:8080

In case you are not sure, here is another screenshot of the “Define Link Definition” window.

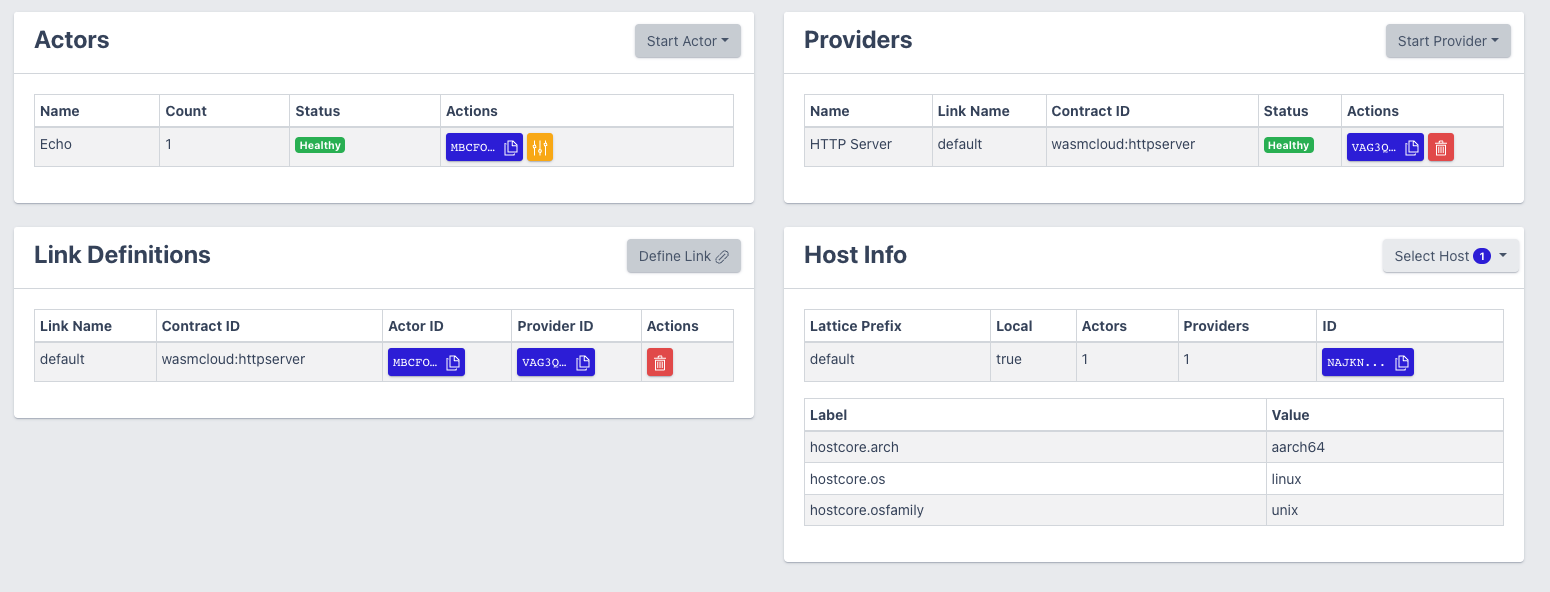

The final dashboard should look like this. There is an Actor “Echo”, there is a Provider “HTTP Server” and a Link Definition “default” which links the Actor to the Provider.
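The relationship between these three resources can be sketched as a small data model. This is an illustration only, not wasmCloud's own types: LinkDefinition and parse_values are hypothetical names, and the comma as separator between several key=value pairs is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class LinkDefinition:
    """Hypothetical model of the dashboard form, not wasmCloud's own type."""

    actor: str
    provider: str
    contract_id: str
    link_name: str = "default"
    values: Dict[str, str] = field(default_factory=dict)


def parse_values(raw: str) -> Dict[str, str]:
    """Parse 'key=value' pairs; the comma separator is an assumption of this sketch."""
    values: Dict[str, str] = {}
    for item in raw.split(","):
        if not item.strip():
            continue
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError(f"expected key=value, got {item!r}")
        values[key.strip()] = value.strip()
    return values


# The link from the hands-on: Echo actor <-> HTTP Server provider.
link = LinkDefinition(
    actor="Echo",
    provider="HTTP Server",
    contract_id="wasmcloud:httpserver",
    values=parse_values("address=0.0.0.0:8080"),
)
print(link)
```

The contract ID names the interface both sides agree on, while the values carry the provider-specific configuration, here the address the HTTP server listens on.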

If everything is configured correctly, we can now send an HTTP request to our cluster. The context path here is /echo. We simply do this in the terminal with curl, for example curl localhost:8080/echo, and the actor echoes the request back in its response.
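If you prefer a script over curl, the same request can be sketched in Python with the standard library. The function names build_echo_request and call_echo are hypothetical, and the base URL assumes the address=0.0.0.0:8080 value from the link definition, reached via localhost.

```python
import urllib.request


def build_echo_request(base_url: str = "http://localhost:8080") -> urllib.request.Request:
    """Build a GET request for the /echo path served by the HTTP server provider."""
    return urllib.request.Request(base_url.rstrip("/") + "/echo", method="GET")


def call_echo(base_url: str = "http://localhost:8080", timeout: float = 5.0) -> str:
    """Send the request and return the raw response body as text."""
    request = build_echo_request(base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the cluster from this hands-on running, print(call_echo()) should show whatever the Echo actor sends back. Without a running host, the call fails with a connection error.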


Congratulations! 🎉

You have arrived in the future. At least a possible future timeline where WebAssembly modules have replaced the classic container in container orchestration.

Prospects

The whole thing is really still very early alpha. So we remain curious to see how wasmCloud develops further! However, there is already a platform that builds on wasmCloud. This is called vino.

Further links and resources

CNCF Welcomes WebAssembly-Based wasmCloud as a Sandbox Project | The New Stack

Brian Sletten (2021) WebAssembly: The Definitive Guide - Safe, Fast, and Portable Code | O’Reilly