The topic of AI is still relevant, and new projects worth taking a look at are constantly emerging. This time we are looking at the project DataLine by Rami Awar. This is an open-source tool that allows any user to analyze and visualize their data. Thanks to the use of LLMs (Language Models), this is possible in natural language, making it useful not only for developers. This allows various data sources to be analyzed easily and without in-depth technical knowledge. Different databases, but also Excel or CSV files can be connected as data sources.

DataLine attaches particular importance to data protection. It is ensured that all data is stored exclusively locally by the user. This makes this tool particularly suitable for companies that do not want to store their data in the cloud.

Use Cases

The ability to connect different data sources and the integration of LLMs to provide a variety of functionalities result in various use cases.

Data Analysis for Non-Technical Users

For example, if you have a database with ASDF data and want to analyze it in more detail, it is usually necessary to write SQL queries. However, there are often people from specialist departments who have only basic or no SQL knowledge. With DataLine, these users can still easily create queries without the need for technical knowledge. A typical query might ask for the average value of a particular dataset. DataLine automatically generates an SQL query and displays the desired data.

Data Analysis for Tech Users

The functionality described above is also extremely helpful for technical users. Complex queries can often be described faster in natural language than they can be formulated in SQL. It can also be helpful to get alternative approaches or new ideas for queries that you may not have considered yet.

Visualization

By simply querying data, it can be prepared in DataLine so that it can be displayed visually. Instead of just displaying boring tables, various diagrams can be generated to present the data in a clear way. Based on the entered queries, DataLine automatically suggests the best forms of visualization. It recognizes whether bar charts, line charts or scatter charts best fit the data and presents the data accordingly, which is particularly helpful for non-technical users. It is then also possible to export these diagrams.

Explanation

If you have different data sources and don’t know exactly what data they contain, DataLine can help you understand the structure and content of the data sources.

Security

Dataline advertises above all with the point of data protection and security. This makes it a solution for companies or users who work with sensitive data and do not want to store it in the cloud.

This is possible because all chats with Dataline are only stored locally on your PC using SQLite. Dataline itself also has no servers, and the homepage is simply made available using static files. Since all processing takes place locally on your own computer, no data has to be transferred.

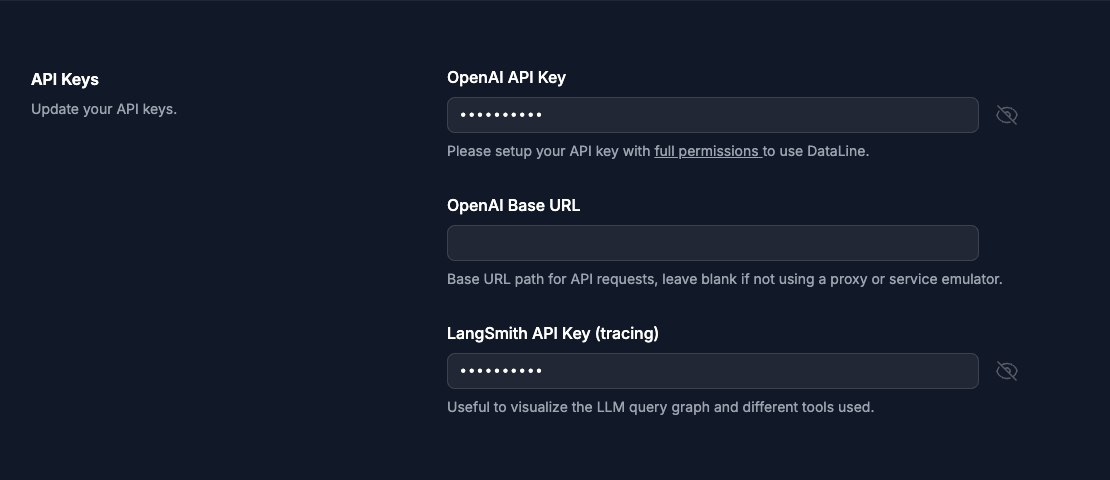

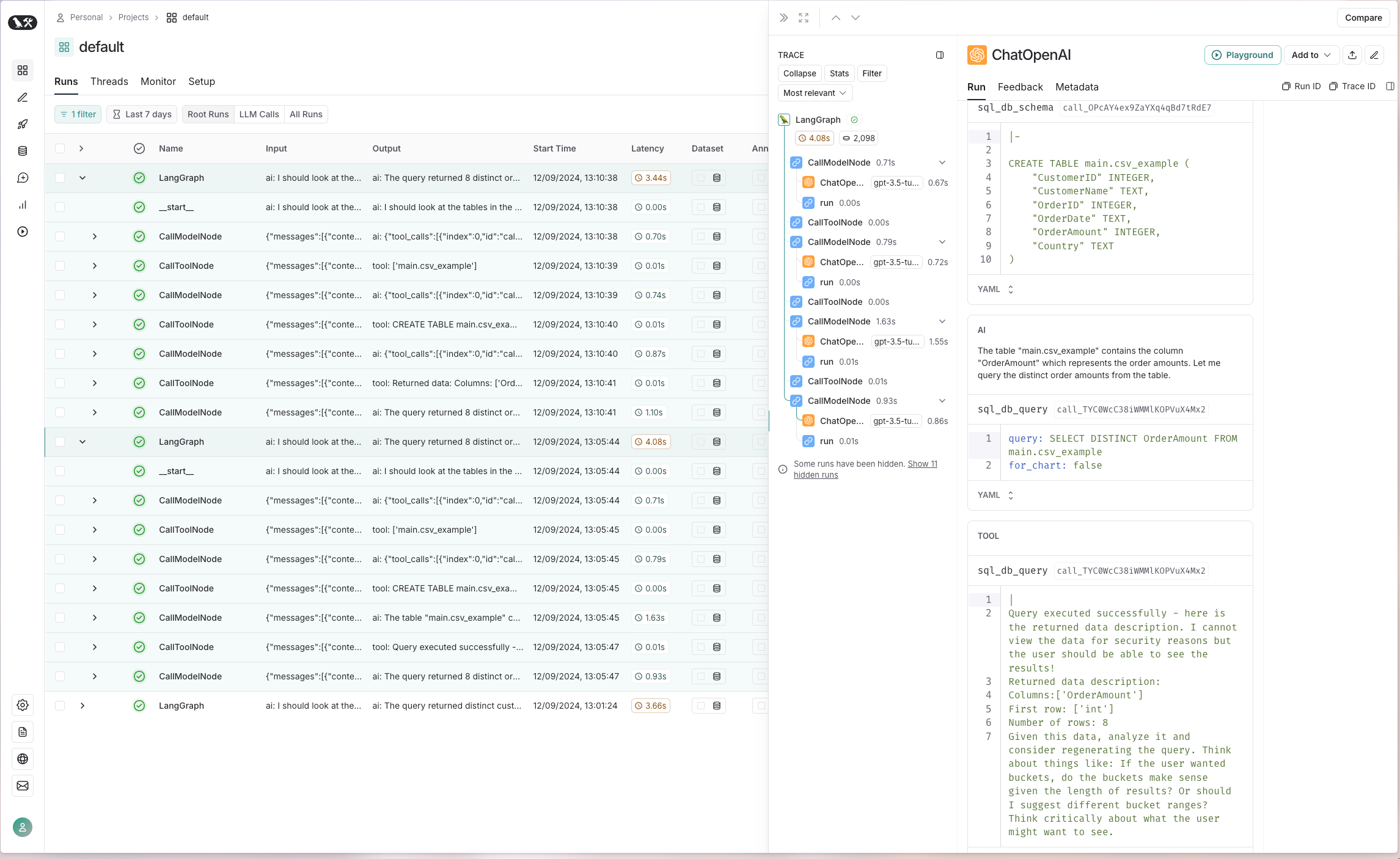

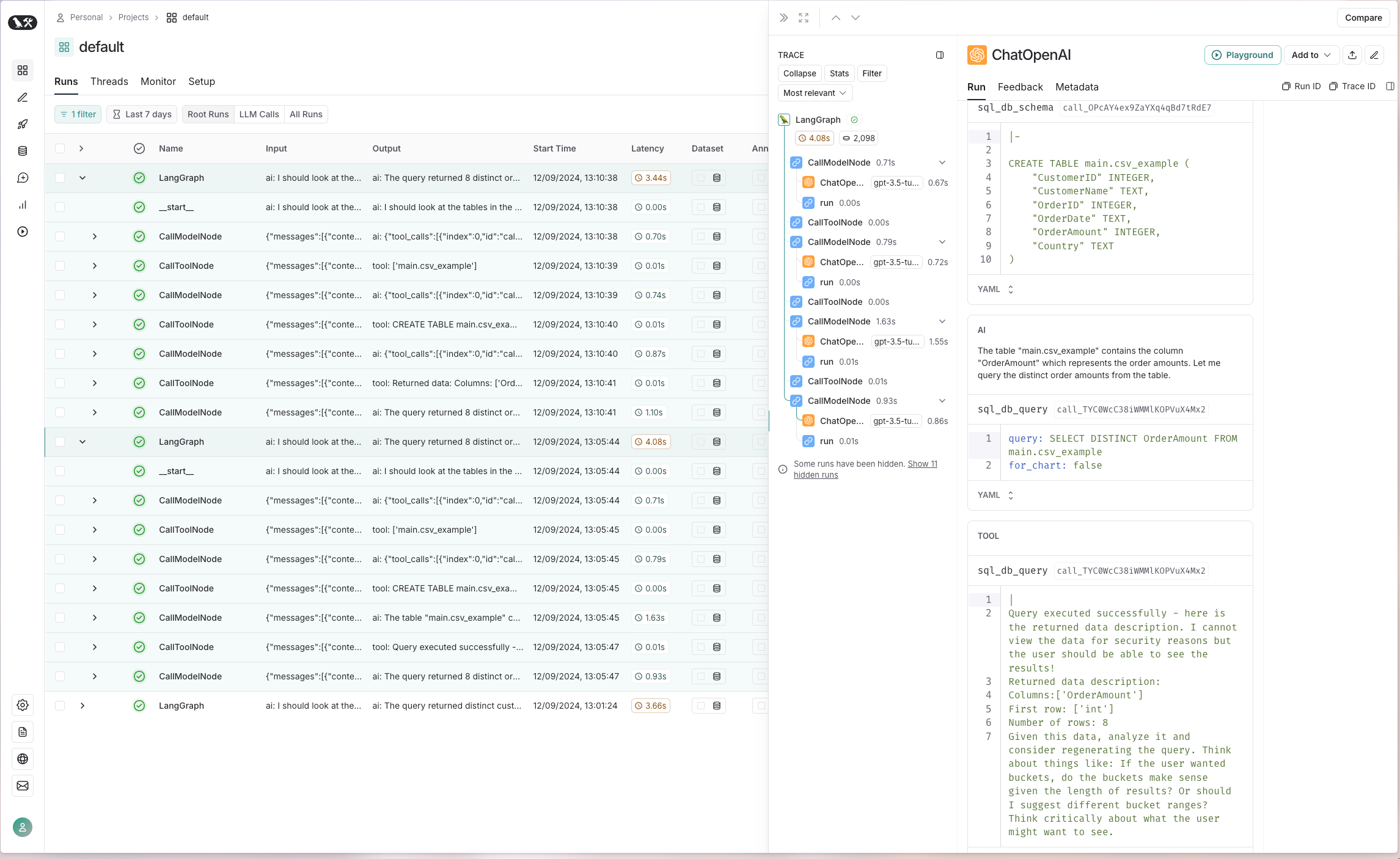

The integration with ChatGPT works by only transmitting metadata such as table names and data types - the actual data remains protected. To investigate this in more detail, you can use LangSmith to analyze the various API calls during a conversation in detail. To do this, you simply need to create an API key at Smith LangChain and store it in the settings:

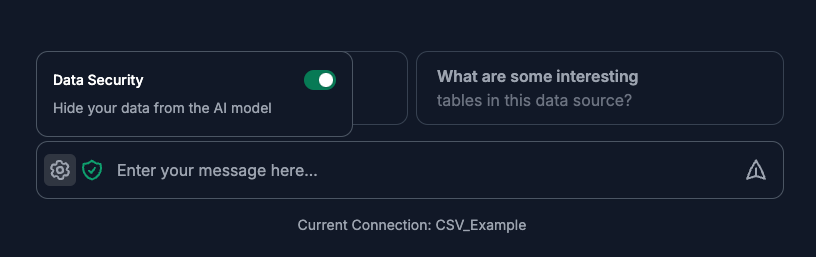

In the message box, you have the option of deactivating or activating the data protection function using the settings symbol. You can tell whether this function is active by the small green security symbol.

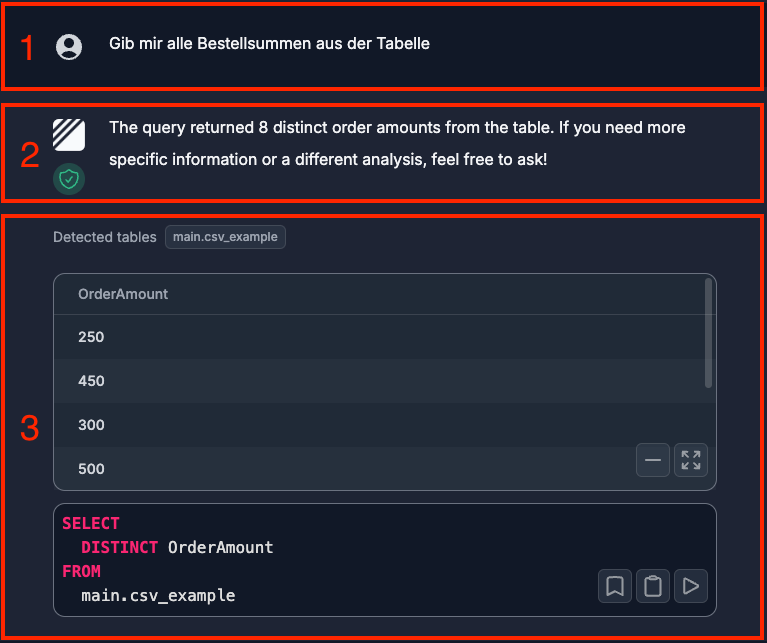

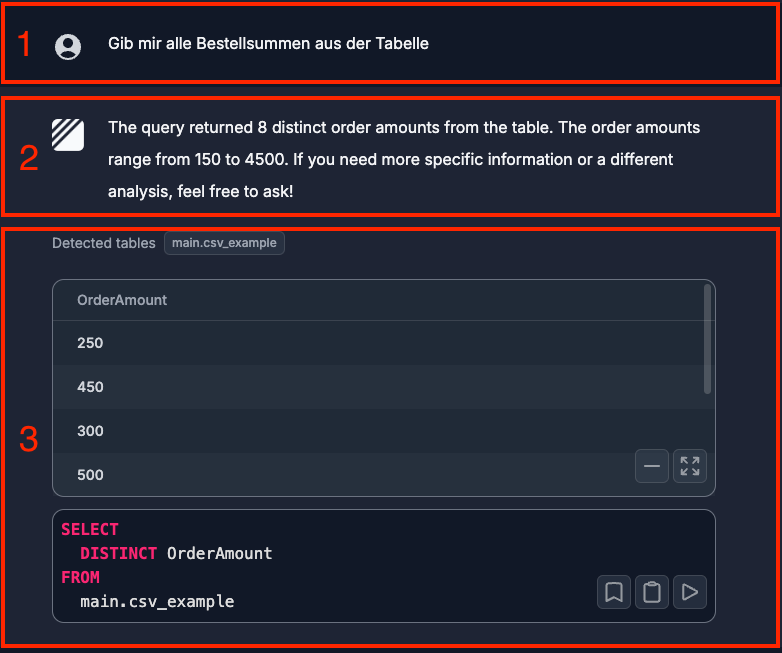

In the first case, we make a request and want to retrieve all available order amounts from the data source. This message can be seen in the first area. In the second area we see the answer that comes back from ChatGPT. Here you can already see that there is no precise information to be seen which comes from the table. You can also see here that the data should be protected.

In the third area, in turn, you can see the result of the provided SQL statement, which is executed against the local SQLite database. This gives you the correct result from the actual data source.

Now of course we want to check this by testing it with Smith LangChain. To do this, we open the corresponding call. There you can see the individual tools that were used. In our case, we are interested in the sql_db_schema and sql_db_query tools. Here we can see that only the information about the table names and data types is transmitted. It is also noted in the second tool that the data may not be displayed.

Now we want to perform the same query with the data protection function deactivated. In the first area we see the same query as before.

In the second section, two important differences are immediately apparent. Firstly, the green data protection symbol is no longer visible, and secondly, the ChatGPT response now reveals data that comes directly from the data source.

The third area is no different in this case, however.

And to be absolutely sure, we check the input for the corresponding tool via Smith LangChain. On the right side we now see that several pieces of data are being transmitted. On the one hand, three complete entries are sent, and on the second tool, all OrderAmount values are transmitted.

Usage / Tip

Edit SQL Statement

Once you have received an SQL statement as a result, you can not only edit it, but also execute it again directly to update the result. This allows for quick adaptation and refinement of queries without having to start the entire process from scratch. This allows users to work iteratively and gradually optimize their queries until they get the desired result.

False Information

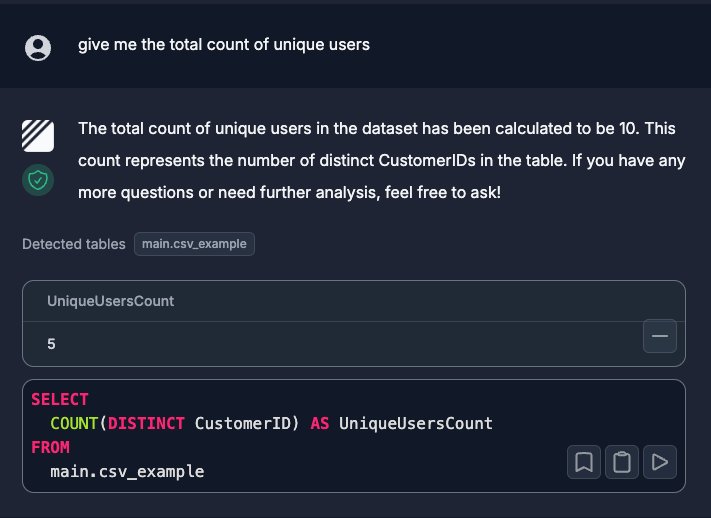

Unfortunately, I have noticed several times that the descriptions in the text are not always correct, even though the SQL statement is correct and the result is displayed correctly.

In this example, we see that five unique CustomerIDs are output as the correct result. However, the text incorrectly states that there are ten.

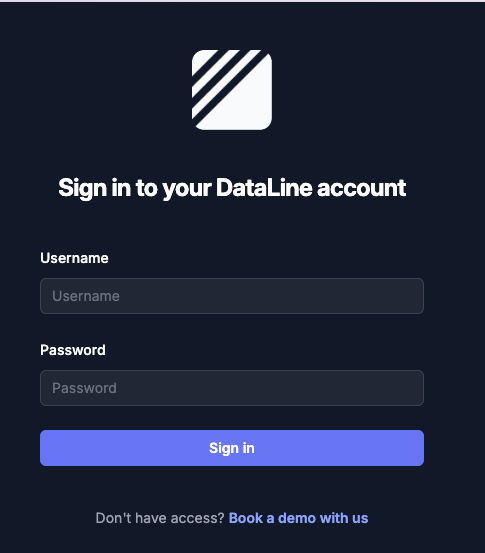

Authentication

To increase security, authentication can be set up in DataLine. This is done by setting environment variables for the username and password. In the example, the variables AUTH_USERNAME and AUTH_PASSWORD are set with the values b-nova and techhub. DataLine is then started, which makes access only possible after successful authentication. This protects access to sensitive data, especially in self-hosted environments.

|

|

Hands-On

Installation and Configuration

To install DataLine via Homebrew, run the following command:

|

|

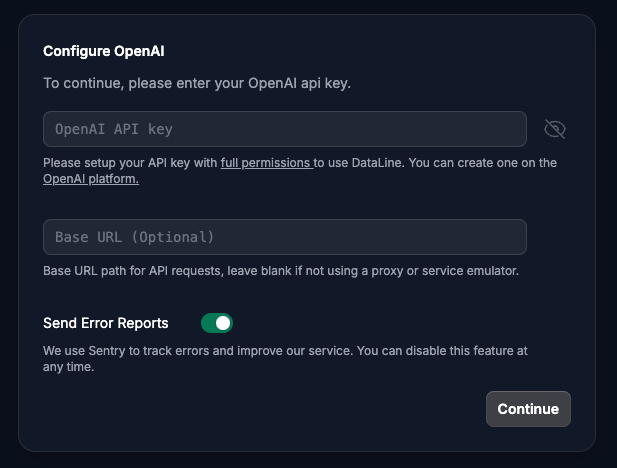

After installation, the server can be started with the command dataline. This will open a new window in the browser where the first step is to insert the API key from OpenAI. This API key can be created on the OpenAI platform.

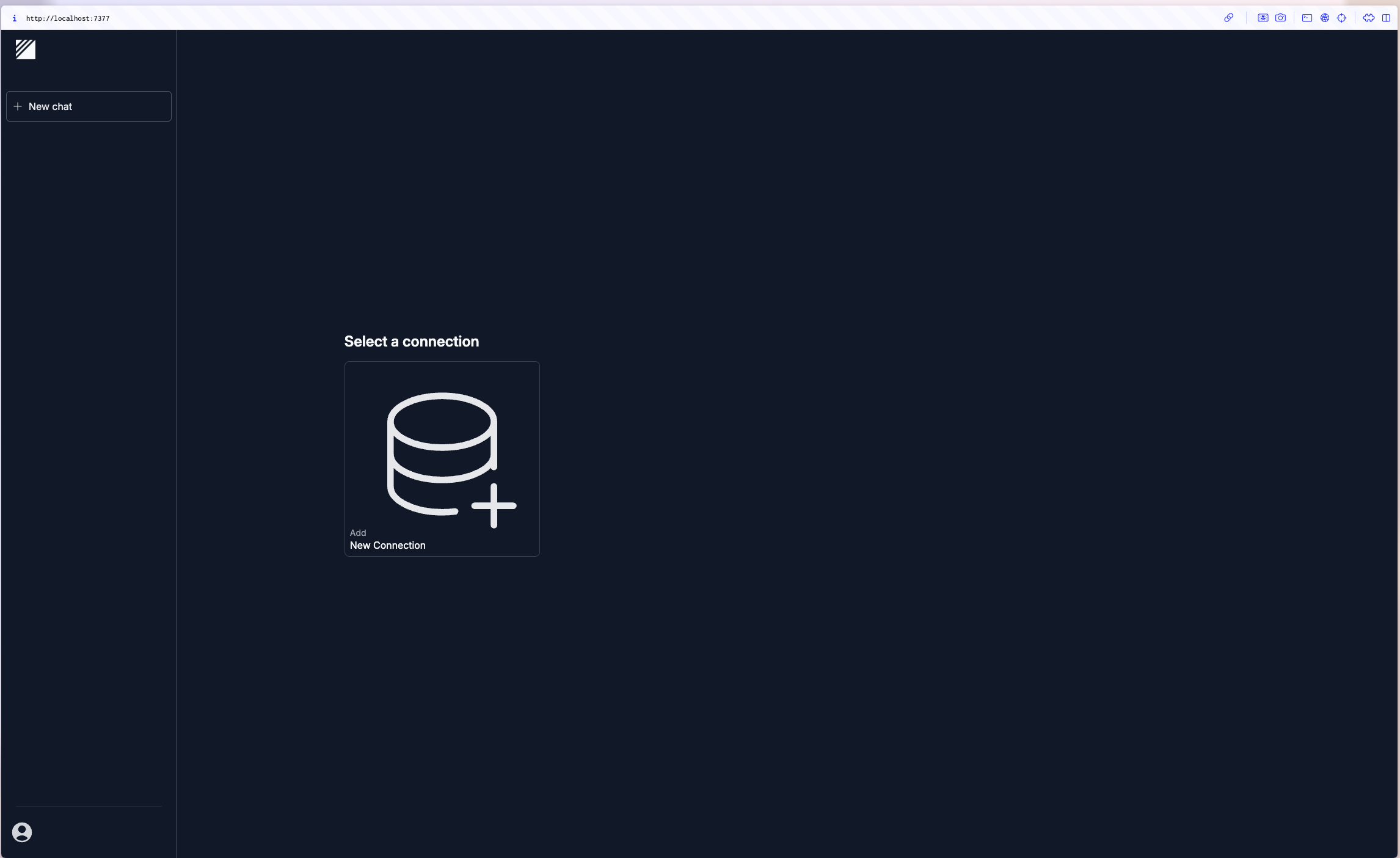

Add Data Sources

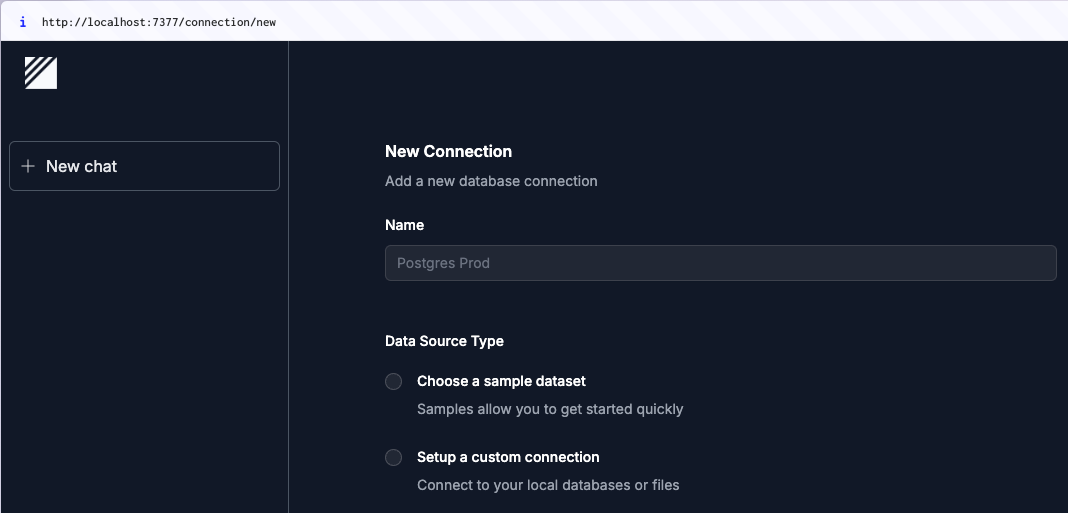

Once this is done successfully, the initial view of DataLine will appear, where you have the option to set up a new data source. Here you can connect different databases or file formats such as CSV or Excel to start the analysis.

Clicking on “Add new Connection” opens a new window where you can define a name for the connection and decide whether you want to use sample data or integrate your own data. For our hands-on part, we’ll start with a simple CSV file. If you select “Setup a custom connection”, you have the option of choosing between different data sources, including databases such as SQLite and files in CSV, Excel or sas7bdat format.

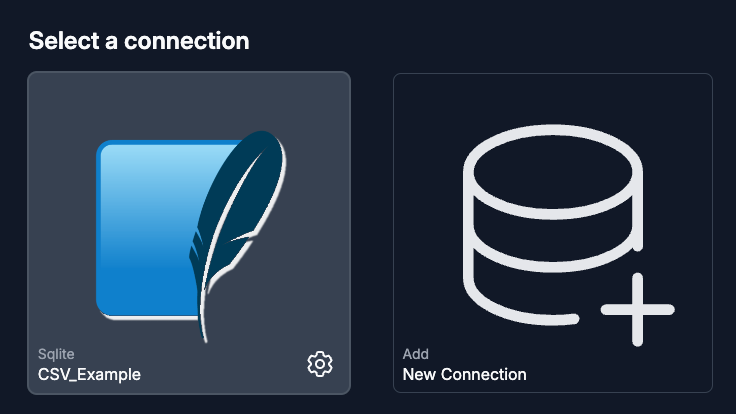

After selecting the CSV file, a drop-down area opens where the file can be dragged and dropped or selected from the file system using the context menu. Once the file has been uploaded successfully, the new connection appears on the start page and the data source is ready for analysis.

If you now take a closer look at the settings, you will see that an SQLite database is created with the uploaded data. This makes it possible to search and analyze the data using SQL statements in the first place.

Analyze CSV File

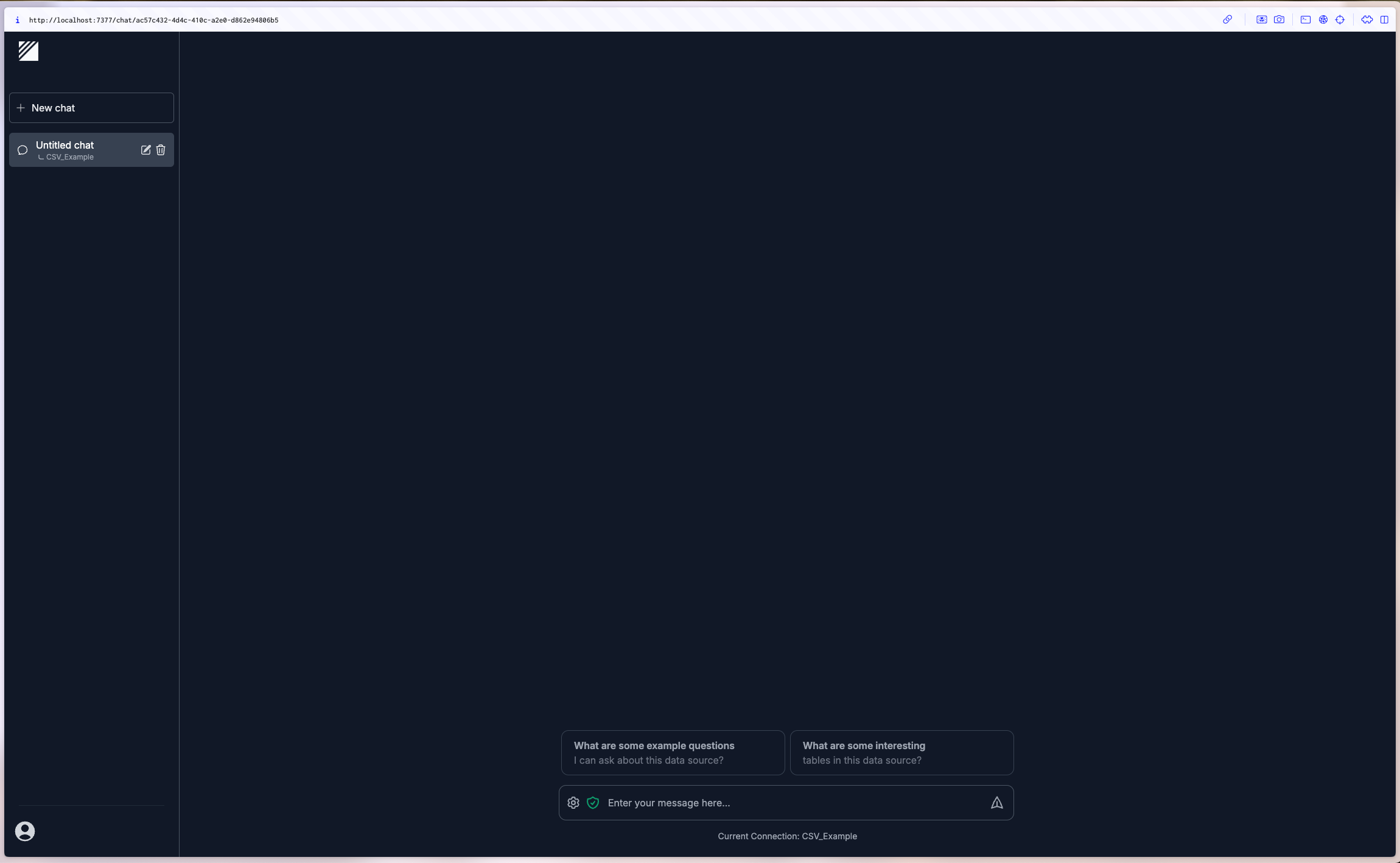

Clicking on the connection opens the typical ChatGPT window, and you can start working with the data source and making queries right away.

This is the content of our sample data file:

|

|

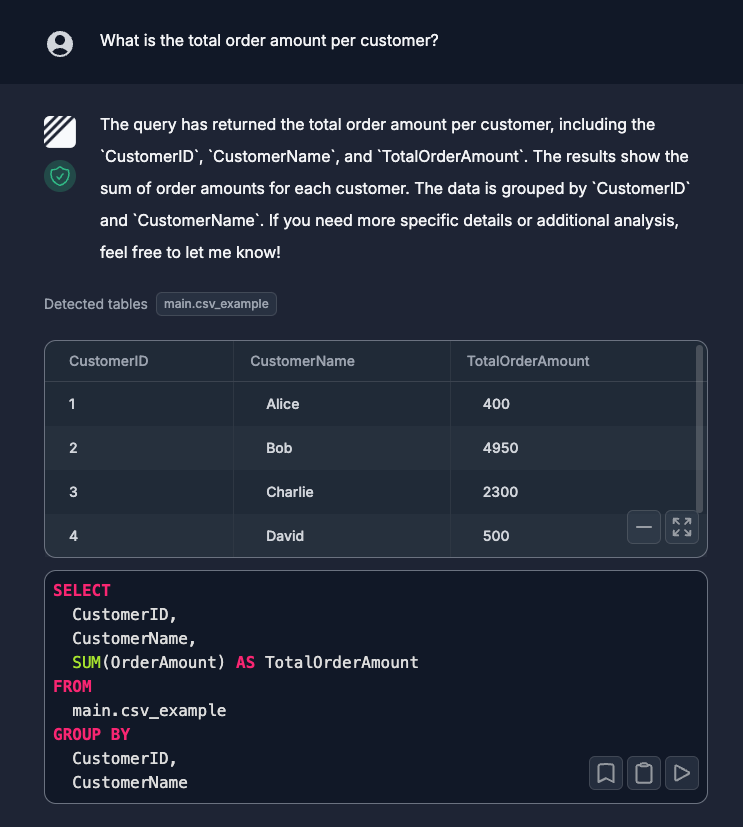

If you now want to determine the total order quantity per customer, you can easily request this in natural language. DataLine displays the result in the form of a table and generates the appropriate SQL statement, which is also displayed. This gives you both the answer and the underlying SQL code quickly.

In the log of the running server you can see the following log:

|

|

Of course, you can also have this visualized. You have the option of displaying this as a bar, line or pie chart.

Analyze Excel File

Even if you have added an Excel file with 10,000 data records as a new connection, the data can be queried in a reasonable time:

Conclusion

DataLine is a promising tool that offers both technical and non-technical users an easy way to analyze and visualize data. What particularly convinced me was the user-friendliness and the fact that it does exactly what it promises. The installation was uncomplicated thanks to Homebrew and completed within a few minutes, so you can get started right away.

It’s particularly impressive how well the natural language queries work, especially in English. This shows the potential of ChatGPT. Support for various data sources such as CSV, Excel and MySQL databases ran smoothly in my tests, and integration was effortless. The speed is also fine for someone who regularly works with ChatGPT.

A big plus of DataLine is the local storage of the data, which is a huge security advantage for companies that do not want to have their data in the cloud. Each user is responsible for protecting their local data, which is particularly relevant for sensitive information.

One limitation to be aware of is the current file size limit of 500MB for local files. This could be improved in future versions, as could support for more data sources. Nevertheless, DataLine offers real added value, especially for users who do not have deep technical SQL knowledge but want to analyze large amounts of data quickly. Tech users also benefit from the automated SQL generation and the ability to manually adjust statements.

Overall, DataLine is a useful tool for data analysis and offers potential for future development. It’s definitely worth a look, especially if you’re looking for quick and easy data analysis without the hassle of programming.

This techup has been translated automatically by Gemini