In the first part of our AWS native series, we want to take a closer look at a very well-known service from Amazon Web Services.

Content Delivery Network

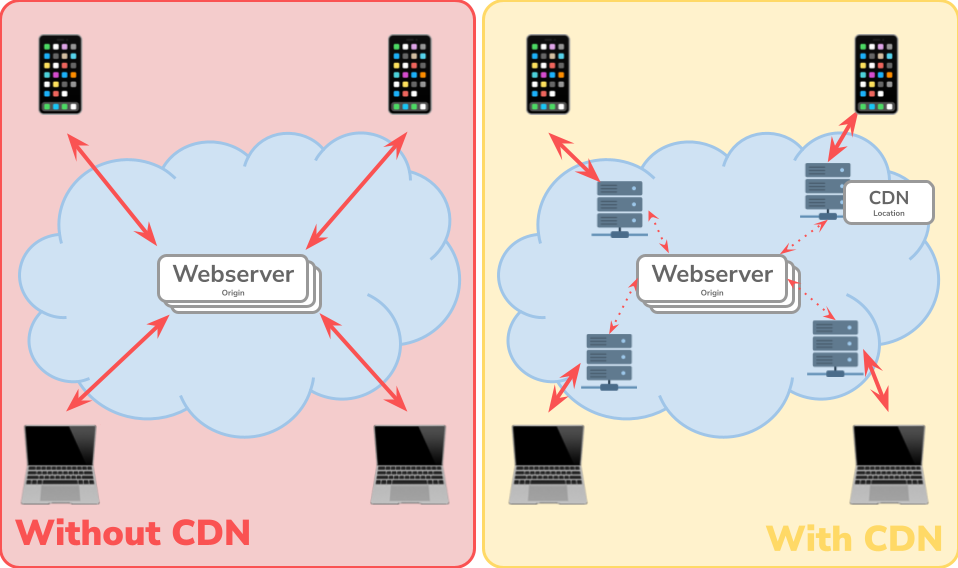

Before we turn our attention to the AWS service, let’s take a brief look at the theory behind a CDN. As the name suggests, this is a geographically distributed network that consists of groups of servers that work together to deliver internet content quickly. The aim here is that relevant resources such as HTML pages, JavaScript files etc. can be loaded with low latency.

At this point I would like to mention that a CDN is not a web host. The actual content is located at a central source, the web host (also called the ‘origin’). This can be an AWS S3 bucket, for example. The CDN uses caching to reduce the load on the actual web host. If we are in Basel, for example, and call up a website hosted in California, then without a CDN our response comes directly from the USA. This results in a longer loading time because the physical distance, and thus the number of network hops (intermediate steps from one network segment to the next), is large. With a CDN, the request is automatically routed to the nearest CDN location. In the case of CloudFront, this would be Zurich or Frankfurt, for example. If the content has already been cached there, it can be sent to us much faster.

Technically speaking, the traffic runs via a separate URL, which initially has nothing to do with your own domain. The best practice here is to register a static path on your own domain and redirect it to the CDN provider. For example, the customer would see the address https://b-nova.com/static for images, with the path /static automatically forwarding to the address of the CDN provider, e.g. http://d111111abcdef8.cloudfront.net/....

CDN advantages

- Faster loading times: Due to geographical proximity, content can generally be accessed quickly and with fewer hops.

- Lower web hosting costs: The actual web host is relieved of load

- Increased reliability & stability: Since the content is distributed all over the world.

In addition, a CDN can, for example, protect against DDoS attacks through certain security mechanisms.

CDN disadvantages

- Increased complexity compared to a single web hosting server.

- Less transparency: Since the content is completely distributed externally across the globe.

- Loss of control: Since the content is distributed around the world.

Basically, however, it can be said that in many cases it makes sense to use a CDN, as the advantages clearly outweigh the disadvantages.

CloudFront

CloudFront is the Content Delivery Network (CDN for short) service from AWS. Simply put, it can be used to deliver static and dynamic web content such as HTML, CSS, JavaScript and image files quickly and easily to the user. The user always lands on the location closest to them in CloudFront’s global network. Specifically, there are currently over 300 locations in more than 90 cities across 47 countries. In this way, Amazon builds up a network of individual nodes in order to always deliver content with the lowest possible latency.

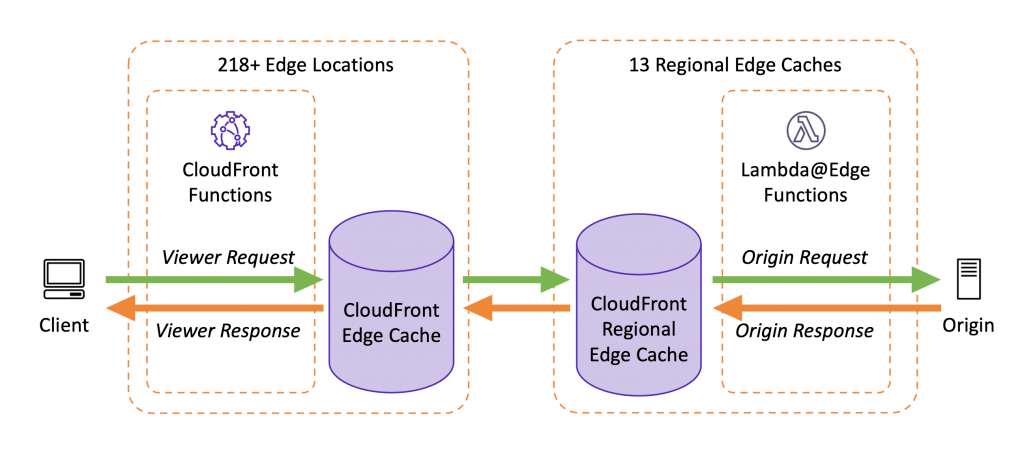

Such individual locations of the global network are called Edge Locations. Often there are several edge locations in one city; Frankfurt, for example, is currently home to 15. In addition to these Edge Locations, there are currently 13 so-called Regional Edge Caches, which sit as upstream caches in front of the actual origin. In this way, an edge location can fetch the content from the regional edge cache without putting load on the origin. The Regional Edge Caches thus sit in front of the origin, but by default are not connected to each other (more on this later). In Europe, for example, there are currently three regional edge caches: in Dublin, Frankfurt and London.

Content Delivery with CloudFront

As an example and simplification, let’s take a closer look at the process a request goes through. We are again in Basel and want to query a well-known Cupertino website.

Without CloudFront

- Basel (CH): We submit the request.

- City A: Routing

- City B: Routing

- City C: Routing

- …

- Cupertino (US): Request arrives at the web host, page is rendered and sent back to us the same way.

- …

- City C: Routing

- City B: Routing

- City A: Routing

- Basel (CH): Content is there

With CloudFront, first request:

- Basel (CH): We send the request

- Zurich (CH), AWS Edge location: Content for this request is not yet cached.

- Frankfurt (DE), AWS Regional Edge Cache: Content for this request is not yet cached.

- City B: Routing

- City C: Routing

- …

- Cupertino (US): Request arrives at web host, page is rendered and sent back to us the same way

- …

- Frankfurt (DE), AWS Regional Edge Cache: Response is received and written to cache

- Zurich (CH), AWS Edge location: Reply is received, written to the cache and streamed directly to the user

- Basel (CH): Content is there.

With CloudFront, further requests:

Each further request is much shorter, as the content is already cached in the edge location in Zurich.

- Basel (CH): We send the request.

- Zurich (CH), AWS Edge location: Content for this request is cached.

- Basel (CH): Content is there
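The two CloudFront flows above can be sketched as a small chain of caches. This is only a toy model under simplifying assumptions (no TTLs, no network, a single file); the class names `Origin` and `CacheTier` are made up for illustration and are not part of any AWS API.

```python
class Origin:
    """Stands in for the web host; counts how often it is actually hit."""

    def __init__(self, content):
        self.content = content
        self.hits = 0

    def fetch(self, path, trace):
        self.hits += 1
        trace.append("origin: served")
        return self.content[path]


class CacheTier:
    """A cache that asks its upstream (another tier or the origin) on a miss."""

    def __init__(self, name, upstream):
        self.name = name
        self.upstream = upstream
        self.store = {}

    def fetch(self, path, trace):
        if path in self.store:
            trace.append(f"{self.name}: hit")
            return self.store[path]
        trace.append(f"{self.name}: miss")
        body = self.upstream.fetch(path, trace)
        self.store[path] = body  # written to the cache on the way back
        return body


origin = Origin({"/index.html": "<html>hello</html>"})
regional = CacheTier("regional (Frankfurt)", origin)
zurich = CacheTier("edge (Zurich)", regional)

first, second = [], []
zurich.fetch("/index.html", first)   # first request: travels all the way to the origin
zurich.fetch("/index.html", second)  # further request: answered by the edge location
print(first)
print(second)
```

The first trace passes through both cache tiers and the origin, the second one stops at the edge location, and the origin is contacted only once.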

If another user were to call up the website from Italy, for example, the request would look like this:

- Bari (IT): We submit the request

- Palermo (IT), AWS Edge location: Content for this request has not yet been cached.

- Frankfurt (DE), AWS Regional Edge Cache: Content for this request is cached

- Palermo (IT), AWS Edge location: Response is received, cached and streamed directly to the user.

- Bari (IT): Content is there.

Here you can see that the regional cache has already cached the content (due to previous requests from e.g. Switzerland) and returns it directly. It is clearly visible that, as soon as the content is cached, the latency is lower and the entire process is significantly shorter.

By means of one (or more) so-called Cache Policies, it is possible to control exactly which information is part of the cache key and how long content stays in the cache. For example, content can be cached based on the session cookie.

AWS also has a clever solution for the first request, when nothing is cached yet: as soon as the first byte arrives at the edge location, it is forwarded directly to the user as a kind of stream. This allows, for example, live streams to be viewed via the edge location.
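To make the idea of a cache key more tangible, here is a minimal sketch. `CachePolicy` and `cache_key` are hypothetical helpers, not the CloudFront API, and the model is simplified (for instance, it ignores the domain name and assumes lower-case header names).

```python
from dataclasses import dataclass
from urllib.parse import parse_qsl, urlsplit


@dataclass(frozen=True)
class CachePolicy:
    """Hypothetical stand-in for a cache policy: key ingredients plus TTL."""
    query_strings: tuple = ()
    headers: tuple = ()
    cookies: tuple = ()
    ttl_seconds: int = 86400


def cache_key(url, headers, cookies, policy):
    """Build the key from the path and only the values the policy lists."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    return (
        parts.path,
        tuple((n, query.get(n)) for n in sorted(policy.query_strings)),
        tuple((n, headers.get(n)) for n in sorted(policy.headers)),
        tuple((n, cookies.get(n)) for n in sorted(policy.cookies)),
    )


url_a = "https://example.com/news?lang=de&utm_source=mail"
url_b = "https://example.com/news?utm_source=ad&lang=de"

# Only "lang" is part of the key: both users share one cached object.
shared = CachePolicy(query_strings=("lang",))
a = cache_key(url_a, {}, {"session": "alice"}, shared)
b = cache_key(url_b, {}, {"session": "bob"}, shared)
print(a == b)

# Adding the session cookie to the key caches per session instead.
per_session = CachePolicy(query_strings=("lang",), cookies=("session",))
c = cache_key(url_a, {}, {"session": "alice"}, per_session)
d = cache_key(url_b, {}, {"session": "bob"}, per_session)
print(c == d)
```

The fewer values end up in the key, the more requests map to the same cached object; putting a session cookie into the key trades hit ratio for per-user content.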

Another possibility that CloudFront offers us are so-called Failover Origins. For example, a backup S3 bucket can be configured as a failover origin from which the content is retrieved if the primary origin has a problem. This works completely behind the scenes; the user is not aware of it. Should this occur, CloudFront’s real-time logging can be very helpful for analysing the problem. In an error case, a custom error page from CloudFront could also be displayed directly to the customer.
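The failover idea can be sketched in a few lines. This is an illustration only: the set of status codes, the function `fetch_with_failover` and the two fake origins are assumptions made for this example, not CloudFront configuration.

```python
# Assumed set of status codes that should trigger a failover.
FAILOVER_STATUS_CODES = {500, 502, 503, 504}


def fetch_with_failover(path, primary, secondary):
    """Try the primary origin; on a failover status code, ask the secondary."""
    status, body = primary(path)
    if status in FAILOVER_STATUS_CODES:
        status, body = secondary(path)
        return status, body, "secondary"
    return status, body, "primary"


def broken_primary(path):
    """Fake primary origin that is currently unavailable."""
    return 503, ""


def backup_bucket(path):
    """Fake backup bucket that still has a copy of the content."""
    return 200, f"backup copy of {path}"


result = fetch_with_failover("/logo.png", broken_primary, backup_bucket)
print(result)
```

The caller only sees a successful response; which origin answered is visible solely in the third element, which plays the role of a log entry here.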

Performance

Now a few words about performance. Of course, the use of a CDN alone should improve performance for both the customer and the internal web hosting, backend and database systems. By constantly caching content, the load is reduced, which speaks in favour of smaller servers, databases, etc. Thus, money can be saved directly.

CloudFront also offers very detailed and professional options to further improve performance or the Cache Hit Ratio. For example, we can use Origin Shield to ensure that content is queried centrally from a single regional edge cache. Let’s assume we roll out a large update for a web-based game with many global users via CloudFront. Since the files are new and many users open the game at the same time, many edge locations receive requests, which in turn query their regional edge caches. Since the new files are not yet available in any regional cache, requests from all 13 current regional caches would go to the origin. With Origin Shield, the regional edge caches can be connected via one central cache. In this way, only one request goes to the origin; all other regional edge caches then receive the result from the central cache.
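The effect of such a central cache layer can be illustrated with a small count of origin requests. This is a toy model with made-up class names; it processes requests one after another and ignores concurrency, TTLs and everything else a real CDN has to deal with.

```python
class Origin:
    """Fake origin that counts incoming requests."""

    def __init__(self):
        self.requests = 0

    def fetch(self, path):
        self.requests += 1
        return f"content of {path}"


class Cache:
    """Minimal cache in front of an upstream (origin or another cache)."""

    def __init__(self, upstream):
        self.upstream = upstream
        self.store = {}

    def fetch(self, path):
        if path not in self.store:
            self.store[path] = self.upstream.fetch(path)
        return self.store[path]


def origin_requests(regional_caches, use_shield):
    """Let every regional cache fetch a new file once; return the origin load."""
    origin = Origin()
    upstream = Cache(origin) if use_shield else origin  # optional central layer
    regionals = [Cache(upstream) for _ in range(regional_caches)]
    for regional in regionals:
        regional.fetch("/game/update.bin")
    return origin.requests


without_shield = origin_requests(13, use_shield=False)
with_shield = origin_requests(13, use_shield=True)
print(without_shield, with_shield)
```

Without the central layer every regional cache fetches the new file from the origin itself; with it, the origin sees a single request.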

CloudFront Functions and Lambda@Edge Functions

However, CloudFront is not limited to caching alone; it also makes dynamic behaviour possible by executing certain functions. This concept is called edge computing, which Raffael already introduced to us in his TechUp “Latency-free with Edge Computing”.

Before we take a closer look at the two functions, let’s get to know their triggers in more detail. Basically, there are four triggers in the AWS request handling environment to which functions can be bound:

- Request: Trigger on the request.

  - Viewer: Executed on every request before the CloudFront cache is checked.

  - Origin: Executed on every cache miss before the request is routed to the origin.

- Response: Trigger on the response.

  - Viewer: Executed on every response after it comes from the origin or from the cache.

  - Origin: Executed on every cache miss after the response comes from the origin.

Figure: Source: https://aws.amazon.com/de/blogs/aws/introducing-cloudfront-functions-run-your-code-at-the-edge-with-low-latency-at-any-scale/ (25.01.2022)
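The order in which the four triggers fire can be sketched as follows. The function `handle` and the trigger labels are purely illustrative; the sketch only mirrors the rule that viewer triggers run on every request while origin triggers run on cache misses only.

```python
def handle(path, cache, fired):
    """Serve one request and record which triggers would fire."""
    fired.append("viewer-request")       # every request, before the cache lookup
    if path in cache:
        body = cache[path]
    else:
        fired.append("origin-request")   # cache miss only, before going to the origin
        body = f"origin content for {path}"
        fired.append("origin-response")  # cache miss only, after the origin answered
        cache[path] = body
    fired.append("viewer-response")      # every response, from cache or origin
    return body


cache = {}
miss, hit = [], []
handle("/index.html", cache, miss)  # nothing cached yet
handle("/index.html", cache, hit)   # now served from the cache
print(miss)
print(hit)
```

On a cache miss all four triggers fire; on a cache hit only the two viewer triggers do.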

CloudFront Functions

A CloudFront Function is a serverless function that can be used to execute code at a cloud provider. These functions were released in May 2021 and run in the edge location. It is important to make the distinction here from Lambda@Edge or even normal Lambda functions. A CloudFront Function runs as close as possible to the user in an Edge Location and has a maximum execution time of less than one millisecond(!). Because a CloudFront Function runs so close to the user, there are certain limitations. For example, the function has no network or filesystem access. It is also important to mention that a CloudFront Function has no access to the actual request body. In addition, CloudFront Functions are very limited in size, but in contrast to Lambda@Edge functions they are also cheaper. Technically speaking, it is a JavaScript function that is ECMAScript 5.1 compliant.

Its classic use-cases are:

- Enrichment of HTTP headers (request & response)

- URL Rewriting

- URL Redirecting based on the language of the user

- Validating JSON Web Tokens (JWT), e.g. for time-limited URLs (without calling an IdP)

Since the CloudFront Function runs directly in the Edge Location, it can only be bound to the Request Viewer and Response Viewer Trigger.
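As an illustration of the language-based redirect use case, here is the decision logic sketched in Python. Real CloudFront Functions are written in JavaScript, so this is not deployable as is; the request and response dictionaries, the list of supported languages and both function names are assumptions for this example. The sketch also takes the languages in header order and ignores the q-weights.

```python
SUPPORTED = ("de", "en", "fr")  # hypothetical list of site languages
DEFAULT = "en"


def pick_language(accept_language):
    """Return the first supported language in an Accept-Language header value."""
    for item in accept_language.split(","):
        tag = item.split(";")[0].strip().lower()  # drop the ";q=..." weight
        primary = tag.split("-")[0]               # "de-CH" -> "de"
        if primary in SUPPORTED:
            return primary
    return DEFAULT


def viewer_request(request):
    """Redirect un-prefixed paths to a language path; pass others through."""
    uri = request["uri"]
    if any(uri == f"/{lang}" or uri.startswith(f"/{lang}/") for lang in SUPPORTED):
        return request
    lang = pick_language(request["headers"].get("accept-language", ""))
    return {"statusCode": 302, "headers": {"location": f"/{lang}{uri}"}}


swiss_user = {
    "uri": "/pricing",
    "headers": {"accept-language": "de-CH,de;q=0.9,en;q=0.8"},
}
print(viewer_request(swiss_user))
```

The logic needs neither network nor filesystem access and never touches the request body, which is why this kind of task fits the restrictions described above.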

Lambda@Edge Functions

A Lambda@Edge Function, on the other hand, runs “further back” than a CloudFront Function. The Node.js or Python-based function runs in the regional edge caches and has a maximum execution time of 5 seconds for viewer triggers (origin triggers may run for up to 30 seconds). Limitations regarding network, filesystem or request body access do not apply here, and the size restrictions are more generous.

Their classic use cases are as follows:

- Increasing the cache hit ratio through URL normalisation or adjusting the cache headers.

- Dynamic Content Generation: Adaptation / generation of custom content based on the request or response.

- Image scaling (depending on the end device)

- Render pages with so-called logic-less templates such as Mustache

- A/B testing

- Security: Authentication & Authorisation via e.g.

  - Request Signing to Origin (Zero-Trust)

  - Token Authentication e.g. via JWT

  - Bot Protection and other Security Features & Headers

- Rewriting of URLs (Pretty URLs), Routing, Matching and custom Load Balancing and Failover Logic.

Here you can directly see that the Lambda@Edge Functions are more powerful compared to the CloudFront Functions. In contrast to the CloudFront Function, they can be bound to all four triggers. Further information and details on both functions can be found here.
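As a small example of the URL normalisation use case, here is a sketch of a Python handler for a viewer request trigger. It assumes the usual Lambda@Edge event layout with the request under `Records[0].cf.request`; the list of tracking parameters and the test event are made up for illustration.

```python
from urllib.parse import parse_qsl, urlencode

# Assumed list of parameters that should not influence caching.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign"}


def lambda_handler(event, context):
    """Drop tracking parameters and sort the rest so equal pages share a URL."""
    request = event["Records"][0]["cf"]["request"]
    params = [
        (key, value)
        for key, value in parse_qsl(request["querystring"], keep_blank_values=True)
        if key not in TRACKING_PARAMS
    ]
    request["querystring"] = urlencode(sorted(params))
    return request  # returning the request lets processing continue with it


# Local invocation with a hand-written event, for illustration only.
event = {
    "Records": [
        {"cf": {"request": {"uri": "/news", "querystring": "utm_source=mail&page=2&lang=de"}}}
    ]
}
normalised = lambda_handler(event, None)
print(normalised["querystring"])
```

Requests that differ only in tracking parameters or parameter order end up with the same query string, which is what raises the cache hit ratio.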

Security

CloudFront offers a wide range of security functions, also called ‘security at the edge’. There are various access control features such as:

- HTTPS: CloudFront can enforce the use of HTTPS.

- Private Content: Content can be published so that it is only accessible via “signed URLs” or with “signed cookies”; this is enforced directly ‘at the edge’.

- Access restrictions to load balancers: It can be configured that load balancers can only be accessed via CloudFront; in case of a DDoS attack, the load balancers are better protected this way.

- AWS WAF: Using the AWS Web Application Firewall, further “rules” can be enforced, to which CloudFront can react with a custom error page, for example.

- Geographical restriction: CloudFront can be used to set that the content may only be accessed from Switzerland, for example.

- Field-Level Encryption: Sensitive data can be encrypted ‘at the edge’ at field level (public / private key).

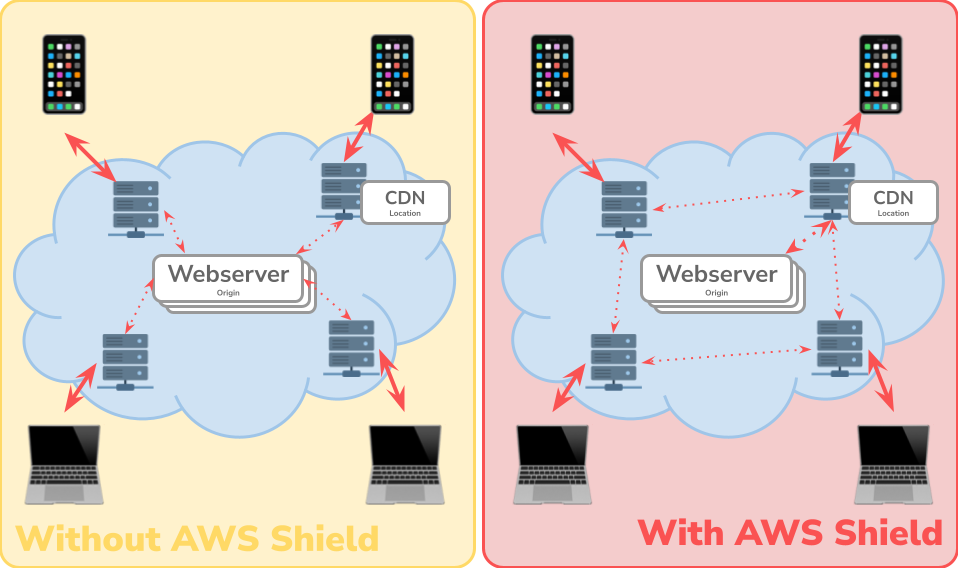

- AWS Shield: This service is automatically activated and protects against DDoS and other attacks (e.g. HTTP Desync Protection) and visualises attacks in a dashboard.

There are also various other security features such as Advanced Ciphers, Protocol Enforcement and others. For example, TLS encryption can be handled directly ‘at the edge’, very close to the user, with the AWS Certificate Manager. CloudFront is of course compatible with other AWS services such as the above-mentioned AWS WAF, so the services can be combined.

Use cases

These components, properties and features result in the following exemplary use cases:

- Deliver static content faster: Images, style sheets, JavaScript files, etc. can be retrieved much faster via Edge Location than directly from the S3 bucket, for example.

- Video and livestreaming: To keep latency low, entire videos or only individual fragments can be cached on the edge.

- Increase security standards through HTTPS, field-level encryption and other features provided by CloudFront.

- Request and response handling through routing, failover, customisation etc. without burdening the origin

- Publish private content through special rules or geo-filters

In principle, CloudFront pursues the overriding goal of every CDN: content should be made available more quickly, the cache-hit ratio should be maximised and the Origin, the actual server, should be relieved.

Outlook

Now we have got to know CloudFront and the theoretical concept behind it.

In my next TechUp, we want to set up a complete CloudFront application with various components and get to know everything hands-on. The aim of this deep dive is to build up practical experience and to get to know the advantages and disadvantages. Stay tuned! 🔥