Cilium is an open-source, eBPF-based networking, security, and observability plugin for Kubernetes and other container orchestration tools. It is written mainly in Go and backed by the company Isovalent; the first commit to Cilium dates back about six years. Before we take a closer look at Cilium, let’s first understand what eBPF is and what exactly makes it so special.

eBPF

Nowadays, Linux is very widespread and deeply rooted in the cloud landscape.

Almost every container runs on a Linux kernel.

Observability and security tools must always be installed and configured ‘on top’, in the so-called user space of the system.

Unfortunately, user space offers no or only limited access to system and kernel resources.

Certain control mechanisms therefore have to be implemented additionally, for example as a sidecar proxy.

This often results in higher complexity and higher latency.

There has long been a way to add functionality to the kernel: kernel modules.

However, these are usually difficult to implement and pose a major risk while the operating system is running.

If something goes wrong in the code, the entire kernel crashes, and with it the containers running on it.

eBPF (extended Berkeley Packet Filter) represents a new way to run code in the kernel. It is often described as a general-purpose execution engine. It gives you the opportunity to teach the kernel new functionality without endangering the running system.

From a technical point of view, a program or tool in user space loads its eBPF bytecode into the kernel using the bpf() system call.

A JIT compiler (JIT stands for just in time) then compiles this bytecode into native machine code.

The program is then attached to specific hooks and events in kernel space and executed there.

You can attach your own function to each of these hooks and, for example, drop all IPv4 traffic or continuously collect metrics about running processes.

The so-called verifier ensures security and stability. It detects infinite loops, possible crashes, and similar problems and rejects such programs before they run, so the kernel itself is not affected by errors in the code. It also enforces bounded execution and applies further checks so that no memory containing sensitive data is exposed.

Another benefit is access to different kernel helper functions and data types.

There is also a kernel memory area, known as eBPF maps, which can be used to share key/value data with other eBPF programs or even with user-space programs.

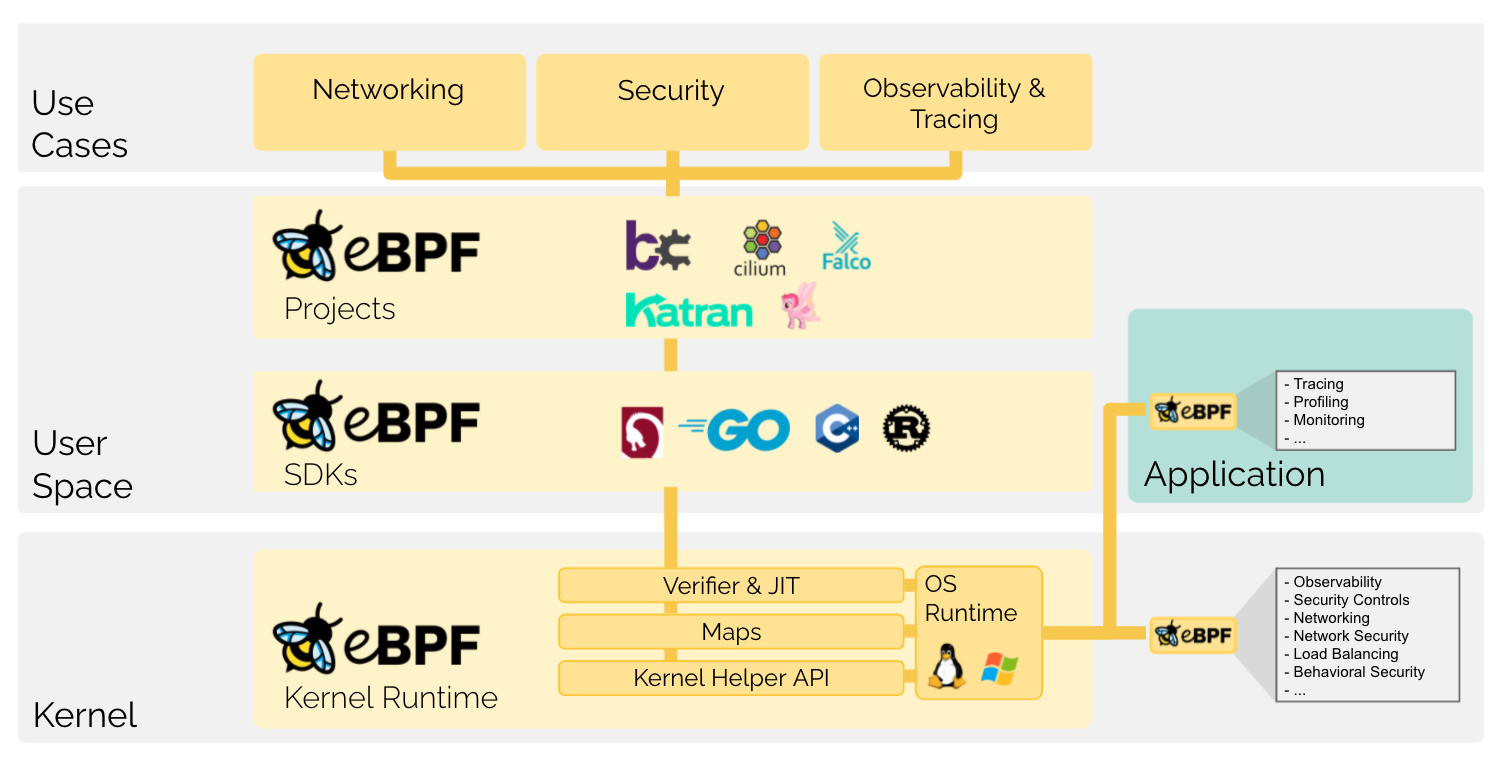

Figure source: https://ebpf.io/what-is-ebpf (accessed 09.10.2021)

The areas of application are wide-ranging. You will mostly find eBPF in networking, tracing, monitoring, and security, because it gives you direct access to kernel resources.

Big players such as Facebook, Netflix, and Google are already using eBPF. Not least because of this, eBPF has become one of the fastest-growing subsystems of the Linux kernel.

Tooling

The tooling around eBPF is very diverse. There are different compilers and toolchains that can, for example, generate eBPF bytecode from a normal C program. Toolchains and compiler targets for other languages such as Python, Go, and Rust are also available.

eBPF itself provides a large number of helper functions and programs as interfaces to almost all native components such as storage, hardware, network, and more. The currently available tools can be found at ebpf.io/projects. The number of tools shows very well that the ecosystem is still young and growing.

eBPF in Cloud Native Environments

Since, as already mentioned, the Linux kernel is anchored very deeply in the cloud landscape, eBPF is ideal for use in cloud-native applications and environments. For example, eBPF can replace the well-known iptables, which is slow and cumbersome by comparison. Likewise, the network path can be simplified enormously in setups that would otherwise use a sidecar proxy container, for example with Envoy.

Both approaches offer almost identical functionality, but with eBPF there are far fewer self-implemented processes and complex architectures ‘under the hood’. The areas of application and use cases are very diverse, since you can create and implement your own functionality in the kernel, regardless of whether the workload runs directly on a node or in a container.

Cilium

Cilium is one of the first and most advanced use cases of eBPF, which aims to bring the benefits of eBPF to the Kubernetes world. It addresses the new needs for scalability, security, and visibility that container workloads have today. Basically, the field of application of Cilium can be divided into three large areas:

- Networking

- Observability

- Security

Networking

Cilium acts as a CNI implementation (CNI stands for Container Network Interface) that specializes in scalable, very large container workloads. Here we find the classic split into control plane and data plane again. eBPF (instead of iptables) is used here for load balancing, tracing, and ingress/egress rules. Another feature is service-based load balancing, which also relies fully on eBPF.

Several clusters can be connected with Cilium’s Cluster Mesh. This is used, for example, for failover, recovery, or geo-splitting, and can be operated without additional gateways or proxies. Technologies such as tunneling or routing are used here.

Observability

Cilium provides network monitoring which, according to its own statements, is identity-aware. This means that far more than just IP addresses can be logged and tracked. Thanks to eBPF, Kubernetes labels can be used for internal traffic, and DNS names or similar for external traffic. This is especially useful when troubleshooting or analyzing connectivity issues.

In addition, layer 3, 4, and 7 metrics are delivered in a Prometheus-compatible format, along with additional information about network policies. You can see directly from a traffic entry whether something was blocked and by which network policy.

Another big advantage is the so-called API-aware network observability.

Traditional firewalls are limited to the IP and TCP layers, while Cilium can provide Layer 7 API-based information on protocols such as HTTP, gRPC and Kafka.

This data is also available in Hubble and Prometheus.

HTTP(S) traffic can also be examined closely using TLS Interception.

Security

Cilium implements and extends the Kubernetes NetworkPolicy and offers DNS- and API-aware matching in addition to label and CIDR matching. For example, access to certain API paths can be controlled and, if necessary, blocked via Cilium’s network policy. In a real-world scenario, this allows only certain parts of a service to be exposed, for example monitoring or maintenance endpoints.

The information about network traffic described in the previous section can be retained long-term for audits or later analysis, e.g. in the event of an attack.

Using the so-called Transparent Encryption, Cilium encrypts traffic within a cluster or between clusters.

This relies on the kernel’s own IPsec feature and requires only a one-time configuration in Cilium, with no sidecar proxies or application changes.

Hands on

Cilium

Now let’s install Cilium and get to know it a little better. For the sake of simplicity, we’ll use a local Kubernetes cluster running on minikube.

If Minikube is not already installed, we can install it on macOS as follows:
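
The original command block is missing at this point. A minimal sketch, assuming Homebrew is already set up on the Mac (the exact command the author used is not known):

```shell
# Install minikube via Homebrew (assumes Homebrew is available)
brew install minikube

# Check that the binary is on the PATH
minikube version
```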

Then we start minikube and install the Cilium CLI. This CLI allows us to install Cilium itself, check the current status of the installation, and enable or disable numerous features.
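
One possible sequence for this step is sketched below. It assumes that minikube should start without a preinstalled CNI so that Cilium can take over networking, and that the Cilium CLI is installed from the Homebrew formula cilium-cli. Both are assumptions; the Cilium documentation also describes downloading a release archive instead.

```shell
# Start a local cluster without a preinstalled CNI plugin
# (assumption: flag behavior may differ between minikube versions)
minikube start --cni=false

# Install the Cilium CLI (assumes Homebrew)
brew install cilium-cli

# Confirm that the CLI is available
cilium version
```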

Now the Cilium CLI is ready and we can install Cilium itself in our Kubernetes cluster. The CLI detects our local minikube and picks a suitable configuration for it.
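
A minimal sketch of the installation, assuming the current kubectl context points at the minikube cluster:

```shell
# Install Cilium into the cluster of the current kubectl context
cilium install

# Block until the Cilium components report ready
cilium status --wait
```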

Via cilium status we can see the status of our installation and validate whether everything was installed correctly.

Fortunately, Cilium offers us a command for automated testing of our cluster installation:
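
The command in question is presumably the connectivity test that is also used later in this article to generate traffic:

```shell
# Deploy test workloads and run Cilium's built-in connectivity checks
cilium connectivity test
```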

Hubble

Now we want to enable Hubble, Cilium’s observability layer, and get cluster-wide information about the network and security layer. Additionally, here we enable the UI option to have a graphical interface.

In this step, we then install the Hubble client and set up a port forward to Hubble.
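
These steps could look roughly like this. The sketch assumes that the Hubble client is installed from a Homebrew formula named hubble; the Cilium documentation alternatively describes downloading a release archive.

```shell
# Enable Hubble together with its web UI
cilium hubble enable --ui

# Install the Hubble client (assumes Homebrew provides a hubble formula)
brew install hubble

# Forward the Hubble Relay port to the local machine, in the background
cilium hubble port-forward &
```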

Now we can check our installation with hubble status and query the so-called flow API with hubble observe to get information about the network traffic.

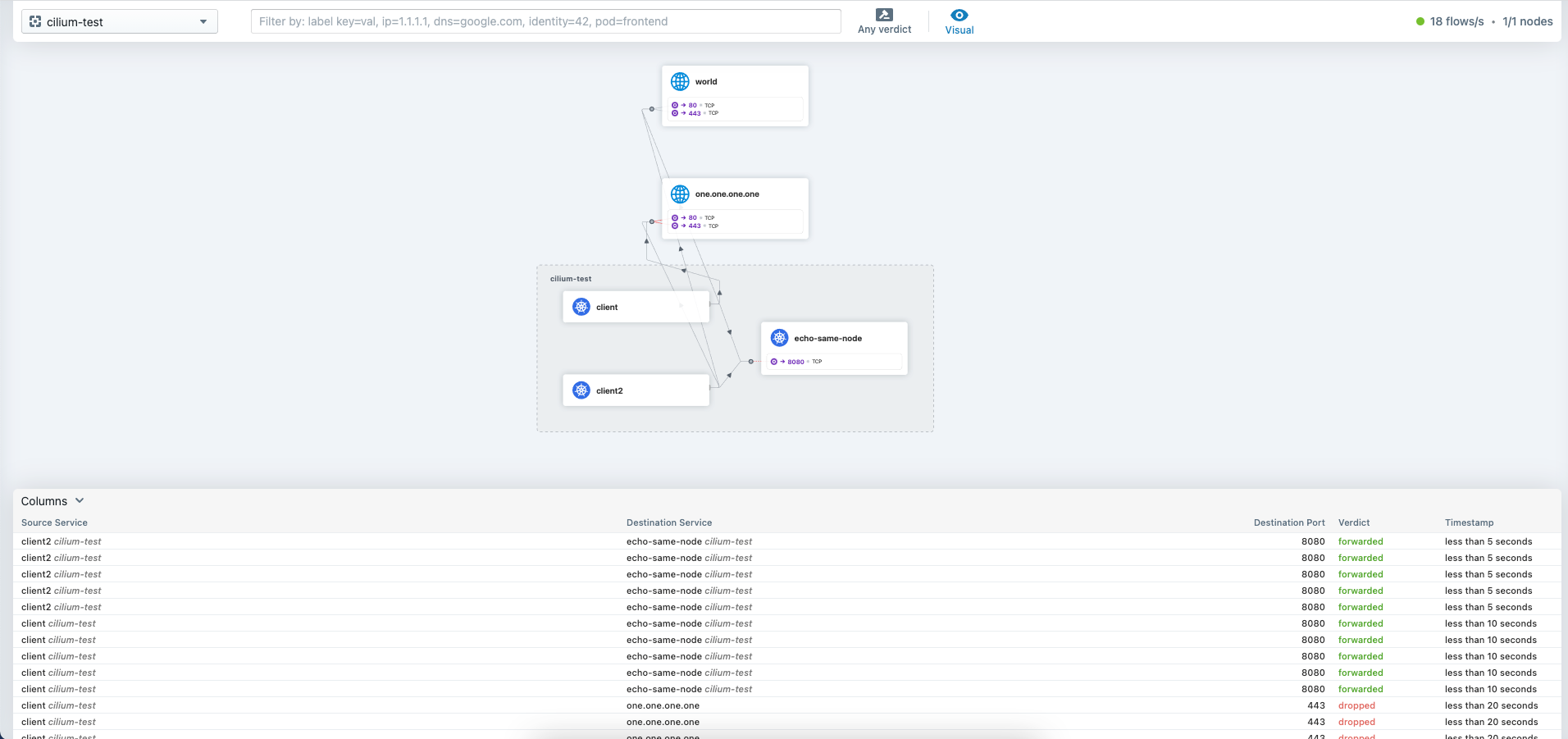

In addition, we can start the UI with cilium hubble ui, which can then be called up under http://localhost:12000/.

Here we can now generate some traffic with cilium connectivity test and take a closer look at it in the UI.

Here we can see at a glance the connections between the individual pods, which calls were successful and other network information.

Now let’s install a demo project and take a closer look at Network Policies and traffic.

We can import and start this directly via kubectl.
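
Judging by the service names used below, the demo is the Star Wars example from Cilium's getting started guide. A sketch under that assumption; the URL and the version branch in it are assumptions and may need to be adapted to the installed Cilium release:

```shell
# Deploy the demo application (deathstar service, tiefighter and xwing pods)
kubectl create -f https://raw.githubusercontent.com/cilium/cilium/v1.10/examples/minikube/http-sw-app.yaml

# Check that the pods and the service are up
kubectl get pods,svc
```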

With the following command we can query information from the Cilium pod; here, for example, we get back all endpoints. In Cilium, each pod corresponds to an endpoint.
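
A sketch of such a query, assuming Cilium runs as the cilium DaemonSet in the kube-system namespace (in newer Cilium releases the binary inside the pod is called cilium-dbg instead of cilium):

```shell
# List all endpoints known to one Cilium agent, including their policy status
kubectl -n kube-system exec ds/cilium -- cilium endpoint list
```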

The output shows that no ingress or egress policy is currently applied.

This means that all our calls are allowed in the network and we can successfully execute the following two commands.
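
Assuming the Star Wars demo from the Cilium documentation, the two calls are landing requests from the xwing and tiefighter pods to the deathstar service:

```shell
# Landing request from the xwing pod
kubectl exec xwing -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing

# Landing request from the tiefighter pod
kubectl exec tiefighter -- curl -s -XPOST deathstar.default.svc.cluster.local/v1/request-landing
```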

Now we get to know Cilium’s first custom resource, the CiliumNetworkPolicy.

As described above, it supports additional matching options compared to the standard Kubernetes NetworkPolicy.

This is how we restrict traffic to the ‘deathstar’ service on Layer 3 & 4.
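
A sketch of such a policy, modelled on the Star Wars demo. The labels org and class, the policy name rule1, and the file name are assumptions taken from that demo, not from this article. The policy only admits pods labelled org=empire to the deathstar on TCP port 80:

```shell
# Write the layer 3/4 policy manifest to a local file
cat > sw_l3_l4_policy.yaml <<'EOF'
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L3-L4 policy to restrict deathstar access to empire ships only"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
EOF
```

The file can then be applied to the cluster with kubectl apply -f sw_l3_l4_policy.yaml.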

We then see that our first call from the ‘xwing’ service to the ‘deathstar’ service now fails with a timeout. In the Endpoint listing, we see that the Ingress policy is now enabled.

In addition to the layer 3 & 4 network policies, layer 7 network policies can also be used to block specific paths.
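
As a hedged illustration, the policy can be extended with an HTTP rule so that only POST requests to /v1/request-landing are allowed. The labels, the path, and the file name are again assumptions based on the Star Wars demo:

```shell
# Write a policy that adds a layer 7 HTTP rule on top of the layer 3/4 match
cat > sw_l3_l4_l7_policy.yaml <<'EOF'
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "rule1"
spec:
  description: "L7 policy to restrict access to a specific HTTP call"
  endpointSelector:
    matchLabels:
      org: empire
      class: deathstar
  ingress:
  - fromEndpoints:
    - matchLabels:
        org: empire
    toPorts:
    - ports:
      - port: "80"
        protocol: TCP
      rules:
        http:
        - method: "POST"
          path: "/v1/request-landing"
EOF
```

After applying the file with kubectl apply -f sw_l3_l4_l7_policy.yaml, requests from empire pods to any other path or with any other method should be rejected at layer 7, while the landing request is still allowed.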

Conclusion

So what makes Cilium different from other network and observability providers? Unfortunately, this question is difficult to answer, because similar or identical functionality is also offered by other tools such as Istio and Ambassador.

However, Cilium does its job under the hood in a different way, and this is exactly where the big advantage lies: the actual functionality sits much closer to the kernel, is faster, and the overall process is more lightweight. Whether and when the use of Cilium makes sense or is appropriate compared to other players can certainly be discussed.

This text was automatically translated with our golang markdown translator.