In this TechUp we head in the cloud DevOps direction and take a closer look at the service mesh platform Istio.

We start by analyzing the underlying problem and how the service mesh pattern is meant to help.

The problem to be solved

Let’s assume we have an online shop with different microservices that all communicate with each other and therefore depend on each other. For example, a product can only be placed in the shopping cart if the cart, inventory and price microservices all respond correctly.

In the current implementation there are many moving parts, all of which are tightly coupled. The individual microservices communicate with each other directly and unsecured via URLs or even IP addresses; there is no load balancing and no fault tolerance. Each microservice is implemented independently, and the following concerns are implemented directly in each one:

- Communication Configs (Endpoints, Ports etc.)

- Security Handling (Authorization, Authentication)

- Retry Logic

- Logging

- Metrics & tracing

The big problem with this architecture is obvious: everything is implemented in-house, and the microservices are no longer lean but carry a lot of overhead. Microservice developers can no longer concentrate on pure business logic but also have to implement things such as logging, tracing, etc.

Of course you could use frameworks that offer all of this functionality, but then you would no longer be technology and language independent.

The solution - Service Mesh

Service mesh is a pattern or paradigm that is used in complex microservice architectures.

In general, the pattern routes all service-to-service communication through a dedicated, fully integrated infrastructure layer. This layer lets you control traffic, implement load balancing or collect detailed monitoring metrics, for example. Because these features are now part of the cluster's infrastructure layer rather than of the services, our microservices also become technology and language independent.

A second big advantage of this paradigm is that the microservices stay lean and contain only business logic, so developers no longer have to worry about implementations that are not business relevant.

In a service mesh, a so-called sidecar container is started for each microservice and communicates with the actual container via localhost. Both containers run in the same pod, and all network traffic (incoming and outgoing) runs through the sidecar proxy.

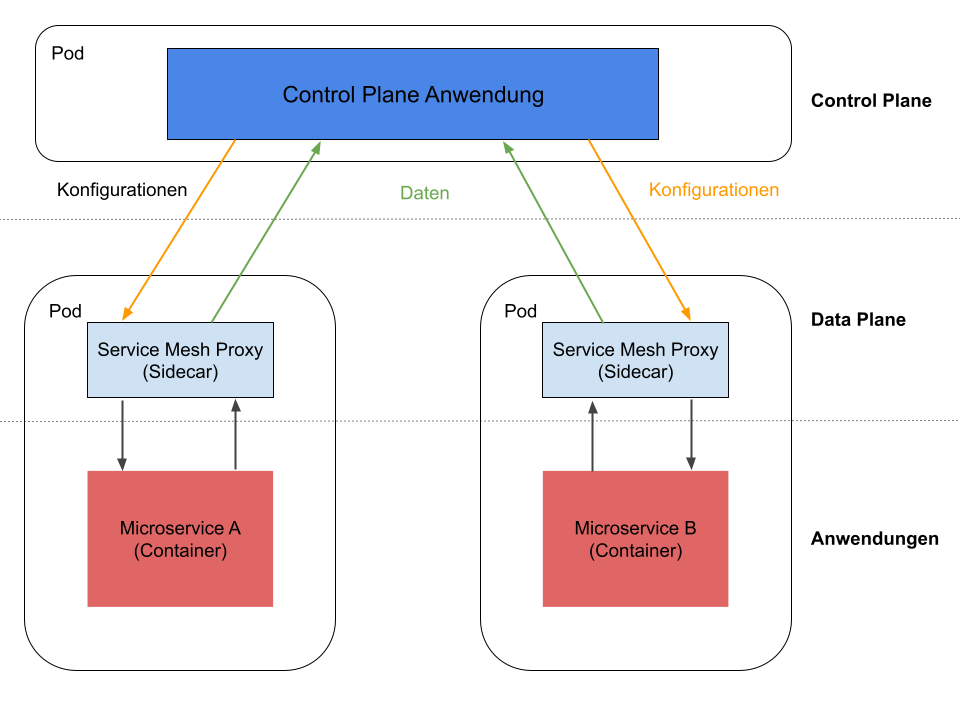

As can be seen in the graphic above, a service mesh can be divided into three parts:

- Control Plane - the management and configuration layer of the service mesh architecture; it deploys and configures the sidecar proxies and collects all data

- Data Plane - the proxy layer, where intelligent sidecar proxies take over the communication between microservices

- Applications - the application layer where the actual microservices run

But what are the exact advantages of a service mesh in practice?

- Canary Releasing - route only a portion of the users to a new version in order to test new functionality in isolation, for example only users of a certain browser version

- A/B Testing - simple routing of certain users in certain cases to another version; routing can be based on URLs or even HTTP headers

- Security - all traffic in the cluster is encrypted, and every caller is correctly authenticated and authorized

- Transparency - extensive monitoring, logging and tracing of all calls in the cluster as well as of the communication between microservices (keyword: identifying bottlenecks)

- Robustness - timeout handling, retry mechanism, circuit breaking etc.
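To make the canary and robustness points more concrete, here is a minimal sketch of how a mesh can express them as configuration instead of application code, using Istio (the platform examined in the rest of this article) as the example. The snippet only writes a VirtualService manifest to a local file. The service name reviews, the subset names v1 and v2, the file name and all numbers are illustrative assumptions, and a DestinationRule that defines the two subsets is assumed to exist already.

```shell
# Write a hypothetical canary manifest to a local file (nothing is applied yet).
cat > reviews-canary.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews                # in-mesh service name (assumption)
  http:
  - route:
    - destination:
        host: reviews
        subset: v1         # current version
      weight: 90
    - destination:
        host: reviews
        subset: v2         # canary version
      weight: 10
    timeout: 10s           # overall deadline per request
    retries:
      attempts: 3          # retry a failed call up to three times
      perTryTimeout: 2s    # deadline for each individual attempt
EOF

echo "wrote reviews-canary.yaml"
```

Applying the file with kubectl apply -f reviews-canary.yaml would activate the split. Shifting more traffic to the new version is then only a matter of changing the two weights, without touching the microservice itself.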

Of course, the service mesh pattern is not the only approach to solving the problem. An API gateway, for example, could be another solution, but it does not follow the decentralized approach of microservices.

Istio

Istio is an open source service mesh platform that controls how microservices share data with each other.

The foundation stone was laid with the first commit on November 19, 2016, in cooperation with Google, IBM and Lyft (Uber competitor). Today, more than 15,000 commits later, version 1.9 of Istio has been released and is constantly being further developed by a broad community. If you take a look under the hood, you can see that Istio itself is almost exclusively written in Go.

There are currently many different service mesh implementations and platforms; Istio keeps gaining popularity and is widely regarded as one of the most popular among them.

What exactly does Istio do?

Simply put, Istio attaches a small sidecar container to each microservice, which takes care of topics such as communication, security, metrics and logging. Istio is not limited to Kubernetes: it can also be used in combination with Nomad and Consul or even with on-premise systems such as VMs.

The platform basically consists of two components, the istiod control plane and the Envoy proxy.

In addition, the overall setup offers numerous integration options. For example, a monitoring system such as Prometheus, a dashboard such as Grafana or a tracing system such as Jaeger can be integrated.

Istio in practice

Now we take the deep dive in practice and install the Istio sample project, using Istio 1.9.4, the latest version at the time of writing.

If we follow the steps on Istio's own getting started page, we have an Istio service mesh up and running within a few minutes. We used our Kubernetes playground on a DigitalOcean cluster for this.

We use the 'bookinfo' sample that ships with Istio, which is a good way to get to know Istio and all of its components.

In principle, every Kubernetes microservice project can be converted into an Istio service mesh. This is done via a specific label on the namespace (istio-injection=enabled), which tells Istio to inject Envoy sidecar proxies. In this example we enable Istio injection for the default namespace.

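The labeling step can be sketched as follows. The command is the one used in Istio's getting started guide; the second command is only an optional check added here for illustration, and both assume that kubectl points at the cluster in question.

```shell
# Enable automatic Envoy sidecar injection for the default namespace.
kubectl label namespace default istio-injection=enabled

# Optional check: list all namespaces together with the value of the label.
kubectl get namespace -L istio-injection
```

Pods that already existed before the label was set only receive a sidecar once they are recreated, because the injection happens when a pod is created.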

In general, neither the actual K8s resources nor the application need to be adapted.

You only have to make sure that the K8s resources can always be identified via the app label.

As soon as the complete ‘bookinfo’ example is running, let’s take a closer look at two Istio resources:

- Gateway: the entry point from the outside into the service mesh, more precisely the Istio ingress gateway

- VirtualService: responsible for the routing within the service mesh

In the bookinfo example, both resources are defined in the file samples/bookinfo/networking/bookinfo-gateway.yaml. By default, a gateway to the outside is opened there on port 80.

With the VirtualService, certain routes are forwarded to our K8s productpage service on port 9080.

We now want to adapt this example so that our sample application can only be reached under a specific host name and only with a specific cookie.

For this we only have to adapt the VirtualService definition: our match clause gets an additional regex match on the HTTP cookie header.

We also restrict the hosts field so that the route only applies to requests addressed to a certain host name.

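The original definition is not reproduced here, so the following is only a sketch of what such an adapted VirtualService could look like. The host bookinfo.example.com, the cookie user=tester and the file name are placeholder assumptions, and only the /productpage route of the sample is shown. The article makes the change directly in samples/bookinfo/networking/bookinfo-gateway.yaml; the sketch writes to a separate scratch file purely to stay self-contained.

```shell
# Sketch of the adapted VirtualService, written to a scratch file.
cat > bookinfo-virtualservice.yaml <<'EOF'
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: bookinfo
spec:
  hosts:
  - "bookinfo.example.com"     # placeholder host instead of the default "*"
  gateways:
  - bookinfo-gateway
  http:
  - match:
    # uri and headers in the same match entry must both be satisfied
    - uri:
        exact: /productpage
      headers:
        cookie:
          # placeholder cookie; matches user=tester anywhere in the header
          regex: '^(.*;\s*)?user=tester(;.*)?$'
    route:
    - destination:
        host: productpage
        port:
          number: 9080
EOF

echo "wrote bookinfo-virtualservice.yaml"
```

Because the URI and the header condition sit in the same match entry, a request has to satisfy both of them, in addition to carrying a Host header that matches the hosts list.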

After applying the new configuration with kubectl apply -f samples/bookinfo/networking/bookinfo-gateway.yaml, our sample application can only be reached with a request that matches these conditions.

We send this request from an HTTP scratch file in IntelliJ.

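The scratch file itself is not reproduced here. A roughly equivalent request can be sketched with curl instead. The host bookinfo.example.com and the cookie user=tester are placeholder assumptions that have to match whatever the VirtualService expects, and INGRESS_HOST and INGRESS_PORT are assumed to hold the address of the Istio ingress gateway.

```shell
# Placeholder defaults; set these to the address of your ingress gateway.
INGRESS_HOST="${INGRESS_HOST:-127.0.0.1}"
INGRESS_PORT="${INGRESS_PORT:-80}"

# Send the Host and Cookie headers and print only the HTTP status code.
curl -s -o /dev/null -w '%{http_code}\n' \
  -H 'Host: bookinfo.example.com' \
  -H 'Cookie: user=tester' \
  "http://${INGRESS_HOST}:${INGRESS_PORT}/productpage"
```

A request without the cookie or with a different Host header does not match any route and is expected to be answered with a 404 by the ingress gateway.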

With this we meet both conditions of our virtual service and are correctly forwarded to our productpage application.

If there are any errors here, the Kiali dashboard of the example helps us to see more detailed logs, traces, etc. We can open this dashboard with istioctl dashboard kiali once its rollout has finished.

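For reference, the sequence from Istio's getting started guide looks roughly like this. It assumes that the commands are run from the root of the extracted Istio release directory, which contains the samples/addons folder, and that Istio is installed in the istio-system namespace.

```shell
# Install the sample addons shipped with the Istio release (includes Kiali).
kubectl apply -f samples/addons

# Wait until the Kiali deployment has finished rolling out.
kubectl rollout status deployment/kiali -n istio-system

# Open the Kiali dashboard in the browser via a local port-forward.
istioctl dashboard kiali
```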

Another useful feature of Istio is the analyze function (istioctl analyze), which shows us errors or problems in our configuration.

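Two typical invocations, shown as a sketch. Which namespace is relevant depends on the setup; default is used here because the bookinfo sample was deployed there.

```shell
# Analyze the live configuration of the default namespace.
istioctl analyze -n default

# Analyze all namespaces in the cluster.
istioctl analyze --all-namespaces
```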

Is there a catch?

It sounds great, installs quickly and looks very practical - so what's the catch?

The catch with every service mesh implementation is the additional mental, physical and logical overhead that comes with using a tool such as Istio. It should also not be ignored that performance suffers, since every request passes through additional proxies, and that the cluster needs more resources. It is important to understand why and, above all, whether you really need a service mesh architecture.

In general, a service mesh makes sense in growing, increasingly complex microservice architectures. What is certain is that Istio makes error analysis a lot easier and faster.

Do you need help with your Service Mesh implementation or do you have further questions? Contact us!

This text was automatically translated with our golang markdown translator.