For me personally, 2022 is all about distributed systems. For this reason, a new TechUp series will deal with distributed systems in general, as well as with designing them using Elixir and its ecosystem. First, we will focus on Elixir in order to get a practical grasp of the basic concepts of distributed systems through a programming language. After that, we will look at theoretical concepts as well as more advanced topics around distributed systems. The material is divided into the following two TechUp series:

- Elixir Series

- Distributed System Series

Last year at b-nova we took a close look at many new technologies and especially trendy programming languages like Kotlin, Go and Rust. Elixir now joins this line-up. It is not only the most recently released of these languages but, apart from Rust, also brings a completely new paradigm with functional programming, as well as an actor-based runtime. Both features deserve emphasis. The Elixir Series will be split into three parts, because I would like to shed a little more light on the concepts behind Elixir so that its benefits can be better understood. Let’s take a step back and start with the beginnings of the language.

The demonym of an Elixir developer is quite intuitively an Alchemist. ⚗️🤓

I. From Erlang to Elixir

Elixir itself is not necessarily known to every developer, but its predecessor and forefather Erlang is. If one wants to understand Elixir as a programming language and its innovative possibilities, it certainly makes sense to try to understand Elixir in the context of Erlang. So we have to go back in time about 35 years, to the end of the 1980s, shortly before the internet was able to establish its first intercontinental connection.

In 1986, engineers at the Stockholm-based telecommunications company Ericsson faced a crucial problem. They wanted to enable reliable digital telephone switching, i.e. the connection of calls, which until then had been done manually in telephone exchanges. The most important criterion of this application was zero downtime in order to ensure uninterrupted telephony. This prompted Joe Armstrong to develop a new programming language. Today we know this language as Erlang, a name that honours the Danish mathematician Agner Krarup Erlang and is often also read as Ericsson Language. The properties of Erlang and its runtime were not predetermined at that time; they are a product of the initial problem, namely ensuring the reliable operation of switching centres. The resulting properties can be summarised in the following four key points:

- Green Threads: Threads are scheduled by the VM and not by the operating system.

- Advanced Message Queuing Protocol (AMQP): Open Standard Application Layer Protocol for Message-oriented Middleware.

- Continuous Delivery: Short roll-out cycles.

- Functional Programming: Functional paradigm.

From today’s perspective, it quickly becomes clear that Erlang already had features at the start that we regularly make use of today. Joe Armstrong also recognised this and made the following statement at an ElixirConf a few years ago:

“The rise in popularity of the Internet and the need for non-interrupted availability of services has extended the class of problems that Erlang can solve.” - Joe Armstrong (†2019)

If you want to know more about the history and evolution of Erlang, I highly recommend the article History of Erlang and Elixir by Serokell.

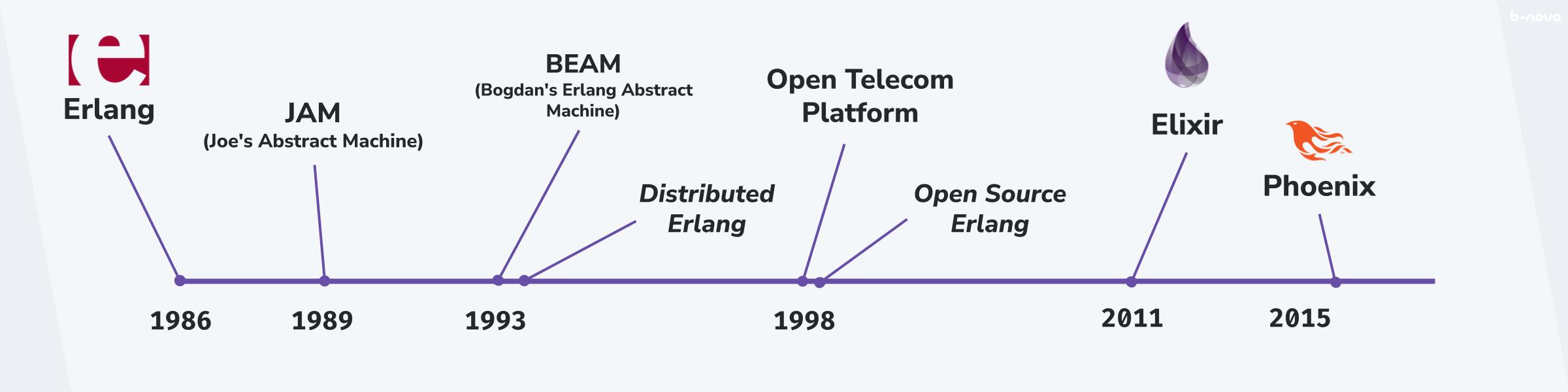

The history of Elixir begins with the development and deployment of Erlang (1986) and the first full-fledged virtual machine, Joe’s Abstract Machine (1989), named after its developer Joe Armstrong. This virtual machine was then completely rewritten and has since been called BEAM, short for Bogdan’s/Björn’s Erlang Abstract Machine. The runtime was further developed so that several instances can be connected together and the individual processes distributed across the whole cluster of runtimes. This networking capability is marked Distributed Erlang at the top of the timeline here. The Open Telecom Platform, or OTP for short, is the totality of all the tooling, libraries and standard functions in the Erlang landscape. Erlang and OTP were open-sourced in 1998 and have been freely available to the public ever since.

It took a little more than 10 years for Elixir to see the light of day. Elixir is basically a new take on Erlang and its OTP platform: it re-stages the Erlang language with a Ruby-like syntax and offers even more comfort through refined tooling. The core, however, remains the BEAM and OTP. Phoenix is Elixir’s web framework par excellence and just as important to Elixir as Ruby on Rails is to Ruby.

Before we go further into Elixir, I’d like to briefly show some Erlang code. Here I have a Hello World snippet in Erlang for you at the start.

hello-world.erl

|

|

In summary, Elixir is primarily a further development and modernisation of the Erlang programming language. It runs on the same virtual machine as Erlang, the Erlang Virtual Machine, and uses the same development tools of the Open Telecom Platform.

Installation and tooling

Since we are about to work through a practical example on the BEAM, we must first install the necessary tools. In a first step, we will set up Elixir and Erlang, which are installed separately. Here we use macOS as the target operating system, but the installation can also be done on Windows, GNU/Linux or Unix variants like BSD. Just follow the respective public documentation of Elixir and Erlang.

Elixir can be easily installed on macOS with Homebrew.

|

|

Alternatively, you can build Elixir from source.

|

|

For containerised build pipelines, Elixir provides official Hex.pm Docker images. More information on installation, for example for alternative operating systems like Windows can be found here. The installation includes 4 executables:

- elixir: The runtime

- elixirc: The compiler

- iex: Acronym for Interactive Elixir, the REPL for the language

- mix: The build tool

In addition to the executables, there are Elixir-specific modules such as ExUnit, EEx, Logger, as well as the Standard Library. Since Elixir makes extensive use of the OTP, we now also have to install Erlang. This can be done very quickly with a package manager like Homebrew:

|

|

At the time of writing this TechUp in March 2022, the latest Elixir version is 1.13.3. Thus, we now have this version along with Erlang/OTP version 24 at the start.

|

|

For Elixir there is also a package manager called Hex, which we can install here with Mix. With it we can install and manage external dependencies like Phoenix.

|

|

Now we are ready and armed with all the necessary tools to learn more about Elixir, Erlang, OTP and the BEAM. Let’s do this! 😄

II. The BEAM

As already mentioned several times, Elixir, just like Erlang, runs on a runtime that is often called the Erlang Virtual Machine. Its official name, however, is BEAM, an acronym for Bogdan’s/Björn’s Erlang Abstract Machine. Bogumil “Bogdan” Hausman wrote the first version of the runtime, and Björn Gustavsson is the current maintainer of the BEAM codebase. Both worked on BEAM as employees of Ericsson. The first characteristic I would like to mention is that BEAM is a register-based virtual machine, whereas the well-known JVM, for example, has a stack-based architecture. But this is not the only important difference between the BEAM and other conventional runtimes. Therefore, let’s take a closer look at the soul of the Elixir programming language, the BEAM.

💡 For the curious:

Register-based virtual machines keep their operands in virtual registers, modelled on a CPU’s operand registers. An operation names its source and target registers directly, so fewer instructions are needed, which potentially makes execution a bit faster. Example of a register operation:

ADD R1, R2, R3

A stack-based virtual machine, on the other hand, keeps its operands on a stack. Operands must first be taken from the stack and the result pushed back onto it, so the same operation needs several steps and carries more overhead. Example of a stack-based operation:

POP 20

POP 7

ADD 20, 7, result

PUSH result

Here is a related article about Elixir and the BEAM.

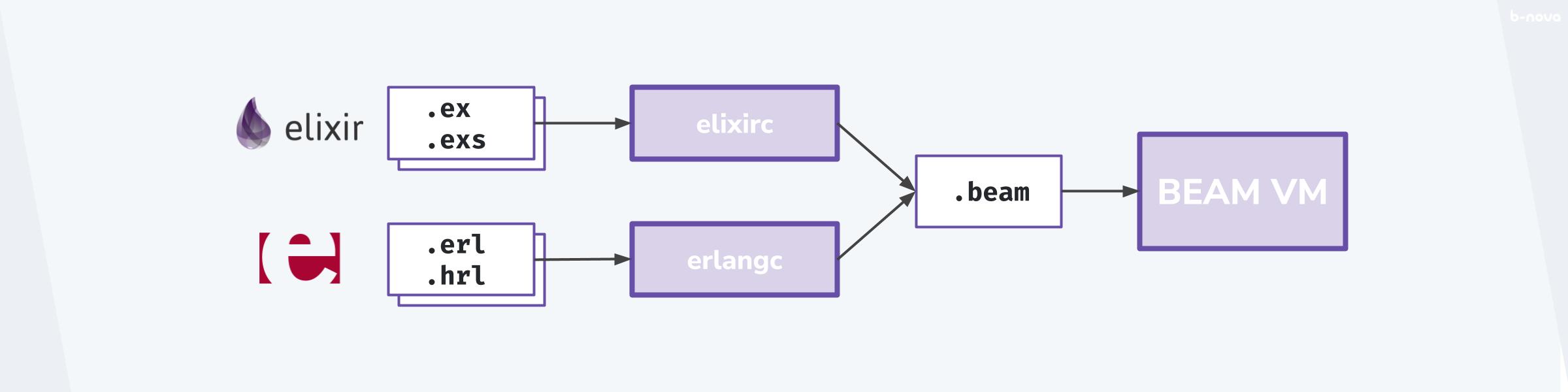

The BEAM executes .beam bytecode and thus behaves not unlike the JVM, which executes compiled .class bytecode. The two most prominent languages that target the BEAM are Erlang and Elixir. Both have their own source file extensions, and their sources are compiled to .beam bytecode by the respective compiler, either elixirc or erlc. The extensions for Elixir are the following two:

- .ex: Simply stands for Elixir and typically contains the source code of the actual application.

- .exs: Stands for Elixir Script. Such files are compiled in memory and executed directly without writing .beam files to disk, which makes them suitable for scripts, configuration and tests.

BEAM bytecode can also be disassembled, which would look like this for example:

|

|

Distributed System

Joe Armstrong realised early on that parallelisation of processes is the main feature of robust and fault-tolerant systems.

Initially, I wasn’t really interested in concurrency as such, I was interested in how you make fault-tolerant systems. A characteristic of these systems were that they handle hundreds of thousands of telephone calls at the same time. - Joe Armstrong (†2019)

The term Concurrency-Oriented Programming would certainly be appropriate here. Another insight Joe highlights in this context is the fact that systems are best conceptualised by taking the real world as a model and trying to represent it as best as possible in the abstract programming world.

The world is parallel. If we wish to write programs that behave as other objects behave in the real world then these programs will have a concurrent structure…People function as independent entities that communicate by sending messages. That’s how Erlang processes work… Erlang programs are made up of lots of little processes all chattering away to each other - just like people. - Joe Armstrong (†2019)

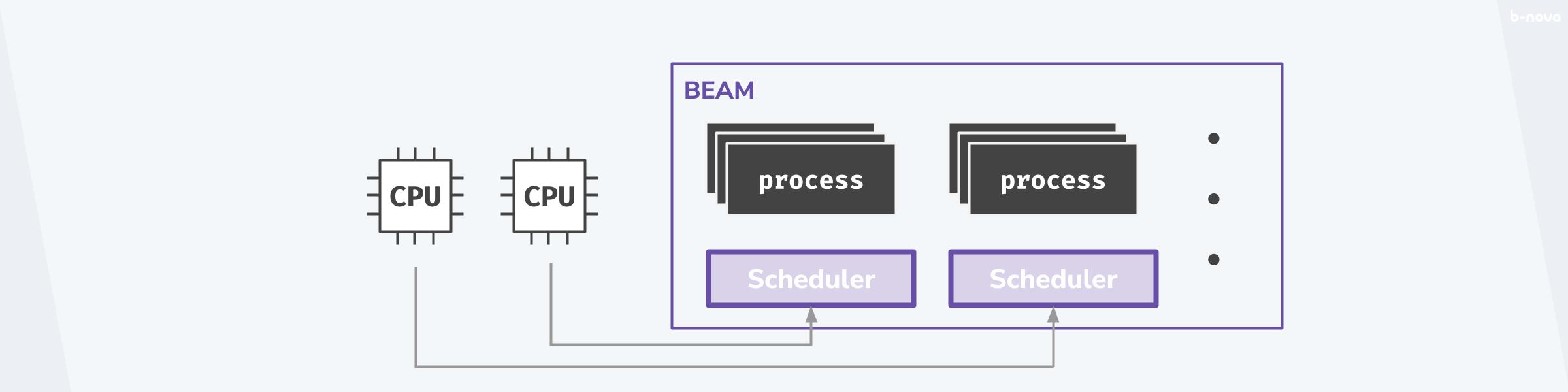

The BEAM is responsible for parallelising processes, which is called scheduling in OTP slang, just like in container orchestration with Kubernetes. For each CPU core, the BEAM starts one OS thread, a so-called scheduler, across which the Erlang processes are distributed at runtime. Several BEAM instances can additionally be connected over the network, which adds their CPU cores and forms a cluster across which processes run in parallel.
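To make the scheduling idea a little more tangible, here is a minimal sketch that can be pasted into iex. It asks the runtime how many schedulers are online and then spawns 10,000 lightweight processes that each send one message back. The message shape {:done, n} and the variable names are assumptions chosen for illustration and are not part of the demo project.

```elixir
# Number of scheduler threads the BEAM is currently using.
schedulers = System.schedulers_online()
IO.puts("Schedulers online: #{schedulers}")

# Spawn 10_000 lightweight processes; each sends one message back to the parent.
parent = self()

pids =
  for n <- 1..10_000 do
    spawn(fn -> send(parent, {:done, n}) end)
  end

# Collect exactly one reply per spawned process.
results =
  for _pid <- pids do
    receive do
      {:done, n} -> n
    end
  end

IO.puts("Received #{length(results)} replies")
```

The spawned processes are BEAM processes, not OS threads, which is why creating thousands of them is cheap; the schedulers decide on which core each one runs.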

Demonstration of the BEAM

There are many prominent promoters in the Erlang/Elixir community who promote the whole BEAM ecosystem and demonstrate its benefits at the various meetups and conferences. One of them is Saša Jurić, sometimes better known by his blog title The Erlangelist. Saša gave a talk at GOTO 2019 titled The Soul of Erlang and Elixir, wherein he presented BEAM in a practical way and succinctly summed up its key features in just one hour.

In the context of my TechUp, I will try to demonstrate these points following his example and hope to make the BEAM more attractive by doing so. There is an unofficial repo that is supposed to recreate Saša’s demo server. This can be downloaded and installed via Git using these instructions.

|

|

💡 Since the above repository is based on an older codebase and thus uses outdated versions of the runtimes in some cases, there is a shell utility designed for this called asdf. This is a so-called version manager with which you can easily switch to an old version of Elixir, Erlang or Node.js in the context of a project or directory.

The versions are stored in a .tool-versions file in the target directory. Now you have to make sure that you have installed the plugins for the respective runtimes, for example nodejs. Then simply execute the $ asdf install command in the project directory and enjoy the desired runtime version.

Let’s start the example_system server with mix as follows:

|

|

The server exposes a web interface that is reachable locally at http://localhost:4000. There you will find a number input field with which you can start a calculation. At http://localhost:4000/load you can see the server load.

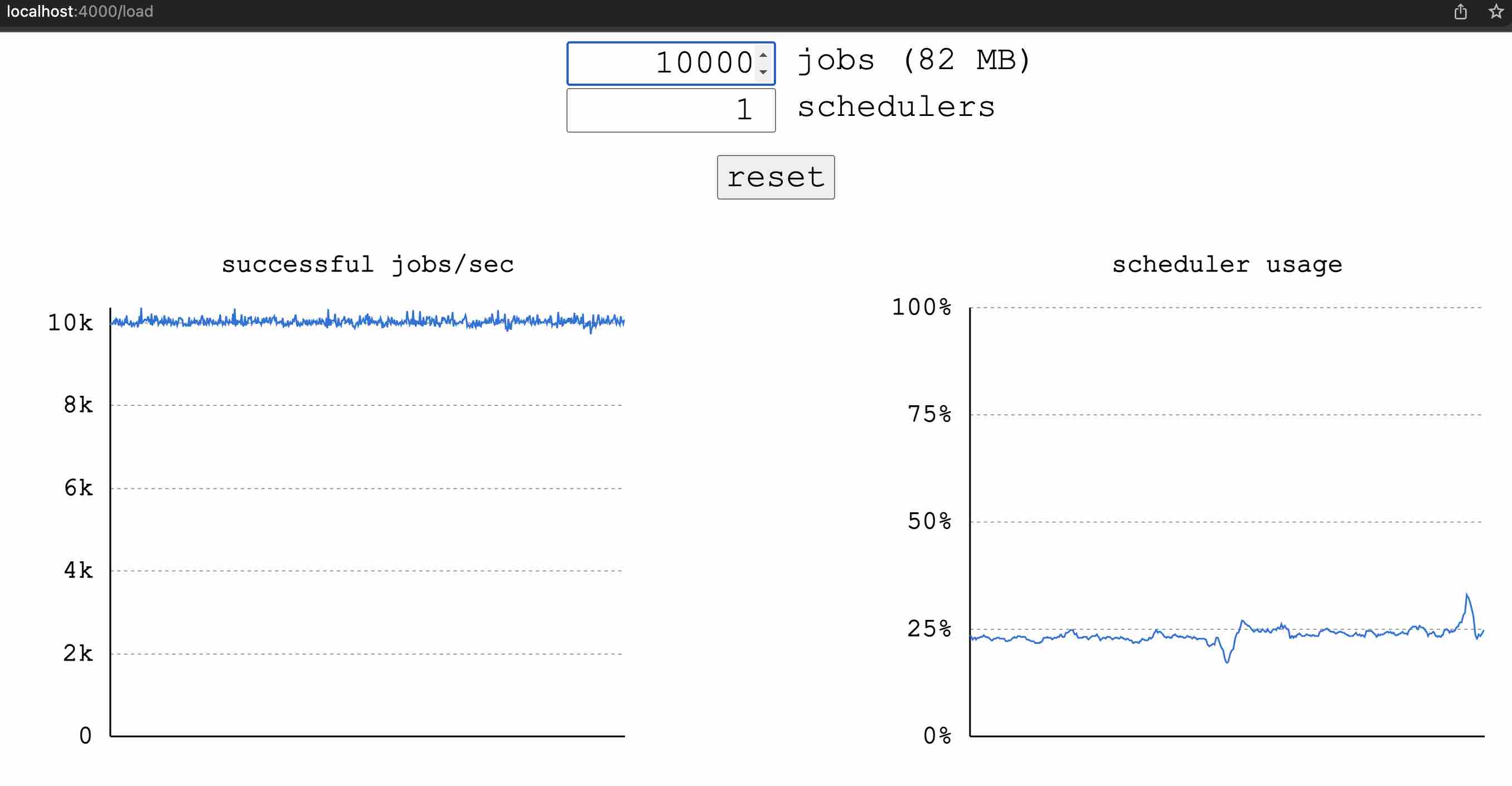

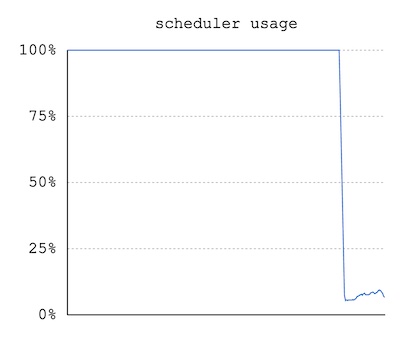

As we can see, we can start an arbitrary number of jobs, each of which performs a calculation in the background and returns a success status that is shown in real time in the graph on the left. We crank this number up to 10,000 and see that a single scheduler can cope with this. A scheduler here is a thread on which our jobs run, and each scheduler thread in the BEAM is assigned to a CPU core. My M1-based MacBook Pro has 10 CPU cores, so I could increase the number of schedulers, which corresponds to the number of cores used, up to 10.

If we now start a manual calculation in the input field at http://localhost:4000, CPU usage depends on the numerical value. With the value 1000, everything stays green and there is no significant influence on the base load of 10,000 processes. If we choose a high enough number, for example 999999999 (~10^9), it takes my M1 beast up to 3 seconds to get the result. For higher values we can expect the calculation time to grow proportionally, because the output makes it obvious that all numbers between 1 and the input value are summed up, i.e. 1 + 2 + … + 999999999.

Something is wrong

When trying it out, one quickly notices that the value 13 produces a technical error and negative values take forever. The negative values are the exciting case, because once you have entered one, the calculation obviously never terminates. So we come to our actual use case and the practical part of the BEAM presentation. Let’s get to the bottom of this misbehaviour.

To do this, we look at the REPL to see what processes are running. This can be done easily with the Process module:

|

|
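As a minimal sketch of such a lookup, Process.list/0 returns the PIDs of all processes on the local node. Which exact call the demo used at this point is an assumption; the labels below are only illustrative.

```elixir
# Process.list/0 returns the PIDs of all processes on the local node.
pids = Process.list()

IO.puts("#{length(pids)} processes are currently running")
IO.inspect(Enum.take(pids, 3), label: "first three PIDs")
```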

💡 Arity is an important term in Erlang and Elixir and denotes the number of parameters a function takes. Elixir allows several functions with the same name, but they are told apart by their arity, so name and arity together identify a function. For example, the function Process.list/0 has an arity of 0. Functions are therefore always written as their name followed by a slash and the arity.
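A short sketch may help here. The module Greeter is hypothetical and only serves to show that two functions with the same name but different arities are distinct functions.

```elixir
defmodule Greeter do
  # greet/1: one parameter
  def greet(name), do: "Hello, #{name}!"

  # greet/2: same name, different arity, therefore a different function
  def greet(greeting, name), do: "#{greeting}, #{name}!"
end

IO.puts(Greeter.greet("Alchemist"))
IO.puts(Greeter.greet("Welcome", "Alchemist"))

# A function is captured by its name and arity.
greet_one = &Greeter.greet/1
IO.puts(greet_one.("BEAM"))
```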

If you take the output of Process.list/0 and want to represent its head in isolation, you can do so quite functionally as follows:

|

|
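One possible way to write this, assuming the pipe operator is what is meant by "functionally", is to pipe the list into hd/1 and then into Process.info/1. This is a sketch and not necessarily the exact expression from the demo.

```elixir
# Take the first PID of the process list and fetch its info keyword list.
info =
  Process.list()
  |> hd()
  |> Process.info()

IO.inspect(info[:reductions], label: "reductions")
IO.inspect(info[:status], label: "status")
```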

The reductions field counts the reductions a process has executed, roughly the number of function calls, and is thus perfect for finding out whether a given process needs a lot of CPU power. The difference between the reductions values at two points in time t1 and t2 is the measure of this CPU usage. Of course, we now want to collect this information for all processes present at a given time and sort them by this value. A custom module called Runtime provides exactly this with the function Runtime.top.
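The idea of comparing two reductions snapshots can be sketched as follows. The module ReductionSample, its function names and its default values are hypothetical and are not the Runtime module of the demo project; the sketch only illustrates the technique of sampling at t1 and t2 and sorting by the difference.

```elixir
defmodule ReductionSample do
  # Hypothetical helper: returns up to `limit` {pid, delta} tuples,
  # sorted by the reductions consumed during `interval_ms`.
  def top(interval_ms \\ 1_000, limit \\ 5) do
    before = snapshot()
    Process.sleep(interval_ms)
    later = snapshot()

    later
    |> Enum.flat_map(fn {pid, count_t2} ->
      case Map.fetch(before, pid) do
        {:ok, count_t1} -> [{pid, count_t2 - count_t1}]
        # Process did not exist at t1, so there is no difference to compute.
        :error -> []
      end
    end)
    |> Enum.sort_by(fn {_pid, delta} -> delta end, :desc)
    |> Enum.take(limit)
  end

  # Maps every live PID to its current reductions count.
  # Processes that died in the meantime return nil and are skipped.
  defp snapshot do
    for pid <- Process.list(),
        {:reductions, count} <- [Process.info(pid, :reductions)],
        into: %{} do
      {pid, count}
    end
  end
end

IO.inspect(ReductionSample.top(200, 3), label: "busiest processes")
```

The first element of the result is the process that did the most work during the sampling interval, which is the candidate to inspect further.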

|

|

Now we store the PID of the most CPU-intensive process in a variable of the same name.

|

|

💡 In Elixir, an atom is a specific and important data type of its own. By definition, an atom is a constant whose value is also its name. Atoms are used wherever a value is known in advance and is embedded explicitly in the code. You can recognise atoms by their leading colon. Example: :current_stacktrace
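A few lines in iex illustrate this. The variable name status is chosen only for illustration.

```elixir
status = :ok

# An atom is a constant whose value is its own name.
IO.inspect(is_atom(status), label: "is :ok an atom?")
IO.inspect(Atom.to_string(:current_stacktrace), label: "name as string")

# Booleans, nil and module names are atoms as well.
IO.inspect(is_atom(true), label: "is true an atom?")
IO.inspect(is_atom(nil), label: "is nil an atom?")
IO.inspect(is_atom(Process), label: "is Process an atom?")
```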

With the Process module we can retrieve the stacktrace of the process. To do this, we pass the atom :current_stacktrace as the second argument.

|

|
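As a sketch of this call, the following lines use Process.info/2 with :current_stacktrace. For lack of the demo's runaway process, the sketch inspects the calling process via self(); in the demo you would pass the PID found earlier instead.

```elixir
# Process.info/2 with :current_stacktrace returns a tagged tuple.
{:current_stacktrace, frames} = Process.info(self(), :current_stacktrace)

# Each frame has the shape {module, function, arity, location}.
for {module, function, arity, _location} <- frames do
  IO.puts("#{inspect(module)}.#{function}/#{inspect(arity)}")
end
```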

There is also a function in our Runtime module that traces the process and outputs up to 50 trace entries.

|

|

Here we can easily see that a function calc_sum from the ExampleSystem.Math module is always called.

|

|

After the kill -9, we see that the total CPU usage drops abruptly, which confirms that the most CPU-intensive process has been correctly identified and terminated.
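How such a forced termination can look is sketched below. The module Busy is a hypothetical stand-in for the runaway calculation, and the use of Process.exit/2 with :kill is an assumption about how the demo terminates the process.

```elixir
defmodule Busy do
  # Hypothetical stand-in for a runaway calculation: loops forever.
  def spin, do: spin()
end

pid = spawn(&Busy.spin/0)
ref = Process.monitor(pid)

# The :kill reason cannot be trapped, comparable to kill -9 for an OS process.
Process.exit(pid, :kill)

# Wait for the monitor message that confirms the termination.
reason =
  receive do
    {:DOWN, ^ref, :process, ^pid, reason} -> reason
  after
    1_000 -> :timeout
  end

IO.inspect(reason, label: "exit reason")
IO.inspect(Process.alive?(pid), label: "still alive?")
```

Note that all other processes keep running while the busy one is killed, because the schedulers preempt even a process that never yields voluntarily.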

From the original trace output we know where to look for the error, namely in lib/example_system/math.ex. But before we jump into math.ex, let’s see if we can find something about Math in the web layer. Maybe we can already adjust something in the UI so that the value cannot be entered at all. A quick search turns it up: there is a lib/example_system_web/math/sum.ex, which has the following start_sum function:

|

|

The problem is that the function does not consider that the input may also be a number less than or equal to 0 and not only a natural number. This can be fixed by checking for this case. Exactly this check is already present in a comment, so the code only needs to be uncommented to fix the problem.

|

|
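Such a check can be expressed with a guard clause. The following is a sketch under assumptions: the module SumInput and the function validate/1 are hypothetical and do not reproduce the demo's start_sum function; only the "invalid input" message is taken from the behaviour described here.

```elixir
defmodule SumInput do
  # Accept only natural numbers greater than 0.
  def validate(input) when is_integer(input) and input > 0, do: {:ok, input}

  # Everything else, including 0 and negative numbers, is rejected.
  def validate(_input), do: {:error, "invalid input"}
end

IO.inspect(SumInput.validate(1000))
IO.inspect(SumInput.validate(-5))
```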

This change is automatically recognised by Phoenix, recompiled and reloaded. Then you can look and see! When negative numerical values are input, “invalid input” is output as the answer.

So, back to our Math module, something is obviously still wrong there. In math.ex there are the following 4 private calc_sum functions with different arities.

|

|

Here we can already see that the value 13 is caught beforehand and a technical error is thrown with raise("error"). Furthermore, the whole logic seems a bit suspect and possibly inefficient.

|

|
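One way to make the summation both safe and efficient is the closed form 1 + 2 + … + n = n · (n + 1) / 2. The module ClosedFormSum below is a hypothetical sketch of that idea and is not the fix applied in the demo project.

```elixir
defmodule ClosedFormSum do
  # Gauss's closed form: 1 + 2 + ... + n = n * (n + 1) / 2.
  # The guard rejects negative input instead of recursing forever.
  def calc_sum(n) when is_integer(n) and n >= 0 do
    div(n * (n + 1), 2)
  end
end

IO.inspect(ClosedFormSum.calc_sum(10))
IO.inspect(ClosedFormSum.calc_sum(13))
IO.inspect(ClosedFormSum.calc_sum(999_999_999))
```

Because the result no longer depends on a loop over all values, the calculation time stays constant regardless of the input, and the value 13 needs no special treatment.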

To verify the change, we can run the tests for this logic again.

|

|

And roll out the compiled changes to the running cluster.

|

|

Alternatively, if you are still logged into the REPL, you can compile the project again and let the changes roll out automatically.

|

|

Now the error on negative input should be gone and the calculation should perform much better. Well done! Let’s record a few key points about the BEAM. 🚀

BEAM in a nutshell

The Erlang Virtual Machine provides a variety of unique features that you will look for in vain in other stacks. These features can be summarised as follows:

- Distributed from the ground up (distributed).

- Copes with faulty processes (fault-tolerant).

- Schedules processes fairly so that everything is processed as quickly as possible (soft real-time).

- Ensures that everything is always running and scaled (highly available, non-stop application).

- Bytecode can be replaced with new code at any time (hot-swappable).

Conclusion

Today we took a brief historical look at the emergence of the Elixir programming language, the foundations of which were laid as early as 1986 with the Erlang programming language developed by Joe Armstrong. In addition to installation and tooling, we introduced the BEAM, Elixir’s runtime, demonstrated how to fix a bug and rolled out the improved code to the running cluster. The advantages that Elixir brings in combination with the BEAM are immense and should make every developer take a closer look.

In the next part of the Elixir series, we will get a deeper insight into the principles of Elixir programming and deepen the new knowledge directly with an example.